The Language Instinct: How the Mind Creates Language (13 page)

Authors: Steven Pinker

But the device does not work. *Either* must be followed later in a sentence by *or*; no one says *Either the girl eats ice cream, then the girl eats candy*. Similarly, *if* requires *then*; no one says *If the girl eats ice cream, or the girl likes candy*. But to satisfy the desire of a word early in a sentence for some other word late in the sentence, the device has to remember the early word while it is churning out all the words in between. And that is the problem: a word-chain device is an amnesiac, remembering only which word list it has just chosen from, nothing earlier. By the time it reaches the *or/then* list, it has no means of remembering whether it said *if* or *either* way back at the beginning. From our vantage point, peering down at the entire road map, we can remember which choice the device made at the first fork in the road, but the device itself, creeping antlike from list to list, has no way of remembering.
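The amnesia can be made concrete with a toy word-chain device. What follows is a minimal sketch, not a model from the text: the state names (`START`, `CLAUSE1`, `LINK`, `CLAUSE2`) and the tiny word lists are assumptions chosen for illustration. The only thing the device knows at any moment is which list it has just chosen from.

```python
# A toy word-chain device. Each key names the list the device is about to
# choose from; each entry pairs some words with the list that comes next.
# The state names and word lists are hypothetical, chosen for illustration.
CHAIN = {
    "START": [("either", "CLAUSE1"), ("if", "CLAUSE1")],
    "CLAUSE1": [("the girl eats ice cream", "LINK")],
    "LINK": [("or", "CLAUSE2"), ("then", "CLAUSE2")],
    "CLAUSE2": [("the girl eats candy", "END")],
}


def all_sentences(state="START"):
    """Enumerate every word sequence the chain can produce from `state`."""
    if state == "END":
        return [[]]
    results = []
    for words, next_state in CHAIN[state]:
        for rest in all_sentences(next_state):
            results.append([words] + rest)
    return results


sentences = [" ".join(parts) for parts in all_sentences()]
for sentence in sentences:
    print(sentence)
```

Because the `LINK` list is reached the same way whether the sentence began with *either* or with *if*, the device produces all four combinations, including the two mismatched ones that no one says.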

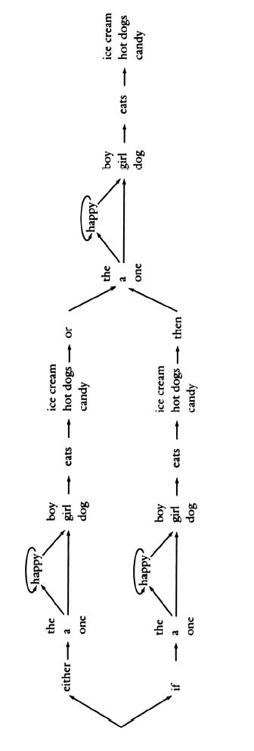

Now, you might think it would be a simple matter to redesign the device so that it does not have to remember early choices at late points in the sentence. For example, one could join up *either* and *or* and all the possible word sequences in between into one giant sequence, and *if* and *then* and all the sequences in between as a second giant sequence, before returning to a third copy of the sequence, yielding a chain so long I have to print it sideways (“Chapter 4”). There is something immediately disturbing about this solution: there are three identical subnetworks. Clearly, whatever people can say between an *either* and an *or*, they can say between an *if* and a *then*, and also after the *or* or the *then*. But this ability should come naturally out of the design of whatever the device is in people’s heads that allows them to speak. It shouldn’t depend on the designer’s carefully writing down three identical sets of instructions (or, more plausibly, on the child’s having to learn the structure of the English sentence three different times, once between *if* and *then*, once between *either* and *or*, and once after a *then* or an *or*).

But Chomsky showed that the problem is even deeper. Each of these sentences can be embedded in any of the others, including itself:

If either the girl eats ice cream or the girl eats candy, then the boy eats hot dogs.

Either if the girl eats ice cream then the boy eats ice cream, or if the girl eats ice cream then the boy eats candy.

For the first sentence, the device has to remember *if* and *either* so that it can continue later with *or* and *then*, in that order. For the second sentence, it has to remember *either* and *if* so that it can complete the sentence with *then* and *or*. And so on. Since there’s no limit in principle to the number of *if*’s and *either*’s that can begin a sentence, each requiring its own order of *then*’s and *or*’s to complete it, it does no good to spell out each memory sequence as its own chain of lists; you’d need an infinite number of chains, which won’t fit inside a finite brain.
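What the embedded sentences call for is a memory that can grow: each opening word leaves a promise, and the most recent promise must be kept first. Here is a minimal sketch of that bookkeeping, using a stack. The helper names `tokenize` and `dependencies_satisfied` are hypothetical, and the sketch makes the simplifying assumption that every *or* and *then* in the input is there to complete an earlier *either* or *if*.

```python
# Which later word each early word demands.
CLOSER = {"if": "then", "either": "or"}


def tokenize(sentence):
    """Lowercase the sentence and split it into words, dropping , and ."""
    return sentence.lower().replace(",", " ").replace(".", " ").split()


def dependencies_satisfied(words):
    """Check that every 'if' gets its 'then' and every 'either' its 'or',
    with inner pairs completed before outer ones."""
    pending = []  # stack of words still owed, most recent promise on top
    for word in words:
        if word in CLOSER:
            pending.append(CLOSER[word])
        elif word in CLOSER.values():
            # A closing word must match the most recent unfulfilled promise.
            if not pending or pending.pop() != word:
                return False
    return not pending  # nothing may be left owing at the end


first = tokenize(
    "If either the girl eats ice cream or the girl eats candy, "
    "then the boy eats hot dogs."
)
second = tokenize(
    "Either if the girl eats ice cream then the boy eats ice cream, "
    "or if the girl eats ice cream then the boy eats candy."
)
print(dependencies_satisfied(first))
print(dependencies_satisfied(second))
```

The stack can hold any number of pending promises, which is exactly what a device that remembers only its current list cannot do. A fixed set of chains would need a separate chain for every possible stack.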

This argument may strike you as scholastic. No real person ever begins a sentence with *Either either if either if if*, so who cares whether a putative model of that person can complete it with *then…then…or…then…or…or*? But Chomsky was just adopting the esthetic of the mathematician, using the interaction between *either-or* and *if-then* as the simplest possible example of a property of language (its use of “long-distance dependencies” between an early word and a later one) to prove mathematically that word-chain devices cannot handle these dependencies.

The dependencies, in fact, abound in languages, and mere mortals use them all the time, over long distances, often handling several at once: just what a word-chain device cannot do. For example, there is an old grammarian’s saw about how a sentence can end in five prepositions. Daddy trudges upstairs to Junior’s bedroom to read him a bedtime story. Junior spots the book, scowls, and asks, “Daddy, what did you bring that book that I don’t want to be read to out of up for?” By the point at which he utters *read*, Junior has committed himself to holding four dependencies in mind: *to be read* demands *to*, *that book that* requires *out of*, *bring* requires *up*, and *what* requires *for*. An even better, real-life example comes from a letter to *TV Guide*:

How Ann Salisbury can claim that Pam Dawber’s anger at not receiving her fair share of acclaim for *Mork and Mindy*’s success derives from a fragile ego escapes me.

At the point just after the word *not*, the letter-writer had to keep four grammatical commitments in mind: (1) *not* requires *-ing* (her anger at *not* receiv*ing* acclaim); (2) *at* requires some kind of noun or gerund (her anger *at* not *receiving acclaim*); (3) the singular subject *Pam Dawber’s anger* requires the verb fourteen words downstream to agree with it in number (Dawber’s *anger…derives* from); (4) the singular subject beginning with *How* requires the verb twenty-seven words downstream to agree with it in number (*How…escapes* me). Similarly, a reader must keep these dependencies in mind while interpreting the sentence. Now, technically speaking, one could rig up a word-chain model to handle even these sentences, as long as there is some actual limit on the number of dependencies that the speaker need keep in mind (four, say). But the degree of redundancy in the device would be absurd; for each of the thousands of *combinations* of dependencies, an identical chain must be duplicated inside the device. In trying to fit such a superchain in a person’s memory, one quickly runs out of brain.

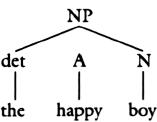

The difference between the artificial combinatorial system we see in word-chain devices and the natural one we see in the human brain is summed up in a line from the Joyce Kilmer poem: “Only God can make a tree.” A sentence is not a chain but a tree. In a human grammar, words are grouped into phrases, like twigs joined in a branch. The phrase is given a name—a mental symbol—and little phrases can be joined into bigger ones.

Take the sentence *The happy boy eats ice cream*. It begins with three words that hang together as a unit, the noun phrase *the happy boy*. In English a noun phrase (NP) is composed of a noun (N), sometimes preceded by an article or “determiner” (abbreviated “det”) and any number of adjectives (A). All this can be captured in a rule that defines what English noun phrases look like in general. In the standard notation of linguistics, an arrow means “consists of,” parentheses mean “optional,” and an asterisk means “as many of them as you want,” but I provide the rule just to show that all of its information can be captured precisely in a few symbols; you can ignore the notation and just look at the translation into ordinary words below it:

NP → (det) A* N

“A noun phrase consists of an optional determiner, followed by any number of adjectives, followed by a noun.”

The rule defines an upside-down tree branch:

Here are two other rules, one defining the English sentence (S), the other defining the predicate or verb phrase (VP); both use the NP symbol as an ingredient:

S → NP VP

“A sentence consists of a noun phrase followed by a verb phrase.”

VP → V NP

“A verb phrase consists of a verb followed by a noun phrase.”
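The three rules are compact enough to write out as a small program. The following is only a sketch under stated assumptions: the `LEXICON` is a hypothetical handful of words, *ice cream* is treated as a single token, and the function names `parse_np`, `parse_vp`, and `parse_s` are invented for illustration. Each function corresponds to one rule and returns a tree rather than a flat chain of words.

```python
# A hypothetical mini-lexicon; "ice cream" is treated as a single token.
LEXICON = {
    "the": "det", "a": "det",
    "happy": "A", "tall": "A",
    "boy": "N", "girl": "N", "ice cream": "N", "candy": "N",
    "eats": "V", "likes": "V",
}


def category(words, i):
    """Return the category of words[i], or None if i is out of range."""
    return LEXICON.get(words[i]) if i < len(words) else None


def parse_np(words, i):
    """NP -> (det) A* N. Return (tree, next_index), or None on failure."""
    children = []
    if category(words, i) == "det":       # optional determiner
        children.append(("det", words[i]))
        i += 1
    while category(words, i) == "A":      # any number of adjectives
        children.append(("A", words[i]))
        i += 1
    if category(words, i) == "N":         # obligatory noun
        children.append(("N", words[i]))
        return ("NP", children), i + 1
    return None


def parse_vp(words, i):
    """VP -> V NP. Return (tree, next_index), or None on failure."""
    if category(words, i) != "V":
        return None
    result = parse_np(words, i + 1)
    if result is None:
        return None
    np_tree, j = result
    return ("VP", [("V", words[i]), np_tree]), j


def parse_s(words):
    """S -> NP VP. Return a tree only if every word is used up."""
    result = parse_np(words, 0)
    if result is None:
        return None
    np_tree, i = result
    result = parse_vp(words, i)
    if result is None:
        return None
    vp_tree, j = result
    return ("S", [np_tree, vp_tree]) if j == len(words) else None


tree = parse_s(["the", "happy", "boy", "eats", "ice cream"])
print(tree)
```

Note that `parse_np` is written once and called in two places, for the subject and for the object. Nothing has to be copied out three times, which is the economy the word-chain device lacked.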