The Language Instinct: How the Mind Creates Language

Authors: Steven Pinker

There is already some evidence that the linguistic brain might be organized in this tortuous way. The neurosurgeon George Ojemann, following up on Penfield’s methods, electrically stimulated different sites in conscious, exposed brains. He found that stimulating within a site no more than a few millimeters across could disrupt a single function, like repeating or completing a sentence, naming an object, or reading a word. But these dots were scattered over the brain (largely, but not exclusively, in the perisylvian regions) and were found in different places in different individuals.

From the standpoint of what the brain is designed to do, it would not be surprising if language subcenters are idiosyncratically tangled or scattered over the cortex. The brain is a special kind of organ, the organ of computation, and unlike an organ that moves stuff around in the physical world such as the hip or the heart, the brain does not need its functional parts to have nice cohesive shapes. As long as the connectivity of the neural microcircuitry is preserved, its parts can be put in different places and do the same thing, just as the wires connecting a set of electrical components can be haphazardly stuffed into a cabinet, or the headquarters of a corporation can be located anywhere if it has good communication links to its plants and warehouses. This seems especially true of words: lesions or electrical stimulation over wide areas of the brain can cause naming difficulties. A word is a bundle of different kinds of information. Perhaps each word is like a hub that can be positioned anywhere in a large region, as long as its spokes extend to the parts of the brain storing its sound, its syntax, its logic, and the appearance of the things it stands for.

The developing brain may take advantage of the disembodied nature of computation to position language circuits with some degree of flexibility. Say a variety of brain areas have the potential to grow the precise wiring diagrams for language components. An initial bias causes the circuits to be laid down in their typical sites; the alternative sites are then suppressed. But if those first sites get damaged within a certain critical period, the circuits can grow elsewhere. Many neurologists believe that this is why the language centers are located in unexpected places in a significant minority of people. Birth is traumatic, and not just for the familiar psychological reasons. The birth canal squeezes the baby’s head like a lemon, and newborns frequently suffer small strokes and other brain insults. Adults with anomalous language areas may be the recovered victims of these primal injuries. Now that MRI machines are common in brain research centers, visiting journalists and philosophers are sometimes given pictures of their brains to take home as a souvenir. Occasionally the picture will reveal a walnut-sized dent, which, aside from some teasing from friends who say they knew it all along, bespeaks no ill effects.

There are other reasons why language functions have been so hard to pin down in the brain. Some kinds of linguistic knowledge might be stored in multiple copies, some of higher quality than others, in several places. Also, by the time stroke victims can be tested systematically, they have often recovered some of their facility with language, in part by compensating with general reasoning abilities. And neurologists are not like electronics technicians who can wiggle a probe into the input or output line of some component to isolate its function. They must tap the whole patient via his or her eyes and ears and mouth and hands, and there are many computational waystations between the stimulus they present and the response they observe. For example, naming an object involves recognizing it, looking up its entry in the mental dictionary, accessing its pronunciation, articulating it, and perhaps also monitoring the output for errors by listening to it. A naming problem could arise if any of these processes tripped up.

There is some hope that we will have better localization of mental processes soon, because more precise brain-imaging technologies are rapidly being developed. One example is functional MRI, which can measure, with much more precision than PET, how hard the different parts of the brain are working during different kinds of mental activity. Another is magnetoencephalography, which is like EEG but can pinpoint the part of the brain that an electromagnetic signal is coming from.

We will never understand language organs and grammar genes by looking only for postage-stamp-sized blobs of brain. The computations underlying mental life are caused by the wiring of the intricate networks that make up the cortex, networks with millions of neurons, each neuron connected to thousands of others, operating in thousandths of a second. What would we see if we could crank up the microscope and peer into the microcircuitry of the language areas? No one knows, but I would like to give you an educated guess. Ironically, this is both the aspect of the language instinct that we know the least about and the aspect that is the most important, because it is there that the actual causes of speaking and understanding lie. I will present you with a dramatization of what grammatical information processing might be like from a neuron’s-eye view. It is not something that you should take particularly seriously; it is simply a demonstration that the language instinct is compatible in principle with the billiard-ball causality of the physical universe, not just mysticism dressed up in a biological metaphor.

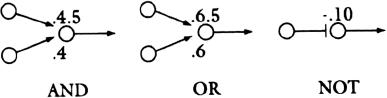

Neural network modeling is based on a simplified toy neuron. This neuron can do just a few things. It can be active or inactive. When active, it sends a signal down its axon (output wire) to the other cells it is connected to; the connections are called synapses. Synapses can be excitatory or inhibitory and can have various degrees of strength. The neuron at the receiving end adds up any signals coming in from excitatory synapses, subtracts any signals coming in from inhibitory synapses, and if the sum exceeds a threshold, the receiving neuron becomes active itself.
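The toy neuron just described fits in a few lines of Python. This is a minimal illustration, not a model of real neurons; the function name `toy_neuron`, the 0/1 encoding of inactive/active, and the sample weights are assumptions made for the example.

```python
from typing import Sequence

def toy_neuron(inputs: Sequence[int], weights: Sequence[float], threshold: float) -> int:
    """Return 1 (active) if the weighted sum of the inputs exceeds the threshold, else 0.

    Inputs are 0 (inactive) or 1 (active). Excitatory synapses get positive
    weights and inhibitory synapses negative ones, so one weighted sum does
    both the adding and the subtracting described in the text.
    """
    if len(inputs) != len(weights):
        raise ValueError("need exactly one weight per input")
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total > threshold else 0

# Two excitatory synapses (.5, .25) and one inhibitory synapse (-.5), threshold .5.
print(toy_neuron([1, 1, 0], [0.5, 0.25, -0.5], 0.5))  # sum .75 > .5, prints 1
print(toy_neuron([1, 1, 1], [0.5, 0.25, -0.5], 0.5))  # sum .25, prints 0
```

Note that the comparison is strict: a sum exactly equal to the threshold leaves the neuron inactive, matching "if the sum exceeds a threshold."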

A network of these toy neurons, if large enough, can serve as a computer, calculating the answer to any problem that can be specified precisely, just like the page-crawling Turing machine in Chapter 3 that could deduce that Socrates is mortal. That is because toy neurons can be wired together in a few simple ways that turn them into “logic gates,” devices that can compute the logical relations “and,” “or,” and “not” that underlie deduction. The meaning of the logical relation “and” is that the statement “A and B” is true if A is true and if B is true. An AND gate that computes that relation would be one that turns itself on if all of its inputs are on. If we assume that the threshold for our toy neurons is .5, then a set of incoming synapses whose weights are each less than .5 but that sum to greater than .5, say .4 and .4, will function as an AND gate, such as the one on the left here:

The meaning of the logical relation “or” is that a statement “A or B” is true if A is true or if B is true. Thus an OR gate must turn on if at least one of its inputs is on. To implement it, each synaptic weight must be greater than the neuron’s threshold, say .6, like the middle circuit in the diagram. Finally, the meaning of the logical relation “not” is that a statement “Not A” is true if A is false, and vice versa. Thus a NOT gate should turn its output off if its input is on, and vice versa. It is implemented by an inhibitory synapse, shown on the right, whose negative weight is sufficient to turn off an output neuron that is otherwise always on.
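The three gates can be checked with the same kind of threshold neuron. This Python sketch uses the numbers from the text (threshold .5, AND weights of .4, OR weights of .6). The text does not say how the NOT neuron is kept "otherwise always on," so the sketch assumes a constantly active input with weight .6 plus an inhibitory synapse of weight -1.0; those two values and the function names are illustrative choices.

```python
from itertools import product

THRESHOLD = 0.5  # the threshold assumed in the text

def fires(inputs, weights, threshold=THRESHOLD):
    """Toy neuron: active (1) if the weighted sum of inputs exceeds the threshold."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) > threshold else 0

def and_gate(a, b):
    # Each weight (.4) is below threshold, but together they exceed it (.8 > .5).
    return fires([a, b], [0.4, 0.4])

def or_gate(a, b):
    # Each weight (.6) exceeds the threshold on its own.
    return fires([a, b], [0.6, 0.6])

def not_gate(a):
    # Assumption: a constantly active input (weight .6) keeps the neuron on by
    # default; the inhibitory synapse (weight -1.0) overrides it when `a` is on.
    always_on = 1
    return fires([always_on, a], [0.6, -1.0])

for a, b in product([0, 1], repeat=2):
    print(a, b, "AND:", and_gate(a, b), "OR:", or_gate(a, b))
print("NOT 0:", not_gate(0), "NOT 1:", not_gate(1))
```

Other weights would work just as well, provided they satisfy the same inequalities: for AND, no single weight may exceed the threshold while the sum must; for OR, every weight must exceed it alone.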

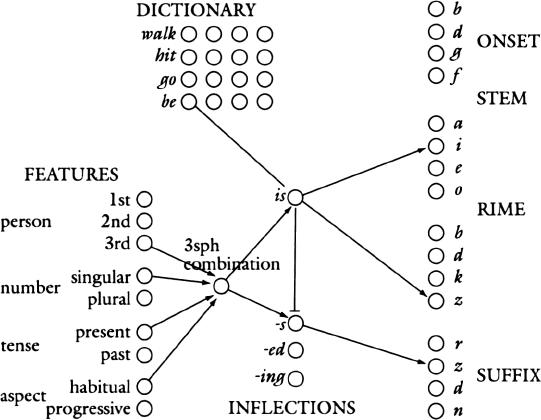

Here is how a network of neurons might compute a moderately complex grammatical rule. The English inflection *-s*, as in *Bill walks*, is a suffix that should be applied under the following conditions: when the subject is in the third person AND singular AND the action is in the present tense AND is done habitually (this is its “aspect,” in lingo), but NOT if the verb is irregular like *do, have, say,* or *be* (for example, we say *Bill is,* not *Bill be’s*). A network of neural gates that computes these logical relations looks like this:

First, there is a bank of neurons standing for inflectional features on the lower left. The relevant ones are connected via an AND gate to a neuron that stands for the combination third person, singular number, present tense, and habitual aspect (labeled “3sph”). That neuron excites a neuron corresponding to the *-s* inflection, which in turn excites the neuron corresponding to the phoneme *z* in a bank of neurons that represent the pronunciations of suffixes. If the verb is regular, this is all the computation that is needed for the suffix; the pronunciation of the stem, as specified in the mental dictionary, is simply copied over verbatim to the stem neurons by connections I have not drawn in. (That is, the form for *to hit* is just *hit + s*; the form for *to wug* is just *wug + s*.) For irregular verbs like *be*, this process must be blocked, or else the neural network would produce the incorrect *be’s*. So the 3sph combination neuron also sends a signal to a neuron that stands for the entire irregular form *is*. If the person whose brain we are modeling is intending to use the verb *be*, a neuron standing for the verb *be* is already active, and it, too, sends activation to the *is* neuron. Because the two inputs to *is* are connected as an AND gate, both must be on to activate *is*. That is, if and only if the person is thinking of *be* and third-person-singular-present-habitual at the same time, the *is* neuron is activated. The *is* neuron inhibits the *-s* inflection via a NOT gate formed by an inhibitory synapse, preventing *ises* or *be’s*, but activates the vowel *i* and the consonant *z* in the bank of neurons standing for the stem. (Obviously I have omitted many neurons and many connections to the rest of the brain.)
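To check that this wiring really yields *wug + s* and blocks *be’s*, here is a minimal Python simulation of it, reusing the .5 threshold assumed for the gates. The weights, the function names `fires` and `inflect`, the 0/1 encoding, and the rough phonemic spelling "iz" for *is* are assumptions for illustration; only the *is* branch of the irregulars is modeled, and, like the diagram, the sketch omits many neurons.

```python
def fires(inputs, weights, threshold=0.5):
    """Toy neuron: active (1) if the weighted input sum exceeds the threshold."""
    return 1 if sum(w * x for w, x in zip(weights, inputs)) > threshold else 0

def inflect(stem, third, singular, present, habitual, is_be=0):
    """Return (stem_output, suffix_output) for one verb, hand-wired for English.

    The feature arguments are 0/1 activations of the feature neurons; is_be is
    the activation of the neuron for the verb "be". Weights are illustrative.
    """
    # AND gate: "3sph" fires only if all four feature neurons are on (4 x .15 = .6 > .5).
    n_3sph = fires([third, singular, present, habitual], [0.15] * 4)
    # AND gate: the irregular-form neuron "is" needs both 3sph and "be".
    n_is = fires([n_3sph, is_be], [0.4, 0.4])
    # "-s" is excited by 3sph but inhibited by "is" (the NOT gate).
    n_s = fires([n_3sph, n_is], [0.6, -1.0])
    # "-s" excites the suffix phoneme z.
    n_z_suffix = fires([n_s], [0.6])
    suffix = "z" if n_z_suffix else ""
    # "is" drives the stem neurons for the vowel i and the consonant z;
    # otherwise the dictionary stem is copied over verbatim.
    stem_out = "iz" if n_is else stem
    return stem_out, suffix

print(inflect("wug", 1, 1, 1, 1))          # ('wug', 'z')
print(inflect("be", 1, 1, 1, 1, is_be=1))  # ('iz', '')
```

In the second call the *is* neuron silences the *-s* neuron, so no suffix is produced and the stem neurons spell out *is* instead of copying *be*.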

I have hand-wired this network, but the connections are specific to English and in a real brain would have to have been learned. Continuing our neural network fantasy for a while, try to imagine what this network might look like in a baby. Pretend that each of the pools of neurons is innately there. But wherever I have drawn an arrow from a single neuron in one pool to a single neuron in another, imagine a suite of arrows, from every neuron in one pool to every neuron in another. This corresponds to the child innately “expecting” there to be, say, suffixes for persons, numbers, tenses, and aspects, as well as possible irregular words for those combinations, but not knowing exactly which combinations, suffixes, or irregulars are found in the particular language. Learning them corresponds to strengthening some of the synapses at the arrowheads (the ones I happen to have drawn in) and letting the others stay invisible. This could work as follows. Imagine that when the infant hears a word with a *z* in its suffix, the *z* neuron in the suffix pool at the right edge of the diagram gets activated, and when the infant thinks of third person, singular number, present tense, and habitual aspect (parts of his construal of the event), those four neurons at the left edge get activated, too. If the activation spreads backwards as well as forwards, and if a synapse gets strengthened every time it is activated at the same time that its output neuron is already active, then all the synapses lining the paths between “3rd,” “singular,” “present,” “habitual” at one end, and “z” at the other end, get strengthened. Repeat the experience enough times, and the partly specified neonate network gets tuned into the adult one I have pictured.
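The learning story can be sketched as a simple Hebbian update. To keep it short, this Python version makes a simplification the text does not: it connects feature neurons directly to suffix-phoneme neurons, skipping the intermediate 3sph and *-s* neurons, and it replaces the backward-and-forward spread of activation by simply switching both ends on together. The feature list, learning rate, and training episodes are hypothetical.

```python
# Simplified Hebbian sketch (assumption): feature neurons connect directly to
# suffix-phoneme neurons; the intermediate 3sph and -s neurons are omitted.
FEATURES = ["1st", "3rd", "singular", "plural", "present", "past", "habitual"]
SUFFIX_PHONEMES = ["z", "d"]

def innate_weights(start=0.0):
    """All-to-all wiring: every feature neuron connects to every suffix neuron."""
    return {(f, p): start for f in FEATURES for p in SUFFIX_PHONEMES}

def hebbian_update(weights, active_features, active_suffixes, rate=0.1):
    """Strengthen each synapse whose input and output neurons are active together."""
    for f in active_features:
        for p in active_suffixes:
            weights[(f, p)] += rate

def suffix_activation(weights, active_features, phoneme):
    """Summed input that a suffix-phoneme neuron receives from the active features."""
    return sum(weights[(f, phoneme)] for f in active_features)

weights = innate_weights()
third_sg_pres_hab = {"3rd", "singular", "present", "habitual"}

# Hypothetical experiences: hearing a z suffix while construing a
# third-person singular present habitual event (as in "Bill walks") ...
for _ in range(20):
    hebbian_update(weights, third_sg_pres_hab, {"z"})
# ... and, for contrast, hearing a d suffix with a first-person past event.
for _ in range(5):
    hebbian_update(weights, {"1st", "singular", "past"}, {"d"})

print(suffix_activation(weights, third_sg_pres_hab, "z"))  # large
print(suffix_activation(weights, third_sg_pres_hab, "d"))  # small: only "singular" was shared
```

Synapses that never carried coincident activity, such as "past" to *z*, stay at their innate value of zero, which is the code's counterpart of the arrows that "stay invisible."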