The Language Instinct: How the Mind Creates Language (34 page)

Authors: Steven Pinker

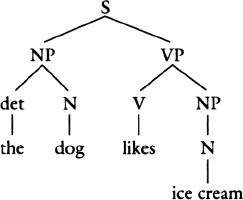

What does come next is *ice cream*, a noun, which can be part of an NP, just as the dangling NP branch predicts. The last pieces of the puzzle snap nicely together:

The word *ice cream* has completed the noun phrase, so it need not be kept in memory any longer; the NP has completed the verb phrase, so it can be forgotten, too; and the VP has completed the sentence. When memory has been emptied of all its incomplete dangling branches, we experience the mental “click” that signals that we have just heard a complete grammatical sentence.
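The bookkeeping in this walk-through can be sketched in a few lines of Python. This is only a toy illustration, not the book's parser: the three rules (S → NP VP, NP → det N or N, VP → V NP), the four-word lexicon, and the names `LEXICON`, `expand`, and `parse` are all assumptions made for the example, and the sentence is taken to be *the dog likes ice cream*. The list `stack` plays the role of the dangling branches; the "click" corresponds to the list being empty when the words run out.

```python
# Toy grammar and lexicon (assumptions for illustration, not the book's full rule set).
LEXICON = {"the": "det", "dog": "N", "likes": "V", "ice cream": "N"}


def expand(category, next_word_category):
    """Rewrite a phrase category into its parts; return None for a word category."""
    if category == "S":
        return ["NP", "VP"]
    if category == "VP":
        return ["V", "NP"]
    if category == "NP":
        # The only decision point here: peek at the next word to pick an NP rule.
        return ["det", "N"] if next_word_category == "det" else ["N"]
    return None


def parse(words):
    """Return (accepted, trace); trace records what is still needed after each word."""
    stack = ["S"]  # categories still needed; the most urgent one is at the end
    trace = []
    for word in words:
        word_cat = LEXICON[word]
        # Expand phrase categories until a word category is on top of the stack.
        while stack and expand(stack[-1], word_cat) is not None:
            parts = expand(stack.pop(), word_cat)
            stack.extend(reversed(parts))
        # The incoming word must be the kind of word the dangling branch predicts.
        if not stack or stack.pop() != word_cat:
            return False, trace
        trace.append((word, list(reversed(stack))))
    # An empty stack means no dangling branches remain: the sentence is complete.
    return len(stack) == 0, trace


accepted, trace = parse(["the", "dog", "likes", "ice cream"])
for word, still_needed in trace:
    print(f"{word!r:12} still needed: {still_needed}")
print("complete sentence:", accepted)
```

After *likes* the only item still needed is an NP, and after *ice cream* nothing is left, which is the emptied memory described above.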

As the parser has been joining up branches, it has been building up the meaning of the sentence, using the definitions in the mental dictionary and the principles for combining them. The verb is the head of its VP, so the VP is about liking. The NP inside the VP, *ice cream*, is the verb’s object. The dictionary entry for *likes* says that its object is the liked entity; therefore the VP is about being fond of ice cream. The NP to the left of a tensed verb is the subject; the entry for *likes* says that its subject is the one doing the liking. Combining the semantics of the subject with the semantics of the VP, the parser has determined that the sentence asserts that an aforementioned canine is fond of frozen confections.
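As a rough sketch of this combining step, the dictionary entry for *likes* can be written as a small table that says which role the subject and the object play. The entry format and the names `DICTIONARY`, `interpret`, `liker`, and `liked` are hypothetical, chosen only to mirror the wording above.

```python
# Hypothetical mental-dictionary entry: the roles assigned by the verb "likes".
DICTIONARY = {
    "likes": {"predicate": "like", "subject_role": "liker", "object_role": "liked"},
}


def interpret(subject_np, verb, object_np):
    """Combine subject, verb, and object into one meaning record."""
    entry = DICTIONARY[verb]
    # The verb heads the VP, so the VP is about the verb's predicate and its object.
    vp_meaning = {"predicate": entry["predicate"], entry["object_role"]: object_np}
    # The NP to the left of the tensed verb fills the subject role.
    return {**vp_meaning, entry["subject_role"]: subject_np}


meaning = interpret("the dog", "likes", "ice cream")
print(meaning)
```

The record pairs *the dog* with the liker role and *ice cream* with the liked role, which is all the sentence asserts.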

Why is it so hard to program a computer to do this? And why do people, too, suddenly find it hard to do this when reading bureaucratese and other bad writing? As we stepped our way through the sentence pretending we were the parser, we faced two computational burdens. One was memory: we had to keep track of the dangling phrases that needed particular kinds of words to complete them. The other was decision-making: when a word or phrase was found on the right-hand side of two different rules, we had to decide which to use to build the next branch of the tree. In accord with the first law of artificial intelligence, that the hard problems are easy and the easy problems are hard, it turns out that the memory part is easy for computers and hard for people, and the decision-making part is easy for people (at least when the sentence has been well constructed) and hard for computers.

A sentence parser requires many kinds of memory, but the most obvious is the one for incomplete phrases, the remembrance of things parsed. Computers must set aside a set of memory locations, usually called a “stack,” for this task; this is what allows a parser to use phrase structure grammar at all, as opposed to being a word-chain device. People, too, must dedicate some of their short-term memory to dangling phrases. But short-term memory is the primary bottleneck in human information processing. Only a few items—the usual estimate is seven, plus or minus two—can be held in the mind at once, and the items are immediately subject to fading or being overwritten. In the following sentences you can feel the effects of keeping a dangling phrase open in memory too long:

He gave the girl that he met in New York while visiting his parents for ten days around Christmas and New Year’s the candy.

He sent the poisoned candy that he had received in the mail from one of his business rivals connected with the Mafia to the police.

She saw the matter that had caused her so much anxiety in former years when she was employed as an efficiency expert by the company through.

That many teachers are being laid off in a shortsighted attempt to balance this year’s budget at the same time that the governor’s cronies and bureaucratic hacks are lining their pockets is appalling.

These memory-stretching sentences are called “top-heavy” in style manuals. In languages that use case markers to signal meaning, a heavy phrase can simply be slid to the end of the sentence, so the listener can digest the beginning without having to hold the heavy phrase in mind. English is tyrannical about order, but even English provides its speakers with some alternative constructions in which the order of phrases is inverted. A considerate writer can use them to save the heaviest for last and lighten the burden on the listener. Note how much easier these sentences are to understand:

He gave the candy to the girl that he met in New York while visiting his parents for ten days around Christmas and New Year’s.

He sent to the police the poisoned candy that he had received in the mail from one of his business rivals connected with the Mafia.

She saw the matter through that had caused her so much anxiety in former years when she was employed as an efficiency expert by the company.

It is appalling that teachers are being laid off in a shortsighted attempt to balance this year’s budget at the same time that the governor’s cronies and bureaucratic hacks are lining their pockets.

Many linguists believe that the reason that languages allow phrase movement, or choices among more-or-less synonymous constructions, is to ease the load on the listener’s memory.

As long as the words in a sentence can be immediately grouped into complete phrases, the sentence can be quite complex but still understandable:

Remarkable is the rapidity of the motion of the wing of the hummingbird.

This is the cow with the crumpled horn that tossed the dog that worried the cat that killed the rat that ate the malt that lay in the house that Jack built.

Then came the Holy One, blessed be He, and destroyed the angel of death that slew the butcher that killed the ox that drank the water that quenched the fire that burned the stick that beat the dog that bit the cat my father bought for two zuzim.

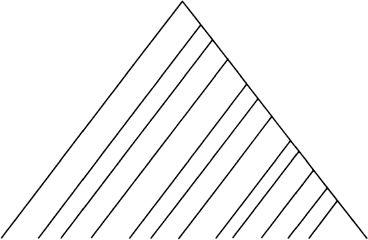

These sentences are called “right-branching,” because of the geometry of their phrase structure trees. Note that as one goes from left to right, only one branch has to be left dangling at a time:

Remarkable is the rapidity of the motion of the wing of the hummingbird

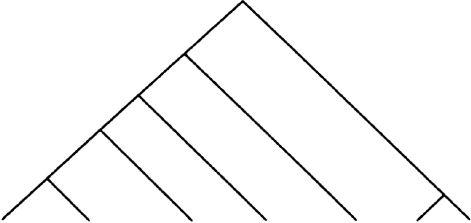

Sentences can also branch to the left. Left-branching trees are most common in head-last languages like Japanese but are found in a few constructions in English, too. As before, the parser never has to keep more than one dangling branch in mind at a time:

The hummingbird’s wing’s motion’s rapidity is remarkable

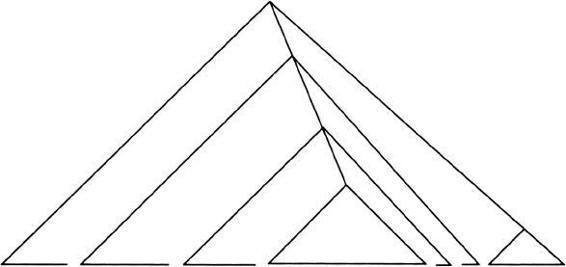

There is a third kind of tree geometry, but it goes down far less easily. Take the sentence

The rapidity that the motion has is remarkable.

The clause *that the motion has* has been embedded in the noun phrase containing *The rapidity*. The result is a bit stilted but easy to understand. One can also say

The motion that the wing has is remarkable.

But the result of embedding the *motion that the wing has* phrase inside the *rapidity that the motion has* phrase is surprisingly hard to understand:

The rapidity that the motion that the wing has has is remarkable.

Embedding a third phrase, like *the wing that the hummingbird has*, creating a triply embedded onion sentence, results in complete unintelligibility:

The rapidity that the motion that the wing that the hummingbird has has has is remarkable.

When the human parser encounters the three successive *has*’s, it thrashes ineffectively, not knowing what to do with them. But the problem is not that the phrases have to be held in memory too long; even short sentences are uninterpretable if they have multiple embeddings:

The dog the stick the fire burned beat bit the cat.

The malt that the rat that the cat killed ate lay in the house.

If if if it rains it pours I get depressed I should get help.

That that that he left is apparent is clear is obvious.
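The contrast between short onion sentences and long right-branching ones can be made concrete with a small counting sketch. Everything here is an assumption for illustration: the words are tagged by hand (a trailing `+` marks a subject still waiting for its verb, a trailing `-` marks the verb that completes the most recent one), the right-branching paraphrase is not from the text, and `max_pending` is a made-up name. The sketch only measures nesting; it does not explain why people fail on it.

```python
def max_pending(annotated_sentence):
    """Return the largest number of subjects simultaneously waiting for a verb."""
    stack, deepest = [], 0
    for token in annotated_sentence.split():
        if token.endswith("+"):    # a subject that still needs its verb
            stack.append(token[:-1])
            deepest = max(deepest, len(stack))
        elif token.endswith("-"):  # a verb completing the most recent subject
            stack.pop()
    return deepest


# Hand-annotated examples (the annotation scheme is an assumption of this sketch).
right_branching = "the fire+ burned- the stick that+ beat- the dog that+ bit- the cat"
onion = "the dog+ the stick+ the fire+ burned- beat- bit- the cat"

print(max_pending(right_branching))  # 1
print(max_pending(onion))            # 3
```

The onion sentence is the shorter of the two, yet it is the one that leaves three subjects open at once.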

Why does human sentence understanding undergo such complete collapse when interpreting sentences that are like onions or Russian dolls? This is one of the most challenging puzzles about the design of the mental parser and the mental grammar. At first one might wonder whether the sentences are even grammatical. Perhaps we got the rules wrong, and the real rules do not even provide a way for these words to fit together. Could the maligned word-chain device of Chapter 4, which has no memory for dangling phrases, be the right model of humans after all? No way; the sentences check out perfectly. A noun phrase can contain a modifying clause; if you can say *the rat*, you can say *the rat that S*, where S is a sentence missing an object that modifies *the rat*. And a sentence like *the cat killed X* can contain a noun phrase, such as its subject, *the cat*. So when you say *The rat that the cat killed*, you have modified a noun phrase with something that in turn contains a noun phrase. With just these two abilities, onion sentences become possible: just modify the noun phrase inside a clause with a modifying clause of its own. The only way to prevent onion sentences would be to claim that the mental grammar defines two different kinds of noun phrase, a kind that can be modified and a kind that can go inside a modifier. But that can’t be right: both kinds of noun phrase would have to be allowed to contain the same twenty thousand nouns, both would have to allow articles and adjectives and possessors in identical positions, and so on. Entities should not be multiplied unnecessarily, and that is what such tinkering would do. Positing different kinds of phrases in the mental grammar just to explain why onion sentences are unintelligible would make the grammar exponentially more complicated and would give the child an exponentially larger number of rules to record when learning the language. The problem must lie elsewhere.
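To see how little machinery is needed, here is a minimal sketch in which a single recursive function stands in for the two abilities: a noun phrase may take a modifying clause, and that clause's subject is itself a noun phrase. The function name `noun_phrase` and the way modifiers are passed as (subject, verb) pairs are assumptions made for the example, not notation from the text.

```python
def noun_phrase(noun, modifiers):
    """Build 'the N' or 'the N that <NP> <V>', where the inner NP may be modified too.

    modifiers is a list of (subject_noun, verb) pairs; the first pair modifies
    this noun, and each later pair modifies the subject of the pair before it.
    """
    if not modifiers:
        return f"the {noun}"
    (subject, verb), rest = modifiers[0], modifiers[1:]
    # The clause's subject is an ordinary noun phrase, so the same rule applies again.
    return f"the {noun} that {noun_phrase(subject, rest)} {verb}"


def sentence(noun, modifiers, predicate):
    """Attach a predicate to the (possibly onion-like) subject noun phrase."""
    text = f"{noun_phrase(noun, modifiers)} {predicate}."
    return text[0].upper() + text[1:]


print(sentence("rat", [("cat", "killed")], "ate the malt"))
print(sentence("malt", [("rat", "ate"), ("cat", "killed")], "lay in the house"))
```

The second call reproduces one of the short uninterpretable examples, and nothing in the function distinguishes a noun phrase that is modified from one that sits inside a modifier.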