Connectome

Authors: Sebastian Seung

Figure 37. Neurons of the retina reconstructed automatically by computer

Even with these improvements, the computer still makes errors. I'm confident that the application of machine learning will continue to reduce the error rate. But as the field of connectomics develops, computers will be called upon to analyze larger and larger images, and the absolute number of errors will remain large, even if the error rate is decreasing. In the foreseeable future, image analysis will never be 100 percent automatic (we will always need some element of human intelligence), but the process will speed up considerably.

***

It was the legendary inventor Doug Engelbart who first developed the idea of interacting with computers through a mouse. The full implications were not realized until the 1980s, when the personal-computer revolution swept the world. But Engelbart invented the mouse back in 1963, while directing a research team at the Stanford Research Institute, a California think tank. That same year, Marvin Minsky co-founded the Artificial Intelligence Laboratory (AI Lab) on the other side of the country, at the Massachusetts Institute of Technology. His researchers were among the first to confront the problem of making computers see.

Old-time computer hackers like to tell the story, perhaps apocryphal, of a meeting between these two great minds. Minsky proudly proclaimed, “We're going to make machines intelligent! We're going to make them walk and talk! We're going to make them conscious!” Engelbart shot back, “You're going to do that for computers? Well, what are you going to do for people?”

Engelbart laid out his ideas in a manifesto called “Augmenting Human Intellect,” which defined a field he called Intelligence Amplification, or IA. Its goal was subtly different from that of AI. Minsky aimed to make machines smarter; Engelbart wanted machines that made people smarter.

My laboratory's research on machine learning belonged to the domain of AI, while the software program of Fiala and Harris was a direct descendant of Engelbart's ideas. It was not AI, as it was not smart enough to see boundaries by itself. Instead it amplified human intelligence, helping humans analyze electron microscopic images more efficiently. The field of IA is becoming increasingly important for science, now that it's possible to “crowdsource” tasks to large numbers of people over the Internet. The Galaxy Zoo project, for instance, invites members of the public to help astronomers classify galaxies by their appearance in telescope images.

But AI and IA are not actually in competition, because the best approach is to combine them, and that is what my laboratory is currently doing. AI should be part of any IA system. AI should take care of the easy decisions and leave the difficult ones for humans. The best way of making humans effective is to minimize the time they waste on trivial tasks. And an IA system is the perfect platform for collecting examples that can be used to improve AI by machine learning. The marriage of IA and AI leads to a system that gets smarter over time, amplifying human intelligence by a greater and greater factor.
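The division of labor described in the preceding paragraph can be sketched as a small triage loop. This is a minimal illustration under stated assumptions, not a description of any actual connectomics software: it assumes the machine reports a confidence score for each decision, and the names `Decision`, `triage`, and `record_human_answers`, as well as the 0.9 threshold, are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Decision:
    """A machine-proposed answer for one item (hypothetical structure)."""
    item_id: int
    proposed: bool      # the machine's guess, e.g. "these two fragments belong to one neuron"
    confidence: float   # assumed score in [0, 1]; higher means the machine is surer


def triage(decisions, threshold=0.9):
    """Accept confident machine answers (easy decisions); queue the rest for humans."""
    accepted = [d for d in decisions if d.confidence >= threshold]
    for_humans = [d for d in decisions if d.confidence < threshold]
    return accepted, for_humans


def record_human_answers(for_humans, human_answers, training_set):
    """Store each human judgment as a labeled example for retraining the machine."""
    for d in for_humans:
        training_set.append((d.item_id, human_answers[d.item_id]))
    return training_set


decisions = [Decision(1, True, 0.97), Decision(2, False, 0.55), Decision(3, True, 0.91)]
accepted, for_humans = triage(decisions)
training_set = record_human_answers(for_humans, {2: True}, [])
print([d.item_id for d in accepted])  # [1, 3]
print(training_set)                   # [(2, True)]
```

Humans see only item 2, the one the machine was unsure about, and their answer is kept as a new training example. If retraining on such examples makes the machine more accurate, more decisions clear the threshold over time, which is one way to picture a system that amplifies human effort by a growing factor.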

People are sometimes frightened by the prospect of AI, having seen too many science fiction movies in which machines have rendered humans obsolete. And researchers can be distracted by the promise of AI, struggling in vain to fully automate tasks that would be more efficiently accomplished by the cooperation of computers and humans. That's why we should never forget that the ultimate goal is IA, not AI. Engelbart's message still comes through loud and clear for the computational challenges of connectomics.

These advances in image analysis are exciting and encouraging, but how quickly can we expect connectomics to progress in the future? We have all experienced incredible technological progress in our own lifetimes, especially in the area of computers. The heart of a desktop computer is a silicon chip called a microprocessor. The first microprocessors, released in 1971, contained just a few thousand transistors. Since then, semiconductor companies have been locked in a race to pack more and more transistors onto a chip. The pace of progress has been breathtaking. The cost per transistor has halved every two years, or, to look at it another way, the number of transistors in a microprocessor of fixed cost has doubled every two years.

Sustained, regular doubling is an example of a type of growth called “exponential,” after the mathematical function that behaves in this way. Exponential growth in the complexity of computer chips is known as Moore's Law, because it was foreseen by Gordon Moore in a 1965 article in Electronics magazine. This was three years before he helped found Intel, now the world's largest manufacturer of microprocessors.
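To see what “doubling every two years” implies, here is an idealized calculation. It assumes a starting count of 2,000 transistors as a stand-in for “a few thousand” and a perfectly steady two-year doubling period; real chips follow this curve only approximately, and the function name `exponential_count` is purely illustrative.

```python
def exponential_count(start, years, doubling_period=2.0):
    """Idealized count after `years` of steady doubling every `doubling_period` years."""
    return start * 2 ** (years / doubling_period)


# Assumed starting point: 2,000 transistors, standing in for "a few thousand."
for years in (0, 10, 20, 40):
    print(years, round(exponential_count(2_000, years)))
# prints four lines: 0 2000, 10 64000, 20 2048000, 40 2097152000
```

Twenty years of doubling multiply the count by about a thousand (2^10 = 1,024), and forty years by about a million (2^20 = 1,048,576), which is why steady doubling feels so different from ordinary, incremental improvement.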

The exponential rate of progress makes the computer business unlike almost any other. Many years after his prediction was confirmed, Moore quipped, “If the automobile industry advanced as rapidly as the semiconductor industry, a Rolls Royce would get half a million miles per gallon, and it would be cheaper to throw it away than to park it.” We are persuaded to throw our computers away every few years and buy new ones. This is usually not because the old computers are broken, but because they have been rendered obsolete.

Interestingly, genomics has also progressed at an exponential rate, more like semiconductors than automobiles. In fact, genomics has bounded ahead even faster than computers. The cost per letter of the DNA sequence has halved even faster than the cost per transistor.

Will connectomics be like genomics, with exponential progress? In the long run, it's arguable that computational power will be the primary constraint on finding connectomes. After all, the image analysis took up far more time than the image acquisition in the C. elegans project. In other words, connectomics will ride on the back of the computer industry. If Moore's Law continues to hold, then connectomics will experience exponential growth, but no one knows for sure whether that will happen. On the one hand, growth in the number of transistors in a single microprocessor has started to falter, a sign that Moore's Law could break down soon. On the other, growth might be maintained, or even accelerated, by the introduction of new computing architectures or nanoelectronics.

If connectomics experiences sustained exponential progress, then finding entire human connectomes will become easy well before the end of the twenty-first century. At present, my colleagues and I are preoccupied with surmounting the technical barriers to seeing connectomes. But what happens when we succeed? What will we do with them? In the next few chapters I'll explore some of the exciting possibilities, which include creating better maps of the brain, uncovering the secrets of memory, zeroing in on the fundamental causes of brain disorders, and even using connectomes to find new ways of treating them.

One day when I was a boy, my father brought home a globe. I ran my fingers over the raised relief and felt the bumpiness of the Himalayas. I clicked the rocker switch on the cord and lay in bed gazing at the globe's luminous roundness in my darkened room. Later on, I was fascinated by a large folio book, my father's atlas of the world. I used to smell the leathery cover and leaf through the pages looking at the exotic names of faraway countries and oceans. My schoolteachers taught me and my classmates about the Mercator projection, and we giggled at the grotesque enlargement of Greenland with the same perverse enjoyment that came from a funhouse mirror or a newspaper cartoon on a piece of Silly Putty.

Today maps are practical items for me, not magical objects. As my childhood memories grow faint, I wonder whether my fascination with maps helped me cope with a fear of the world's vastness. Back then, I never ventured beyond the streets of my neighborhood without my parents; the city beyond seemed frightening. Placing the entire world on a sphere or enclosing it within the pages of a book made it seem finite and harmless.

In ancient times, fear of the world's vastness was not limited to children. When medieval cartographers drew maps, they did not leave unknown areas blank; they filled them with sea serpents, other imaginary monsters, and the words “Here be dragons.” As the centuries passed, explorers crossed every ocean and climbed every mountain, gradually filling in the blank areas of the map with real lands. Today we marvel at photos of the Earth's beauty taken from outer space, and our communications networks have created a global village. The world has grown small.

Unlike the world, the brain seemed compact at first, fitting nicely inside the confines of a skull. But the more we know about the brain with its billions of neurons, the more it seems intimidatingly vast. The first neuroscientists carved the brain into regions and gave each one a name or number, as Brodmann did with his map of the cortex. Finding this approach too coarse, Cajal pioneered another one, coping with the immensity of the brain forest by classifying its trees like a botanist. Cajal was a “neuron collector.”

We learned earlier why it's important to carve the brain into regions. Neurologists interpret the symptoms of brain damage using Brodmann's map. Every cortical area is associated with a specific mental ability, such as understanding or speaking words, and damage to that area impairs that particular ability. But why is it important to carve the brain more finely, into neuron types? For one thing, neurologists can use such information. It's less relevant for stroke or other injuries, which tend to affect all neurons at a particular location in the brain. Some diseases of the brain, however, affect certain types of neurons while sparing others.

Parkinson's disease (PD) starts by impairing the control of movement. Most noticeably, there is a resting tremor, or involuntary quivering of the limbs when the patient is not attempting to move them. As the disease progresses, it can cause intellectual and emotional problems, and even dementia. The cases of Michael J. Fox and Muhammad Ali have raised public awareness of the disease.

Like Alzheimer's disease, PD involves the degeneration and death of neurons. In the earlier stages, the damage is confined to a region known as the basal ganglia. This hodgepodge of structures is buried deep inside the cerebrum, and is also involved in Huntington's disease, Tourette syndrome, and obsessive-compulsive disorder. The region's role in so many diseases suggests that it is very important, even if much smaller than the surrounding cortex.

One part of the basal ganglia, the substantia nigra pars compacta, bears the brunt of degeneration in PD. We can zero in even further to a particular neuron type within this region, which secretes the neurotransmitter dopamine, and is progressively destroyed in PD. There is currently no cure, but the symptoms are managed by therapies that compensate for the reduction of dopamine.

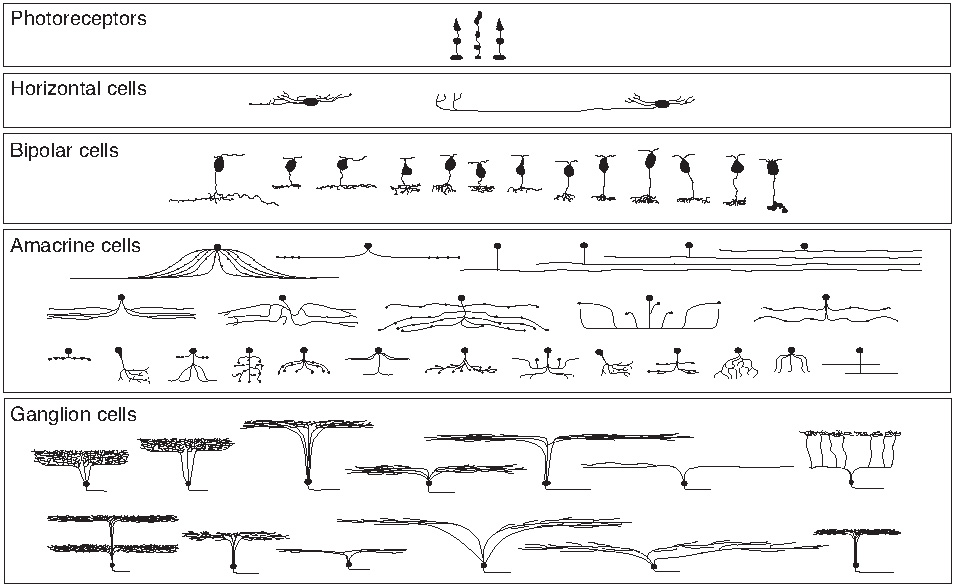

Neuron types are important not only in disease but also in the normal operation of the nervous system. For example, the five broad classes of neurons in the retina (photoreceptors, horizontal cells, bipolar cells, amacrine cells, and ganglion cells) specialize in different functions. Photoreceptors sense light striking the retina and convert it into neural signals. The output of the retina leaves through the axons of ganglion cells, which travel in the optic nerve to the brain.

These five broad classes have been subdivided even further into over fifty types, as shown in Figure 38. Each strip represents a class, and contains the neuron types that belong to the class. The functions of retinal neurons are much simpler than the function of the Jennifer Aniston neuron. For example, some spike in response to a light spot on a dark background, or vice versa. Each neuron type studied so far has been shown to possess a distinct function, and the effort to assign functions to all types is ongoing.

Figure 38. Types of neurons in the retina

As I'll explain in this chapter, it's not as easy as it sounds to divide the brain into regions and neuron types. Our current methods date back over a century to Brodmann and Cajal, and they are looking increasingly outmoded. One major contribution of connectomics will be new and improved methods for carving up the brain. This in turn will help us understand the pathologies that so often plague it, as well as its normal operation.