The Numbers Behind NUMB3RS

Authors: Keith Devlin

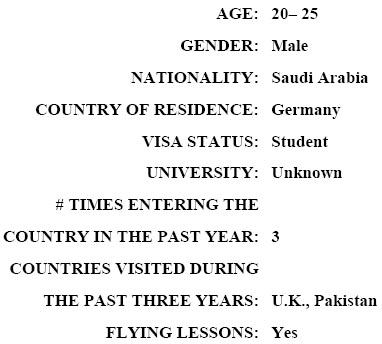

For instance, although the actual systems used are not made public, it seems highly likely that an individual trying to enter the country would be held for further questioning if he or she had the following characteristics:

The system would probably simply suggest that the agent investigate further based on the first seven features, but the final two would likely trigger more substantive action. (One can imagine the final feature being activated only when several of the earlier ones raise the likelihood that the individual is a terrorist.)

Of course, the above example is grossly simplified to illustrate the general idea. The power of machine learning is that it can build up fairly complex profiles that would escape a human agent. Moreover, using Bayesian methods (see Chapter 6) for updating probabilities, the system can attach a probability to each conclusion. In the above example, the profile might yield the advice:

Though our example is fictitious, machine learning systems are in daily use by border guards and law enforcement agencies when screening people entering the country for possible drug-smuggling or terrorist activities. Detecting financial fraud is another area where law enforcement agencies make use of machine learning. And the business world also makes extensive use of such systems, in marketing, customer profiling, quality control, supply chain management, distribution, and so forth, while major political parties use them to determine where and how to target their campaigns.

In some applications, machine learning systems operate like the ones described above; others make use of neural networks, which we consider next.

NEURAL NETWORKS

On June 12, 2006, *The Washington Post* carried a full-page advertisement from Visa Corporation announcing that its record of credit card fraud was near its all-time low, and citing neural networks as the leading security measure the company had taken to stop credit card fraud. Visa's success came at the end of a long period of development of neural-network-based fraud prevention measures that began in 1993, when the company was the first to experiment with the use of such systems to reduce the incidence of card fraud. The idea was that, by analyzing typical card usage patterns, a neural-network-based risk management tool would notify banks immediately when any suspicious activity occurred, so they could inform their customers if a card appeared to have been used by someone other than the legitimate cardholder.

Credit card fraud detection is just one of many applications of data mining that involve the use of a neural network. What exactly are neural networks and how do they work?

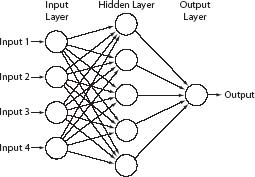

A neural network is a particular kind of computer program, originally developed to try to mimic the way the human brain works. It is essentially a computer simulation of a complex circuit through which electric current flows. (See Figure 2.)

Neural networks are particularly suited to recognizing patterns, and were introduced into the marketplace in the 1980s for tasks such as classifying loan applications as good or bad risks, distinguishing legal from fraudulent financial transactions, identifying possible credit card theft, recognizing signatures, and identifying purchasing patterns in branch supermarkets. Law enforcement agencies started using neural networks soon afterward, applying them to such tasks as recognizing a “forensic fingerprint” that indicates that different cases of arson are likely the work of a single individual, or recognizing activity and behavioral patterns that indicate possible smuggling or terrorist intent.

Figure 2. A simple neural network with a single hidden layer and one output node.

To go into a little more detail about the technology, a neural network consists of many (typically several hundred or several thousand) nodes arranged in two or more “parallel layers,” with each node in one layer connected to one or more nodes in the adjacent layer. One end layer is the input layer; the other end layer is the output layer. All the other layers are called intermediate layers or hidden layers. (The brain-modeling idea is that the nodes simulate neurons and the connections simulate dendrites.) Figure 2 gives the general idea, although a network with so few nodes would be of little practical use.

The network commences an operation cycle when a set of input signals is fed into the nodes of the input layer. Whenever a node anywhere in the network receives an input signal, it sends output signals to all those nodes on the next layer to which it is connected. The cycle completes when signals have propagated through the entire network and an output signal (or signals) emerges from the output node (or from the multiple nodes in the output layer, if that is how the network is structured). Each input signal and each signal that emerges from a node has a certain “signal strength” (expressed by a number between 1 and 100). Each inter-node connection has a “transmission strength” (also a number), and the strength of the signal passing along a connection is a function of the signal at the start node and the transmission strength of the connection. Every time a signal is transmitted along a connection, the strength of that connection (also often called its “weight”) is increased or decreased in proportion to the signal strength, according to a preset formula. (This corresponds to the way that, in a living brain, life experiences result in changes to the strengths of the synaptic connections between neurons.) Thus, the overall connection-strength configuration of the network changes with each operational cycle.
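The operation cycle just described can be sketched in a few lines of Python. This is a toy under stated assumptions, not how any production system works: the layer sizes (3, 4, 1), the random starting weights, the way a node combines what it receives (averaging signal times transmission strength), and the weight-change rule are all illustrative choices. In particular, the rule used here is a hypothetical stand-in for the unspecified “preset formula”: it strengthens a connection when the signal on it is above the midpoint of 50 and weakens it when the signal is below, in proportion to the difference.

```python
import copy
import random

LAYER_SIZES = [3, 4, 1]  # assumed: 3 input nodes, 4 hidden nodes, 1 output node
RATE = 0.01              # assumed step size for the weight-change rule

def clamp(x):
    """Keep a signal strength inside the 1-100 range."""
    return max(1.0, min(100.0, x))

def make_network(sizes, seed=0):
    """weights[k][i][j] = transmission strength from node i of layer k to node j of layer k+1."""
    rng = random.Random(seed)
    return [[[rng.uniform(0.0, 1.0) for _ in range(n_out)] for _ in range(n_in)]
            for n_in, n_out in zip(sizes, sizes[1:])]

def run_cycle(weights, inputs):
    """One operation cycle: propagate signals layer by layer, adjusting every connection used."""
    signals = [clamp(s) for s in inputs]
    for layer in weights:
        totals = [0.0] * len(layer[0])
        for i, s in enumerate(signals):
            for j in range(len(totals)):
                # Strength passed along = sending node's signal x connection's transmission strength.
                totals[j] += s * layer[i][j]
                # Hypothetical stand-in for the "preset formula": strengthen the connection when
                # the signal is above 50, weaken it when below, in proportion to the difference.
                layer[i][j] += RATE * (s - 50.0) / 50.0
        # Each receiving node averages what arrives, then clamps to 1-100.
        signals = [clamp(t / len(signals)) for t in totals]
    return signals

net = make_network(LAYER_SIZES)
before = copy.deepcopy(net)
out = run_cycle(net, [80, 20, 55])
print(out)            # a single output signal between 1 and 100
print(net != before)  # True: the connection strengths changed during the cycle
```

Feeding the same inputs in a second time would generally give a slightly different output, because the first cycle has already altered the weights.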

To use the network to carry out a particular computational task, the input(s) to the computation must be encoded as a set of input signals to the input layer, and the corresponding output signal(s) interpreted as the result of the computation. The behavior of the network (what it does to the inputs) depends on the weights of the various network connections. Essentially, the patterns of those weights constitute the network's “memory.” The ability of a neural network to perform a particular task at any moment depends upon the architecture of the network and its current memory.
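To make the encoding and interpretation steps concrete, here is a hypothetical sketch. The transaction fields (`amount`, `hour`, `abroad`), their scaling onto the 1-to-100 signal range, the one-layer stand-in for a network, and the decision threshold of 50 are all assumptions made for illustration. The two weight settings play the role of two different “memories”: the same encoded input leads to different conclusions.

```python
def scale(value, lo, hi):
    """Map value from [lo, hi] onto the 1-100 signal range, clipping at the ends."""
    value = max(lo, min(hi, value))
    return 1.0 + 99.0 * (value - lo) / (hi - lo)

def encode(tx):
    """Hypothetical encoding of a transaction record as input-layer signals."""
    return [scale(tx["amount"], 0, 10_000),   # dollars, capped at 10,000 (assumed)
            scale(tx["hour"], 0, 23),         # hour of day
            100.0 if tx["abroad"] else 1.0]   # yes/no feature as a strong or weak signal

def respond(weights, signals):
    """One-layer stand-in network: weighted average of the input signals, kept in 1-100."""
    total = sum(w * s for w, s in zip(weights, signals))
    return max(1.0, min(100.0, total / len(signals)))

def interpret(output, threshold=50.0):
    """Read the output signal as a result; the threshold is an assumption."""
    return "flag for review" if output >= threshold else "no action"

tx = {"amount": 9_000, "hour": 3, "abroad": True}
signals = encode(tx)
memory_a = [1.0, 0.2, 1.0]   # two made-up weight settings ("memories")
memory_b = [0.2, 0.2, 0.2]
print(interpret(respond(memory_a, signals)))  # flag for review
print(interpret(respond(memory_b, signals)))  # no action
```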

TRAINING A NEURAL NETWORK

Neural networks are not programmed in the usual sense of programming a computer. In the majority of cases, particularly neural networks used for classification, the application of a network must be preceded by a process of “training” to set the various connection weights.

By way of an example, suppose a bank wanted to train a neural network to recognize unauthorized credit card use. The bank first presents the network with a large number of previous credit card transactions (recorded in terms of the user's home address, credit history, spending limit, expenditure, date, amount, location, etc.), each known to be either authentic or fraudulent. For each one, the network has to make a prediction concerning the transaction's authenticity. If the connection weights in the network are initially set randomly or in some neutral way, then some of its predictions will be correct and others wrong. During the training process, the network is “rewarded” each time its prediction is correct and “punished” each time it is wrong. (That is to say, the network is constructed so that a “correct grade,” i.e., positive feedback on its prediction, causes it to continue adjusting the connection weights as before, whereas a “wrong grade” causes it to adjust them differently.) After many cycles (thousands or more), the weights will have adjusted so that the decision made by the network is correct on the majority of occasions (generally the *vast* majority). What happens is that, over the course of many training cycles, the connection weights in the network come to correspond to the profiles of legitimate and fraudulent credit card use, whatever those profiles may be (and, of great significance, without the programmer having to know them).

Some skill is required to turn these general ideas into a workable system, and many different network architectures have been developed to build systems that are suited to particular classification tasks.

After a successful training process, it can be impossible for a human operator to figure out just what patterns of credit card transaction features (to continue with our current example) the network has learned to identify as indicative of fraud. All that the operator can know is that the system is accurate to a certain degree of error, giving a correct prediction perhaps 95 percent of the time.

A similar phenomenon can occur with highly trained, highly experienced human experts in a particular domain, such as physicians. An experienced doctor will sometimes examine a patient and say with some certainty what she believes is wrong, and yet be unable to explain exactly which symptoms led her to that conclusion.

Much of the value of neural networks comes from the fact that they can acquire the ability to discern feature-patterns that no human could uncover. To take one example, typically just one credit card transaction among every 50,000 is fraudulent. No human could monitor that amount of activity to identify the frauds.

On occasion, however, the very opacity of neural networks (the fact that they can uncover patterns that humans would not normally recognize as such) can lead to unanticipated results. According to one oft-repeated story, some years ago the U.S. Army trained a neural network to recognize tanks despite their being painted in camouflage colors to blend in with the background. The system was trained by showing it many photographs of scenes, some with tanks in them, others without. After many training cycles, the network began to display an extremely accurate tank recognition capacity. Finally, the day came to test the system in the field, with real tanks in real locations. To everyone's surprise, it performed terribly, seeming quite unable to distinguish between a scene with tanks and one without. The red-faced system developers retreated to their research laboratory and struggled to find out what had gone wrong. Eventually, someone realized what the problem was. The photos used to train the system had been taken on two separate days: the photos with tanks in them on a sunny day, the tank-free photos on a cloudy day. The neural network had certainly learned the difference between the two sets of photos, but the pattern it had discerned had nothing to do with the presence or absence of tanks; rather, the system had learned to distinguish a sunny-day scene from a cloudy-day scene. The moral of this tale is, of course, that you have to be careful when interpreting exactly which pattern a neural network has identified. That caution aside, neural networks have proved themselves extremely useful in industry and commerce, and in law enforcement and defense.
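The moral of the tank story can be reproduced with a toy classifier. In this hypothetical sketch each photo is reduced to two made-up numbers, a brightness level and a “shape score,” and we assume the camouflage hides the tanks so well that the shape score carries no information at all. Training uses the perceptron rule (change the weights only after a wrong answer) as a stand-in for whatever network the story describes. Because every tank photo in the training set is bright and every empty scene is dark, brightness is the only usable pattern, so a classifier that scores perfectly on its training photos should do little better than chance once weather and tanks no longer go together.

```python
import random

def photo(rng, sunny, tank):
    """Hypothetical two-number summary of a photo: (brightness, shape_score).
    Camouflage is assumed to hide the tank completely, so shape_score is pure noise."""
    brightness = rng.uniform(0.7, 1.0) if sunny else rng.uniform(0.0, 0.3)
    return (brightness, rng.uniform(0.0, 1.0)), int(tank)

def predict(w, b, x):
    """1 = 'tank present', 0 = 'no tank'."""
    return 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0

def train(data, max_epochs=1000):
    """Perceptron rule: adjust the weights only after a wrong answer."""
    w, b = [0.0, 0.0], 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for x, label in data:
            error = label - predict(w, b, x)
            if error:
                mistakes += 1
                w = [w[0] + error * x[0], w[1] + error * x[1]]
                b += error
        if mistakes == 0:
            break
    return w, b

def accuracy(w, b, data):
    return sum(predict(w, b, x) == label for x, label in data) / len(data)

rng = random.Random(7)
# Training photos: every tank scene shot on the sunny day, every empty scene on the cloudy day.
train_set = ([photo(rng, sunny=True, tank=True) for _ in range(100)] +
             [photo(rng, sunny=False, tank=False) for _ in range(100)])
# Field test: all four combinations of weather and tanks, 50 photos each.
field_set = [photo(rng, sunny=s, tank=t)
             for s in (True, False) for t in (True, False) for _ in range(50)]

w, b = train(train_set)
print("training accuracy:", accuracy(w, b, train_set))
print("field accuracy:", accuracy(w, b, field_set))
print("weights (brightness, shape):", w)
```

Inspecting the printed weights is the toy equivalent of the developers' detective work: the brightness weight is what separates the training photos.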

Various network architectures have been developed to speed up the initial training process that must precede putting a neural network to work, but in most cases training still takes some time to complete. The principal exceptions are Kohonen networks (named after Dr. Teuvo Kohonen, who developed the idea), also known as Self-Organizing Maps (SOMs), which are used to identify clusters and which we mentioned in Chapter 3 in connection with clustering crimes into groups that are likely to be the work of one individual or gang.
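For contrast with the trained networks described earlier, here is a minimal sketch of the Kohonen update rule. Nothing in it is labeled: each unit on a one-dimensional “map” holds a prototype vector, the unit closest to each input (the winner) moves toward that input, and its neighbors on the map move too, but by less. The learning-rate and neighborhood schedules, the initialization (units spread evenly between the data's low and high corners), and the three groups of example points are illustrative assumptions, not details from the text; the points are fabricated stand-ins for, say, feature vectors describing crimes.

```python
import math
import random

def dist2(a, b):
    """Squared Euclidean distance between two vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))

def best_unit(units, p):
    """Index of the unit whose prototype is closest to p (the 'winner')."""
    return min(range(len(units)), key=lambda k: dist2(units[k], p))

def train_som(points, n_units=3, epochs=30, seed=0):
    """Minimal 1-D Kohonen map; no labels are used anywhere."""
    rng = random.Random(seed)
    dim = len(points[0])
    lo = [min(p[d] for p in points) for d in range(dim)]
    hi = [max(p[d] for p in points) for d in range(dim)]
    # Assumed initialization: units spread evenly between the data's low and high corners.
    units = [[lo[d] + (hi[d] - lo[d]) * k / (n_units - 1) for d in range(dim)]
             for k in range(n_units)]
    total, step = epochs * len(points), 0
    for _ in range(epochs):
        order = points[:]
        rng.shuffle(order)
        for p in order:
            frac = step / total
            rate = 0.3 * (1.0 - frac)        # learning rate decays toward 0
            radius = max(0.3, 1.0 - frac)    # neighborhood on the map shrinks
            win = best_unit(units, p)
            for k in range(n_units):
                # Map neighbors of the winner are pulled toward p too, more weakly with distance.
                h = math.exp(-((k - win) ** 2) / (2 * radius ** 2))
                units[k] = [u + rate * h * (x - u) for u, x in zip(units[k], p)]
            step += 1
    return units

# Fabricated example data: three tight groups of 2-D feature vectors.
rng = random.Random(42)
centres = [(0.1, 0.1), (0.5, 0.5), (0.9, 0.9)]
groups = [[(cx + rng.uniform(-0.03, 0.03), cy + rng.uniform(-0.03, 0.03)) for _ in range(30)]
          for cx, cy in centres]
points = [p for g in groups for p in g]

units = train_som(points)
for centre, g in zip(centres, groups):
    print(centre, {best_unit(units, p) for p in g})
```

If the map has organized as intended, each group is won by a single unit, and different groups by different units, even though the algorithm was never told which group any point belongs to.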