The Numbers Behind NUMB3RS


Authors: Keith Devlin

Cobb and Gehlbach did not disagree on any of the statistical analysis. (In fact, they ended up writing a joint article about the case.) Rather, their roles were different, and they were addressing different issues. Gehlbach's task was to use statistics to determine if there were reasonable grounds to suspect Gilbert of multiple murder. More specifically, he carried out an analysis that showed that the increased numbers of deaths at the hospital during the shifts when Gilbert was on duty could not have arisen due to chance variation. That was sufficient to cast suspicion on Gilbert as the cause of the increase, but not at all enough to prove that she did cause the increase. What Cobb argued was that the establishment of a statistical relationship does not explain the cause of that relationship. The judge in the case accepted this argument, since the purpose of the trial was not to decide if there were grounds to make Gilbert a suspect; the grand jury and the state attorney's office had done that. Rather, the job before the court was to determine whether or not Gilbert caused the deaths in question. His reason for excluding the statistical evidence was that, as experiences in previous court cases had demonstrated, jurors not well versed in statistical reasoning (and that would be almost all jurors) typically have great difficulty appreciating why odds of 1 in 100 million against the suspicious deaths occurring by chance do not imply that the odds that Gilbert did not kill the patients are likewise 1 in 100 million. The original odds could be caused by something else.

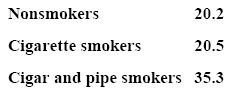

Cobb illustrated the distinction by means of a famous example from the long struggle physicians and scientists had in overcoming the powerful tobacco lobby to convince governments and the public that cigarette smoking causes lung cancer. Table 2 shows the mortality rates for three categories of people: nonsmokers, cigarette smokers, and cigar and pipe smokers.

Table 2. Mortality rates per 1,000 people per year.

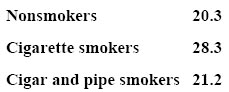

At first glance, the figures in Table 2 seem to indicate that cigarette smoking is not dangerous but pipe and cigar smoking are. However, this is not the case. There is a crucial variable lurking behind the data that the numbers themselves do not indicate: age. The average age of the nonsmokers was 54.9, the average age of the cigarette smokers was 50.5, and the average age of the cigar and pipe smokers was 65.9. Using statistical techniques to make allowance for the age differences, statisticians were able to adjust the figures to produce Table 3.

Table 3. Mortality rates per 1,000 people per year, adjusted for age.

Now a very different pattern emerges, indicating that cigarette smoking is highly dangerous.
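The text does not say which adjustment technique was used. One common choice is direct standardization: compute each group's death rate within age bands, then average those rates using the same reference age mix for every group. The sketch below uses invented rates and age mixes (they are not the figures behind Tables 2 and 3), and every name in it is illustrative.

```python
# Direct age standardization, sketched with made-up numbers.

# Hypothetical deaths per 1,000 people per year within each age band.
rates = {
    "nonsmokers":        {"40-54": 5.0, "55-69": 15.0, "70+": 40.0},
    "cigarette smokers": {"40-54": 8.0, "55-69": 24.0, "70+": 64.0},
}

# Hypothetical share of each group that falls in each age band.
age_mix = {
    "nonsmokers":        {"40-54": 0.40, "55-69": 0.40, "70+": 0.20},
    "cigarette smokers": {"40-54": 0.70, "55-69": 0.25, "70+": 0.05},
}

# Hypothetical reference age mix applied to every group.
standard_mix = {"40-54": 0.50, "55-69": 0.35, "70+": 0.15}


def crude_rate(group):
    """Overall rate using the group's own age mix (what an unadjusted table shows)."""
    return sum(rates[group][band] * age_mix[group][band] for band in rates[group])


def adjusted_rate(group):
    """Overall rate the group would have if it had the reference age mix."""
    return sum(rates[group][band] * standard_mix[band] for band in rates[group])


for group in rates:
    print(f"{group}: crude {crude_rate(group):.2f}, adjusted {adjusted_rate(group):.2f}")
```

In this toy data the smokers are younger on average, so their crude rate looks lower than the nonsmokers' even though their rate is higher in every age band; the adjusted rates put the two groups on the same footing.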

Whenever a calculation of probabilities is made based on observational data, the most that can generally be concluded is that there is a correlation between two or more factors. That can be enough to spur further investigation, but on its own it does not establish causation. There is always the possibility of a hidden variable that lies behind the correlation.

When a study is made of, say, the effectiveness or safety of a new drug or medical procedure, statisticians handle the problem of hidden parameters not by relying on observational data but by conducting a randomized, double-blind trial. In such a study, the target population is divided into two groups by an entirely random procedure, with the group allocation unknown to both the experimental subjects and the caregivers administering the drug or treatment (hence the term “double-blind”). One group is given the new drug or treatment, the other is given a placebo or dummy treatment. With such an experiment, the random allocation into groups overrides the possible effect of hidden parameters, so that in this case a low probability that a positive result is simply chance variation can indeed be taken as conclusive evidence that the drug or treatment is what caused the result.
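A small simulation can make the effect of random allocation concrete. The sketch below assumes a hypothetical population in which age is the hidden variable, splits it at random into two equal groups, and compares the average age of each. The population, its size, and all names are assumptions for illustration only.

```python
import random
from statistics import mean

rng = random.Random(0)  # fixed seed so the sketch is repeatable

# Hypothetical population: age is the "hidden" variable nobody controls for.
ages = [rng.uniform(20, 80) for _ in range(10_000)]

# Random allocation: shuffle the subject indices and split them in half.
subjects = list(range(len(ages)))
rng.shuffle(subjects)
half = len(subjects) // 2
treatment, control = subjects[:half], subjects[half:]

mean_age_treatment = mean(ages[i] for i in treatment)
mean_age_control = mean(ages[i] for i in control)
age_gap = abs(mean_age_treatment - mean_age_control)

print(f"treatment {mean_age_treatment:.1f}, control {mean_age_control:.1f}, gap {age_gap:.2f}")
```

Because neither the subjects nor the experimenters choose the groups, the two groups end up with nearly the same age profile, and the same holds for any other variable, measured or not.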

In trying to solve a crime, there is of course no choice but to work with the data available. Hence, use of the hypothesis-testing procedure, as in the Gilbert case, can be highly effective in the identification of a suspect, but other means are generally required to secure a conviction.

In United States v. Kristen Gilbert, the jury was not presented with Gehlbach's statistical analysis, but they did find sufficient evidence to convict her on three counts of first-degree murder, one count of second-degree murder, and two counts of attempted murder. Although the prosecution asked for the death sentence, the jury split 8-4 on that issue, and accordingly Gilbert was sentenced to life imprisonment with no possibility of parole.

POLICING THE POLICE

Another use of basic statistical techniques in law enforcement concerns the important matter of ensuring that the police themselves obey the law.

Law enforcement officers are given a considerable amount of power over their fellow citizens, and one of the duties of society is to make certain that they do not abuse that power. In particular, police officers are supposed to treat everyone equally and fairly, free of any bias based on gender, race, ethnicity, economic status, age, dress, or religion.

But determining bias is a tricky business and, as we saw in our previous discussion of cigarette smoking, a superficial glance at the statistics can sometimes lead to a completely false conclusion. This is illustrated in a particularly dramatic fashion by the following example, which, while not related to police activity, clearly indicates the need to approach statistics with some mathematical sophistication.

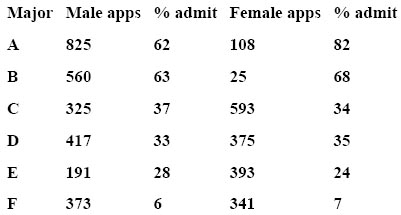

In the 1970s, somebody noticed that 44 percent of male applicants to the graduate school of the University of California at Berkeley were accepted, but only 35 percent of female applicants were accepted. On the face of it, this looked like a clear case of gender discrimination, and, not surprisingly (particularly at Berkeley, long acknowledged as home to many leading advocates for gender equality), there was a lawsuit over gender bias in admissions policies.

It turns out that Berkeley applicants do not apply to the graduate school, but to individual programs of study (such as engineering, physics, or English), so if there is any admissions bias, it will occur within one or more particular programs. Table 4 gives the admission data program by program:

Table 4. Admission figures from the University of California at Berkeley on a program-by-program basis.

If you look at each program individually, however, there doesn't appear to be an advantage in admission for male applicants. Indeed, the percentage of female applicants admitted to heavily subscribed program A is considerably higher than for males, and in all other programs the percentages are fairly close. So how can there appear to be an advantage for male applicants overall?

To answer this question, you need to look at what programs males and females applied to. Males applied heavily to programs A and B, females applied primarily to programs C, D, E, and F. The programs that females applied to were more difficult to get into than those for males (the percentages admitted are low for both genders), and this is why it appears that males had an admission advantage when looking at the aggregate data.

There was indeed a gender factor at work here, but it had nothing to do with the university's admissions procedures. Rather, it was one of self-selection by the applying students, where female applicants avoided programs A and B.

The Berkeley case was an example of a phenomenon known as Simpson's paradox, named for E. H. Simpson, who studied this curious phenomenon in a famous 1951 paper.
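The reversal is easy to reproduce with a toy example. The sketch below uses two invented programs with made-up applicant counts (not the Berkeley figures from Table 4): women are admitted at a higher rate in each program, yet at a lower rate overall, because most of them apply to the program that is harder to get into.

```python
# Simpson's paradox with hypothetical data: (applicants, admitted) per program and gender.
applications = {
    "easy program": {"men": (800, 480), "women": (100, 70)},
    "hard program": {"men": (200, 40), "women": (900, 225)},
}


def rate(applied, admitted):
    """Fraction of applicants admitted."""
    return admitted / applied


def overall_rate(gender):
    """Admission rate for one gender with all programs pooled together."""
    applied = sum(program[gender][0] for program in applications.values())
    admitted = sum(program[gender][1] for program in applications.values())
    return rate(applied, admitted)


for name, program in applications.items():
    print(name, {gender: f"{rate(*program[gender]):.0%}" for gender in program})
print("overall", {gender: f"{overall_rate(gender):.1%}" for gender in ("men", "women")})
```

The pooled figures weight each program by how many people of each gender applied to it, which is why the aggregate can point the opposite way from every individual program.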


HOW DO YOU DETERMINE BIAS?

With the above cautionary example in mind, what should we make of the study carried out in Oakland, California, in 2003 (by the RAND Corporation, at the request of the Oakland Police Department's Racial Profiling Task Force), to determine if there was systematic racial bias in the way police stopped motorists?

The RAND researchers analyzed 7,607 vehicle stops recorded by Oakland police officers between June and December 2003, using various statistical tools to examine a number of variables to uncover any evidence that suggested racial profiling. One figure they found was that blacks were involved in 56 percent of all traffic stops studied, although they make up just 35 percent of Oakland's residential population. Does this finding indicate racial profiling? Well, it might, but as soon as you look more closely at what other factors could be reflected in those numbers, the issue is by no means clear cut.

For instance, like many inner cities, Oakland has some areas with much higher crime rates than others, and the police patrol those higher crime areas at a much greater rate than they do areas having less crime. As a result, they make more traffic stops in those areas. Since the higher crime areas typically have greater concentrations of minority groups, the higher rate of traffic stops in those areas manifests itself as a higher rate of traffic stops of minority drivers.

To overcome these uncertainties, the RAND researchers devised a particularly ingenious way to look for possible racial bias. If racial profiling was occurring, they reasoned, stops of minority drivers would be higher when the officers could determine the driver's race prior to making the stop. Therefore, they compared the stops made during a period just before nightfall with those made after dark, when the officers would be less likely to be able to determine the driver's race. The figures showed that 50 percent of drivers stopped during the daylight period were black, compared with 54 percent when it was dark. Based on that finding, there does not appear to be systematic racial bias in traffic stops.

But the researchers dug a little further, and looked at the officers' own reports as to whether they could determine the driver's race prior to making the stop. When officers reported knowing the race in advance of the stop, 66 percent of drivers stopped were black, compared with only 44 percent when the police reported not knowing the driver's race in advance. This is a fairly strong indicator of racial bias.


3

Data Mining

Finding Meaningful Patterns in Masses of Information

BRUTUS

Charlie Eppes is sitting in front of a bank of computers and television monitors. He is testing a computer program he is developing to help police monitor large crowds, looking for unusual behavior that could indicate a pending criminal or terrorist act. His idea is to use standard mathematical equations that describe the flow of fluids (in rivers, lakes, oceans, tanks, pipes, even blood vessels).


He is trying out the new system at a fund-raising reception for one of the California state senators. Overhead cameras monitor the diners as they move around the room, and Charlie's computer program analyzes the “flow” of the people. Suddenly the test takes on an unexpected aspect. The FBI receives a telephone warning that a gunman is in the room, intending to kill the senator.

The software works, and Charlie is able to identify the gunman, but Don and his team are not able to get to the killer before he has shot the senator and then turned the gun on himself.

The dead assassin turns out to be a Vietnamese immigrant, a former Vietcong member, who, despite having been in prison in California, somehow managed to obtain U.S. citizenship and be the recipient of a regular pension from the U.S. Army. He had also taken the illegal drug speed on the evening of the assassination. When Don makes some enquiries to find out just what is going on, he is visited by a CIA agent who asks for help in trying to prevent too much information about the case leaking out. Apparently the dead killer had been part of a covert CIA behavior modification project carried out in California prisons during the 1960s to turn inmates into trained assassins who, when activated, would carry out their assigned task before killing themselves. (Sadly, this idea is no less fanciful than that of Charlie using fluid flow equations to study crowd behavior.)

But why had this particular individual suddenly become active and murdered the state senator?

The picture becomes much clearer when a second murder occurs. The victim this time is a prominent psychiatrist, the killer a Cuban immigrant. The killer had also spent time in a California prison, and he too was the recipient of regular Army pension checks. But on this occasion, when the assassin tries to shoot himself after killing the victim, the gun fails to go off and he has to flee the scene. A fingerprint identification from the gun soon leads to his arrest.

When Don realizes that the dead senator had been urging a repeal of the statewide ban on the use of behavior modification techniques on prison inmates, and that the dead psychiatrist had been recommending the re-adoption of such techniques to overcome criminal tendencies, he quickly concludes that someone has started to turn the conditioned assassins on the very people who were pressing for the reuse of the techniques that had produced them. But who?

Don thinks his best line of investigation is to find out who supplied the guns that the two killers had used. He knows that the weapons originated with a dealer in Nevada. Charlie is able to provide the next step, which leads to the identification of the individual behind the two assassinations. He obtains data on all gun sales involving that particular dealer and analyzes the relationships among all sales that originated there. He explains that he is employing mathematical techniques similar to those used to analyze calling patterns on the telephone network, an approach used frequently in real-life law enforcement.

This is what viewers saw in the third-season episode of NUMB3RS called “Brutus” (the code name for the fictitious CIA conditioned-assassinator project), first aired on November 24, 2006. As usual, the mathematics Charlie uses in the show is based on real life.

The method Charlie uses to track the gun distribution is generally referred to as “link analysis,” and is one among many that go under the collective heading of “data mining.” Data mining extracts useful information from the mass of data that is available, often publicly, in modern society.

FINDING MEANING IN INFORMATION

Data mining was initially developed by the retail industry to detect customer purchasing patterns. (Ever wonder why supermarkets offer customers those loyalty cards, sometimes called “club” cards, in exchange for discounts? In part it's to encourage customers to keep shopping at the same store, but low prices would do that. The significant factor for the company is that it enables them to track detailed purchase patterns that they can link to customers' home zip codes, information that they can then analyze using data-mining techniques.)

Though much of the work in data mining is done by computers, for the most part those computers do not run autonomously. Human expertise also plays a significant role, and a typical data-mining investigation will involve a constant back-and-forth interplay between human expert and machine.

Many of the computer applications used in data mining fall under the general area known as artificial intelligence, although that term can be misleading, being suggestive of computers that think and act like people. Although many people believed that was a possibility back in the 1950s when AI first began to be developed, it eventually became clear that this was not going to happen within the foreseeable future, and may well never be the case. But that realization did not prevent the development of many “automated reasoning” programs, some of which eventually found a powerful and important use in data mining, where the human expert often provides the “high-level intelligence” that guides the computer programs that do the bulk of the work. In this way, data mining provides an excellent example of the power that results when human brains team up with computers.

Among the more prominent methods and tools used in data mining are:

- Link analysis: looking for associations and other forms of connection among, say, criminals or terrorists
- Geometric clustering: a specific form of link analysis
- Software agents: small, self-contained pieces of computer code that can monitor, retrieve, analyze, and act on information
- Machine learning: algorithms that can extract profiles of criminals and graphical maps of crimes
- Neural networks: special kinds of computer programs that can predict the probability of crimes and terrorist attacks.

We'll take a brief look at each of these topics in turn.

LINK ANALYSIS

Newspapers often refer to link analysis as “connecting the dots.” It's the process of tracking connections between people, events, locations, and organizations. Those connections could be family ties, business relationships, criminal associations, financial transactions, in-person meetings, e-mail exchanges, and a host of others. Link analysis can be particularly powerful in fighting terrorism, organized crime, money laundering (“follow the money”), and telephone fraud.

Link analysis is primarily a human-expert driven process. Mathematics and technology are used to provide a human expert with powerful, flexible computer tools to uncover, examine, and track possible connections. Those tools generally allow the analyst to represent linked data as a network, displayed and examined (in whole or in part) on the computer screen, with nodes representing the individuals or organizations or locations of interest and the links between those nodes representing relationships or transactions. The tools may also allow the analyst to investigate and record details about each link, and to discover new nodes that connect to existing ones or new links between existing nodes.

For example, in an investigation into a suspected crime ring, an investigator might carry out a link analysis of telephone calls a suspect has made or received, using telephone company call-log data, looking at factors such as number called, time and duration of each call, or number called next. The investigator might then decide to proceed further along the call network, looking at calls made to or from one or more of the individuals who had had phone conversations with the initial suspect. This process can bring to the investigator's attention individuals not previously known. Some may turn out to be totally innocent, but others could prove to be criminal collaborators.
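The step of moving outward along the call network can be sketched as a breadth-first search over a graph built from call records. The call log, the names in it, and the helper functions below are all hypothetical; real investigative tools also track times, durations, and much more.

```python
from collections import defaultdict, deque

# Hypothetical call log as (caller, callee) pairs; the names are placeholders.
call_log = [
    ("suspect", "A"), ("B", "suspect"), ("A", "C"),
    ("C", "D"), ("E", "F"),
]


def build_call_graph(calls):
    """Treat each call as an undirected link between two people."""
    graph = defaultdict(set)
    for caller, callee in calls:
        graph[caller].add(callee)
        graph[callee].add(caller)
    return graph


def contacts_within(graph, start, max_hops):
    """Breadth-first search returning {person: hops from start}, up to max_hops."""
    hops = {start: 0}
    queue = deque([start])
    while queue:
        person = queue.popleft()
        if hops[person] == max_hops:
            continue  # do not expand beyond the hop limit
        for contact in graph[person]:
            if contact not in hops:
                hops[contact] = hops[person] + 1
                queue.append(contact)
    del hops[start]  # report only other people
    return hops


graph = build_call_graph(call_log)
reached = contacts_within(graph, "suspect", max_hops=2)
print(sorted(reached.items()))
```

Here A and B spoke directly with the suspect, C is reached only through A, and D, E, and F fall outside the two-hop limit. Whether anyone reached this way is actually involved is a question the graph alone cannot answer.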

Another line of investigation may be to track cash transactions to and from domestic and international bank accounts.

Still another line may be to examine the network of places and people visited by the suspect, using such data as train and airline ticket purchases, points of entry or departure in a given country, car rental records, credit card records of purchases, websites visited, and the like.

Given the difficulty nowadays of doing almost anything without leaving an electronic trace, the challenge in link analysis is usually not one of having insufficient data, but rather of deciding which of the megabytes of available data to select for further analysis. Link analysis works best when backed up by other kinds of information, such as tips from police informants or from neighbors of possible suspects.

Once an initial link analysis has identified a possible criminal or terrorist network, it may be possible to determine who the key players are by examining which individuals have the most links to others in the network.
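Counting links per individual is the simplest version of this idea (network analysts call the count a node's degree). The sketch below ranks people in a hypothetical network by the number of distinct others they are linked to; the link list and names are invented for illustration.

```python
from collections import Counter

# Hypothetical undirected links: each pair is one observed connection.
links = [
    ("P1", "P2"), ("P1", "P3"), ("P1", "P4"),
    ("P2", "P3"), ("P4", "P5"),
]


def link_counts(pairs):
    """Count how many distinct people each person is linked to."""
    neighbors = {}
    for a, b in pairs:
        neighbors.setdefault(a, set()).add(b)
        neighbors.setdefault(b, set()).add(a)
    return Counter({person: len(others) for person, others in neighbors.items()})


degree = link_counts(links)
for person, count in degree.most_common():
    print(person, count)
```

In this toy network P1 has the most links and would be the first candidate for a closer look, though a high link count is only a starting point for the analyst's judgment.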

GEOMETRIC CLUSTERING

Because of resource limitations, law enforcement agencies generally focus most of their attention on major crime, with the result that minor offenses such as shoplifting or house burglaries get little attention. If, however, a single person or an organized gang commits many such crimes on a regular basis, the aggregate can constitute significant criminal activity that deserves greater police attention. The problem facing the authorities, then, is to identify within the large numbers of minor crimes that take place every day, clusters that are the work of a single individual or gang.

One example of a “minor” crime that is often carried out on a regular basis by two (and occasionally three) individuals acting together is the so-called bogus official burglary (or distraction burglary). This is where two people turn up at the front door of a homeowner (elderly people are often the preferred targets) posing as some form of officials (perhaps telephone engineers, representatives of a utility company, or local government agents) and, while one person secures the attention of the homeowner, the other moves quickly through the house or apartment taking any cash or valuables that are easily accessible.

Victims of bogus official burglaries often file a report to the police, who will send an officer to the victim's home to take a statement. Since the victim will have spent considerable time with one of the perpetrators (the distracter), the statement will often include a fairly detailed description (gender, race, height, body type, approximate age, general facial appearance, eyes, hair color, hair length, hair style, accent, identifying physical marks, mannerisms, shoes, clothing, unusual jewelry, etc.) together with the number of accomplices and their genders. In principle, this wealth of information makes crimes of this nature ideal for data mining, and in particular for the technique known as geometric clustering, to identify groups of crimes carried out by a single gang. Application of the method is, however, fraught with difficulties, and to date the method appears to have been restricted to one or two experimental studies. We'll look at one such study, both to show how the method works and to illustrate some of the problems often faced by the data-mining practitioner.

The following study was carried out in England in 2000 and 2001 by researchers at the University of Wolverhampton, together with the West Midlands Police.


The study looked at victim statements from bogus official burglaries in the police region over a three-year period. During that period, there were 800 such burglaries recorded, involving 1,292 offenders. This proved to be too great a number for the resources available for the study, so the analysis was restricted to those cases where the distracter was female, a group comprising 89 crimes and 105 offender descriptions.

The first problem encountered was that the descriptions of the perpetrators were for the most part in narrative form, as written by the investigating officer who took the statement from the victim. A data-mining technique known as text mining had to be used to put the descriptions into a structured form. Because of the limitations of the text-mining software available, human input was required to handle many of the entries; for instance, to cope with spelling mistakes, ad hoc or inconsistent abbreviations (e.g., “Bham” or “B'ham” for “Birmingham”), and the use of different ways of expressing the same thing (e.g., “Birmingham accent”, “Bham accent”, “local accent”, “accent: local”, etc.).
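The kind of cleanup described here can be sketched as a normalization step followed by a lookup in a human-maintained alias table. This is not the software used in the study; the table, the function name, and the cleaning rules below are assumptions chosen to cover the variants quoted above.

```python
import re

# Hypothetical alias table maintained by a human reviewer.
ACCENT_ALIASES = {
    "birmingham": "Birmingham",
    "bham": "Birmingham",
    "local": "Birmingham",  # assumes "local" means the Birmingham area in this data set
}


def normalize_accent(raw):
    """Map a free-text accent note to a canonical label, or None if unrecognized."""
    text = raw.lower().replace("'", "").replace("\u2019", "")  # "B'ham" -> "bham"
    text = re.sub(r"\baccent\b", " ", text)   # drop the word "accent"
    text = re.sub(r"[^a-z ]", " ", text)      # drop punctuation such as ":"
    key = " ".join(text.split())              # collapse runs of whitespace
    return ACCENT_ALIASES.get(key)


for note in ["Birmingham accent", "Bham accent", "local accent", "accent: local", "B'ham"]:
    print(repr(note), "->", normalize_accent(note))
```

Entries that return `None` are the ones a person would still need to review, which matches the point that the software alone could not handle every case.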

After some initial analysis, the researchers decided to focus on eight variables: age, height, hair color, hair length, build, accent, race, and number of accomplices.

Once the data had been processed into the appropriate structured format, the next step was to use geometric clustering to group the 105 offender descriptions into collections that were likely to refer to the same individual. To understand how this was done, let's first consider a method that at first sight might appear to be feasible, but which soon proves to have significant weaknesses. Then, by seeing how those weaknesses may be overcome, we will arrive at the method used in the British study.

First, you code each of the eight variables numerically. Age, often a guess, is likely to be recorded either as a single figure or a range; if it is a range, take the mean. Gender (not considered in the British Midlands study because all the cases examined had a female distracter) can be coded as 1 for male, 0 for female. Height may be given as a number (inches), a range, or a term such as “tall”, “medium”, or “short”; again, some method has to be chosen to convert each of these to a single figure. Likewise, schemes have to be devised to represent each of the other variables as a number.
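A minimal sketch of such a coding scheme follows. The rule for ranges (take the mean) and the gender codes come from the text; the inch values assigned to “short”, “medium”, and “tall” are invented placeholders, since the scheme actually chosen is not given here, and the function names are illustrative.

```python
import re

# Hypothetical inch values for verbal height terms; any real scheme would differ.
HEIGHT_TERMS = {"short": 62.0, "medium": 66.0, "tall": 70.0}

# Gender coding as described in the text: 1 for male, 0 for female.
GENDER_CODES = {"male": 1, "female": 0}


def code_number_or_range(text):
    """Return a single figure: the number itself, or the mean of a range like '30-40'."""
    numbers = [float(n) for n in re.findall(r"\d+(?:\.\d+)?", text)]
    if len(numbers) not in (1, 2):
        raise ValueError(f"expected one number or a two-number range, got {text!r}")
    return sum(numbers) / len(numbers)


def code_height(text):
    """Convert a height given as a term, a number of inches, or a range of inches."""
    term = text.strip().lower()
    if term in HEIGHT_TERMS:
        return HEIGHT_TERMS[term]
    return code_number_or_range(text)


def code_gender(text):
    """Look up the numeric gender code; raises KeyError for unexpected values."""
    return GENDER_CODES[text.strip().lower()]


print(code_number_or_range("30-40"), code_height("tall"), code_height("64-68"), code_gender("female"))
```

Raising an error on anything unexpected, rather than guessing, keeps malformed entries visible so that a person can decide how to code them.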