The Numbers Behind NUMB3RS

Authors: Keith Devlin

To experts in the methods of scientific investigation, it is simply mind-boggling to hear fingerprint evidence justified by the “no two are ever the same” claim. That is, at best, the right answer to the wrong question. Even if the one-trillion-plus possible pairings of full-set “exemplar” prints from the FBI's 150-million-set noncriminal database were thoroughly examined by the best human experts and found to satisfy the “no two ever match” claim, the level of assurance provided by that claim alone would be minimal. The right sort of question is this: How often are individual experts wrong when they declare a match between a high-quality exemplar of ten fingers and smudged partial prints of two fingers lifted from a crime scene?

There is a compelling irony in the fact that DNA evidence (discussed in Chapter 7), which in the 1980s and '90s only gradually earned its place in the courtroom as “genetic fingerprinting” through scientific validation studies, is now being cited as the standard for validating the claimed reliability of fingerprint evidence. The careful scientific foundation that was laid then, bringing data, hardcore probability theory, and statistical analysis to bear on questions about the likelihood of an erroneous DNA match, has by now established a “single point of comparison” (but a very powerful one) for fingerprint evidence. Charlie's question, “But prints don't have odds?” isn't heard only on TV.

Just after Christmas in 2005, the Supreme Judicial Court in Massachusetts ruled that prosecutors in the retrial of defendant Terry L. Patterson could not present the proposed testimony of an expert examiner matching Patterson's prints with those found on the car of a Boston police detective who was murdered in 1993. The ruling came after the court solicited amicus curiae (“friend of the court”) briefs from a variety of scientific and legal experts regarding the reliability of identifications based on “simultaneous impressions.” Specifically, the examiner from the Boston Police Department was prepared to testify that three partial prints found on the detective's car appeared conclusively to have been made at the same time, therefore by the same individual, and that he had found six points of comparison on one finger, two on another finger, and five on a third.

Even by the loose standards of American fingerprint experts regarding the minimum number of points required to declare a match, this combining of different fingers with just a few points of comparison on each one (that is, the use of “simultaneous impressions”) is quite a stretch. Although at least one of the amicus briefs, authored by a blue-ribbon team of statisticians, scientists, and legal scholars, asked the court to rule that all fingerprint evidence should be excluded from trials until its validity has been tested and its error rates determined, the court (perhaps not surprisingly) limited its ruling to the particular testimony offered.

The arguments made in Patterson and in several other similar cases cite recent examples of mistakes made in fingerprint identifications offered in criminal trials. One of these was the 1997 conviction of Stephan Cowans for the shooting of a Boston policeman based on a combination of eyewitness testimony and a thumbprint found on a glass mug from which the shooter drank water. After serving six years of a thirty-five-year sentence, Cowans had earned enough money in prison to pay for a DNA test of the evidence. That test exonerated him, and he was released from prison.

In another notorious case, the lawyers defending Byron Mitchell on a charge of armed robbery in 1999 questioned the reliability of his identification based on two prints lifted from the getaway car. To bolster the prosecution's arguments on admissibility of the testimony of their fingerprint expert, the FBI sent the two prints and Mitchell's exemplar to fifty-three crime labs for confirmation. This test was not nearly so stringent as the kinds of tests that scientists have proposed, involving matching between groups of fingerprint samples. Nevertheless, of the thirty-nine labs that sent back opinions, nine (23 percent) declared that Mitchell's prints were not a match for the prints from the getaway car. The judge rejected the defense challenge, however, and Mitchell was convicted and sent to prison. As of this writing, the FBI has not repeated this sort of test, and the bureau still claims that there has never been a case where one of its fingerprint experts has given court testimony based on an erroneous match. That claim hangs by a slender thread, however, in light of the following story.

AN FBI FINGERPRINT FIASCO: THE BRANDON MAYFIELD CASE

On the morning of March 11, 2004, a series of coordinated bombings of the commuter train system in Madrid killed 191 people and wounded more than two thousand. The attack was blamed on local Islamic extremists inspired by Al Qaeda. The attacks came three days before Spanish elections, and an angry electorate ousted the conservative government, which had backed the U.S. effort in Iraq. Throughout Europe and the world, the repercussions were enormous. No surprise, then, that the FBI was eager to help when Spanish authorities sent them a digital copy of fingerprints found on a plastic bag full of detonators discovered near the scene of one of the bombings, prints that the Spanish investigators had not been able to match.

The FBI's database included the fingerprints of a thirty-seven-year-old Portland-area lawyer, Brandon Mayfield, obtained when he served as a lieutenant in the United States Army. In spite of the relatively poor quality of the digital images sent by the Spanish investigators, three examiners from the FBI's Latent Fingerprint Unit claimed to make a positive match between the crime-scene prints and those of Mayfield. Though Mayfield had never been to Spain, the FBI was understandably intrigued to find a match to his fingerprints: He had converted to Islam in the 1980s and had already attracted interest by defending a Muslim terrorist suspect, Jeffrey Battle, in a child custody case. Acting under the U.S. Patriot Act, the FBI twice surreptitiously entered his family's home and removed potential evidence, including computers, papers, copies of the Koran, and what were later described as “Spanish documents” (some homework papers of one of Mayfield's sons, as it turned out). Confident that they had someone who not only matched the crime-scene fingerprints but also was plausibly involved in the Madrid bombing plot, the FBI imprisoned Mayfield under the Patriot Act as a “material witness.”

Mayfield was held for two weeks, then released, but he was not fully cleared of suspicion or freed from restrictions on his movements until four days later, when a federal judge dismissed the “material witness” proceedings against him, based substantially upon evidence that Spanish authorities had linked the original latent fingerprints to an Algerian. It turned out that the FBI had known before detaining Mayfield that the forensic science division of the Spanish National Police disagreed with the FBI experts' opinion that his fingerprints were a match for the crime-scene prints. After the judge's ruling, which ordered the FBI to return all property and personal documents seized from Mayfield's home, the bureau issued a statement apologizing to him and his family for “the hardships that this matter has caused.”

A U.S. Attorney in Oregon, Karin Immergut, took pains to deny that Mayfield was targeted because of his religion or the clients he had represented. Indeed, court documents suggested that the initial error was due to an FBI supercomputer's selecting his prints from its database, and that the error was compounded by the FBI's expert analysts. As would be expected, the government conducted several investigations of this embarrassing failure of the bureau's highly respected system of fingerprint identification. According to a November 17, 2004, article in The New York Times, an international team of forensic experts, led by Robert B. Stacey, head of the quality-assurance unit of the FBI's Quantico, Virginia, laboratory, concluded that the two fingerprint experts asked to confirm the first expert's opinion erred because “the FBI culture discouraged fingerprint examiners from disagreeing with their superiors.” So much for the myth of the dispassionate, objective scientist.

WHAT'S A POOR MATHEMATICIAN TO DO?

In TV land, Don and Charlie would not rest until they found out not only who committed the garroting murder, but whether Carl Howard was innocent of the previous crime and, if so, who the real killer was. Predictably, within the episode's forty-two minutes (the running time left in an hour-long slot once the commercials are taken out), Charlie was able to help Don and his fellow agents apprehend the real perpetrator of both crimes, who turned out to be the eyewitness who had identified Carl Howard in the police lineup (a conflict of interest that does not occur too often in actual cases). The fingerprint identification of Carl Howard was just plain wrong.

Given the less than reassuring state of affairs in the real world, with the looming possibility of challenges to fingerprint identifications both in new criminal cases and in the form of appeals of old convictions, many mathematicians and statisticians, along with other scientists, would like to help. No one seriously doubts that fingerprints are an extremely valuable tool for crime investigators and prosecutors. But the principles of fairness and integrity that are part of the very foundations of the criminal justice system and the system of knowing called science demand that the long-overdue study and analysis of the reliability of fingerprint evidence be undertaken without further pointless delay. The rate of errors in expert matching of fingerprints is clearly dependent on a number of mathematically quantifiable factors, including:

- the skill of the expert

- the protocol and method used by the expert in the individualization process

- the image quality, completeness, and number of fingers in the samples to be compared

- the number of possible matches the expert is asked to consider for a suspect print

- the time available to perform the analysis

- the size and composition of the gallery of exemplars available for comparison

- the frequency of near agreement between partial or complete prints of individual fingers from different people.
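Once such factors are controlled in a study, the basic output is an observed proportion of wrong calls, and any honest report of it should carry a measure of uncertainty. The Python sketch below attaches an approximate 95 percent confidence interval to an observed proportion using the Wilson score interval, a standard method chosen here for illustration (the text does not prescribe one). As input it reuses the Mitchell survey figures from earlier in this section, purely as an example: those labs were not a random sample and were judging a single case, so 9 of 39 measures disagreement among labs, not a validated error rate.

```python
import math

def wilson_interval(k: int, n: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a binomial proportion k/n.

    z = 1.96 gives an approximate 95% interval.
    """
    if n <= 0 or not 0 <= k <= n:
        raise ValueError("need n > 0 and 0 <= k <= n")
    p_hat = k / n
    denom = 1 + z * z / n
    centre = (p_hat + z * z / (2 * n)) / denom
    half_width = (z / denom) * math.sqrt(p_hat * (1 - p_hat) / n + z * z / (4 * n * n))
    return centre - half_width, centre + half_width

# Illustrative input only: in the Mitchell survey, 9 of the 39 labs that
# replied did not agree that the prints matched.
k, n = 9, 39
low, high = wilson_interval(k, n)
print(f"observed rate: {k / n:.1%}")                     # observed rate: 23.1%
print(f"approx. 95% interval: {low:.1%} to {high:.1%}")  # approx. 95% interval: 12.6% to 38.3%
```

Even taken at face value, an interval running from roughly 13 to 38 percent shows how little a few dozen responses pin down; narrowing it requires many more examiners and many more test cases.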

Perhaps the biggest driver for consideration of such quantifiable factors in the coming years will not be the demands of the criminal justice system, but the need for substantial development and improvement of automated systems for fingerprint verification and identification, for example in “biometric security systems” and in rapid fingerprint screening systems for use in homeland security.

FINGERPRINTS ONLINE

By the time the twentieth century was drawing to a close, the FBI's collection of fingerprints, begun in 1924, had grown to more than 200 million index cards, stored in row after row of filing cabinets (over 2,000 of them) that occupied approximately an acre of floor space at the FBI's Criminal Justice Information Services Division in Clarksburg, West Virginia. The bureau was receiving more than 30,000 requests a day for fingerprint comparisons. The need for electronic storage and automated search was clear.

The challenge was to find the most efficient way to encode digitized versions of the fingerprint images. (Digital capture of fingerprints in the first place came later, adding an extra layer of efficiency, though also raising legal concerns about the fidelity of such crucial items of evidence when the alteration of a digital image is such an easy matter.) The solution chosen made use of a relatively new branch of mathematics called wavelet theory. This choice led to the establishment of a national standard: the discrete wavelet transform-based algorithm, sometimes referred to as Wavelet/Scalar Quantization (WSQ).
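The "transform, then quantize" idea behind Wavelet/Scalar Quantization can be illustrated with the simplest wavelet of all, the Haar wavelet. The Python sketch below is a toy stand-in, not the WSQ algorithm itself: the actual standard uses a different, longer wavelet filter pair, decomposes the two-dimensional image into many frequency subbands, and then entropy-codes the quantized values. Here one row of hypothetical pixel values is split into coarse coefficients (scaled pairwise sums) and detail coefficients (scaled pairwise differences); the small details are scalar-quantized to zero, which is where both the storage saving and the small loss of detail come from.

```python
import math

SQRT2 = math.sqrt(2.0)

def haar_forward(signal: list[float]) -> tuple[list[float], list[float]]:
    """One level of the orthonormal Haar transform on an even-length signal."""
    if len(signal) % 2:
        raise ValueError("signal length must be even")
    coarse, detail = [], []
    for a, b in zip(signal[0::2], signal[1::2]):
        coarse.append((a + b) / SQRT2)   # scaled local average
        detail.append((a - b) / SQRT2)   # scaled local difference
    return coarse, detail

def haar_inverse(coarse: list[float], detail: list[float]) -> list[float]:
    """Exact inverse of haar_forward: rebuilds each original pair (a, b)."""
    out = []
    for s, d in zip(coarse, detail):
        out.append((s + d) / SQRT2)
        out.append((s - d) / SQRT2)
    return out

def quantize(coeffs: list[float], step: float) -> list[int]:
    """Uniform scalar quantization: map each coefficient to an integer bin."""
    return [round(c / step) for c in coeffs]

def dequantize(bins: list[int], step: float) -> list[float]:
    return [q * step for q in bins]

row = [100, 102, 98, 97, 150, 152, 60, 61]  # hypothetical grayscale pixel row
coarse, detail = haar_forward(row)
q = quantize(detail, step=4.0)              # coarse step: small details become 0
print(q)                                    # [0, 0, 0, 0]
approx = haar_inverse(coarse, dequantize(q, 4.0))
print([round(x, 2) for x in approx])        # [101.0, 101.0, 97.5, 97.5, 151.0, 151.0, 60.5, 60.5]
```

A run of zeros costs almost nothing to store, yet every reconstructed pixel here is within one gray level of the original, which is the trade-off the next paragraph describes for fingerprint images.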

Like the much more widely known JPEG-2000 digital image encoding standard, which also uses wavelet theory, WSQ is essentially a compression algorithm, which processes the original digital image to give a file that uses less storage. When scanned at 500 pixels per inch, a set of fingerprints will generate a digital file of around 10 MB. In the 1990s, when the system was being developed, that would have meant that the FBI needed a lot of electronic file space, but the problem was not so much storing the files as moving them around the country (and the world) quickly, sometimes over slow modem connections to law enforcement agents in remote locations. The WSQ system reduces the file size by a factor of 20, which means that the resulting file is a mere 500 KB. There's mathematical power for you. To be sure, you lose some details in the process, but not enough to be noticeable to the human eye, even when the resulting image is blown up to several times actual fingerprint size for a visual comparison.
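The size figures above are simple arithmetic, and a short Python sketch also shows why transmission, more than storage, was the pressure point. The 56 kbit/s link speed is an assumption chosen to represent a 1990s dial-up modem, megabytes and kilobytes are taken as decimal units, and the timing ignores protocol overhead and any modem-level compression.

```python
def compressed_bytes(raw_bytes: float, ratio: float = 20.0) -> float:
    """Size after compression by the given ratio (the text cites about 20:1 for WSQ)."""
    return raw_bytes / ratio

def transfer_seconds(n_bytes: float, bits_per_second: float) -> float:
    """Idealized transfer time: 8 bits per byte, no protocol overhead."""
    return n_bytes * 8 / bits_per_second

RAW = 10_000_000    # about 10 MB for one set of prints, as stated in the text
MODEM_BPS = 56_000  # assumed 56 kbit/s dial-up link (illustrative)

small = compressed_bytes(RAW)
print(f"compressed: {small / 1000:.0f} KB")                                # compressed: 500 KB
print(f"raw over modem: {transfer_seconds(RAW, MODEM_BPS) / 60:.1f} min")  # raw over modem: 23.8 min
print(f"compressed over modem: {transfer_seconds(small, MODEM_BPS):.0f} s")  # compressed over modem: 71 s
```

Under these assumptions, compression turns a wait of nearly half an hour per set of prints into about a minute.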


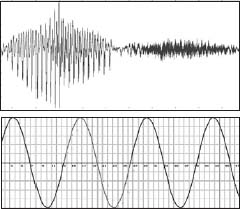

The idea behind wavelet encoding (and compression) goes back to the work of the early nineteenth-century French mathematician Joseph Fourier, who showed how virtually any real-world function that takes real numbers and produces real-number values, at least over a finite interval, can be represented as a sum of multiples of the familiar sine and cosine functions. (See figure 7.) Fourier himself was interested in functions that describe the way heat dissipates, but his mathematics works for a great many functions, including those that describe digital images. (From a mathematical standpoint, a digital image is a function, namely one that assigns to each pixel a number that represents a particular color or shade of gray.) For almost all real-world functions you need to add together infinitely many sine and cosine functions to reproduce the function exactly, but Fourier provided a method for computing the coefficient of each sine and cosine term in the sum.
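Fourier's recipe for the coefficients can be tried numerically. For a function f on the interval from -π to π, the coefficients are a_n = (1/π)∫ f(x) cos(nx) dx and b_n = (1/π)∫ f(x) sin(nx) dx, and the series is a_0/2 plus the sum over n ≥ 1 of a_n cos(nx) + b_n sin(nx). The Python sketch below approximates those integrals with the midpoint rule (a simple choice made here for illustration) and applies them to f(x) = x, whose coefficients are known exactly: every a_n is 0 and b_n = 2(-1)^(n+1)/n. Image processing works with discrete, two-dimensional data and uses fast discrete transforms instead, so treat this as a one-dimensional illustration only.

```python
import math

def fourier_coefficients(f, n_terms: int, samples: int = 4096):
    """Approximate a_n and b_n (n = 0..n_terms) for f on [-pi, pi].

    a_n = (1/pi) * integral of f(x) cos(n x) dx
    b_n = (1/pi) * integral of f(x) sin(n x) dx
    The integrals are estimated with the midpoint rule on `samples` points.
    """
    h = 2 * math.pi / samples
    xs = [-math.pi + (k + 0.5) * h for k in range(samples)]
    ys = [f(x) for x in xs]
    a = [h / math.pi * sum(y * math.cos(n * x) for x, y in zip(xs, ys))
         for n in range(n_terms + 1)]
    b = [h / math.pi * sum(y * math.sin(n * x) for x, y in zip(xs, ys))
         for n in range(n_terms + 1)]
    return a, b

def partial_sum(a, b, x: float) -> float:
    """Evaluate a_0/2 + sum over n >= 1 of (a_n cos(n x) + b_n sin(n x))."""
    total = a[0] / 2
    for n in range(1, len(a)):
        total += a[n] * math.cos(n * x) + b[n] * math.sin(n * x)
    return total

a5, b5 = fourier_coefficients(lambda x: x, n_terms=5)
print([round(v, 3) for v in b5[1:]])  # [2.0, -1.0, 0.667, -0.5, 0.4]

# More terms bring the sum closer to f(pi/2) = pi/2 (convergence is slow here).
a50, b50 = fourier_coefficients(lambda x: x, n_terms=50)
for label, (a, b) in (("5 terms", (a5, b5)), ("50 terms", (a50, b50))):
    print(label, partial_sum(a, b, math.pi / 2), "vs", math.pi / 2)
```

The slow convergence for f(x) = x, a function that jumps when extended periodically, hints at why later mathematicians looked for building blocks better suited to sharp local features, which is the role wavelets play.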