Superintelligence: Paths, Dangers, Strategies

Authors: Nick Bostrom

Tags: #Science, #Philosophy, #Non-Fiction

The ultimately attainable advantages of machine intelligence, hardware and software combined, are enormous.³³ But how rapidly could those potential advantages be realized? That is the question to which we now turn.

The kinetics of an intelligence explosion

Once machines attain some form of human-equivalence in general reasoning ability, how long will it then be before they attain radical superintelligence? Will this be a slow, gradual, protracted transition? Or will it be sudden, explosive? This chapter analyzes the kinetics of the transition to superintelligence as a function of optimization power and system recalcitrance. We consider what we know or may reasonably surmise about the behavior of these two factors in the neighborhood of human-level general intelligence.

Given that machines will eventually vastly exceed biology in general intelligence, but that machine cognition is currently vastly narrower than human cognition, one is led to wonder how quickly this usurpation will take place. The question we are asking here must be sharply distinguished from the question we considered in Chapter 1 about how far away we currently are from developing a machine with human-level general intelligence. Here the question is instead, if and when such a machine is developed, how long will it be from then until a machine becomes radically superintelligent? Note that one could think that it will take quite a long time until machines reach the human baseline, or one might be agnostic about how long that will take, and yet have a strong view that once this happens, the further ascent into strong superintelligence will be very rapid.

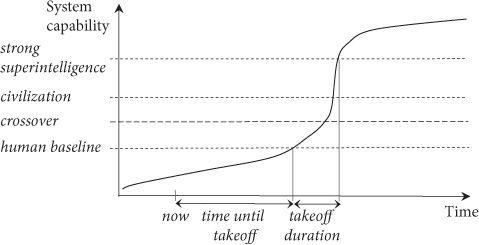

It can be helpful to think about these matters schematically, even though doing so involves temporarily ignoring some qualifications and complicating details. Consider, then, a diagram that plots the intellectual capability of the most advanced machine intelligence system as a function of time (Figure 7).

A horizontal line labeled “human baseline” represents the effective intellectual capabilities of a representative human adult with access to the information sources and technological aids currently available in developed countries. At present, the most advanced AI system is far below the human baseline on any reasonable metric of general intellectual ability. At some point in future, a machine might reach approximate parity with this human baseline (which we take to be fixed, anchored to the year 2014, say, even if the capabilities of human individuals should have increased in the intervening years): this would mark the onset of the takeoff. The capabilities of the system continue to grow, and at some later point the system reaches parity with the combined intellectual capability of all of humanity (again anchored to the present): what we may call the “civilization baseline”. Eventually, if the system’s abilities continue to grow, it attains “strong superintelligence”: a level of intelligence vastly greater than contemporary humanity’s combined intellectual wherewithal. The attainment of strong superintelligence marks the completion of the takeoff, though the system might continue to gain in capacity thereafter. Sometime during the takeoff phase, the system may pass a landmark which we can call “the crossover”, a point beyond which the system’s further improvement is mainly driven by the system’s own actions rather than by work performed upon it by others.¹ (The possible existence of such a crossover will become important in the subsection on optimization power and explosivity, later in this chapter.)

Figure 7. Shape of the takeoff. It is important to distinguish between these questions: “Will a takeoff occur, and if so, when?” and “If and when a takeoff does occur, how steep will it be?” One might hold, for example, that it will be a very long time before a takeoff occurs, but that when it does it will proceed very quickly. Another relevant question (not illustrated in this figure) is, “How large a fraction of the world economy will participate in the takeoff?” These questions are related but distinct.

With this picture in mind, we can distinguish three classes of transition scenarios—scenarios in which systems progress from human-level intelligence to superintelligence—based on their steepness; that is to say, whether they represent a slow, fast, or moderate takeoff.

Slow

A slow takeoff is one that occurs over some long temporal interval, such as decades or centuries. Slow takeoff scenarios offer excellent opportunities for human political processes to adapt and respond. Different approaches can be tried and tested in sequence. New experts can be trained and credentialed. Grassroots campaigns can be mobilized by groups that feel they are being disadvantaged by unfolding developments. If it appears that new kinds of secure infrastructure or mass surveillance of AI researchers is needed, such systems could be developed and deployed. Nations fearing an AI arms race would have time to try to negotiate treaties and design enforcement mechanisms. Most preparations undertaken before onset of the slow takeoff would be rendered obsolete as better solutions would gradually become visible in the light of the dawning era.

Fast

A fast takeoff occurs over some short temporal interval, such as minutes, hours, or days. Fast takeoff scenarios offer scant opportunity for humans to deliberate. Nobody need even notice anything unusual before the game is already lost. In a fast takeoff scenario, humanity’s fate essentially depends on preparations previously put in place. At the slowest end of the fast takeoff scenario range, some simple human actions might be possible, analogous to flicking open the “nuclear suitcase”; but any such action would either be elementary or have been planned and pre-programmed in advance.

Moderate

A moderate takeoff is one that occurs over some intermediary temporal interval, such as months or years. Moderate takeoff scenarios give humans some chance to respond but not much time to analyze the situation, to test different approaches, or to solve complicated coordination problems. There is not enough time to develop or deploy new systems (e.g. political systems, surveillance regimes, or computer network security protocols), but extant systems could be applied to the new challenge.

During a slow takeoff, there would be plenty of time for the news to get out. In a moderate takeoff, by contrast, it is possible that developments would be kept secret as they unfold. Knowledge might be restricted to a small group of insiders, as in a covert state-sponsored military research program. Commercial projects, small academic teams, and “nine hackers in a basement” outfits might also be clandestine, though if the prospect of an intelligence explosion were “on the radar” of state intelligence agencies as a national security priority, then the most promising private projects would seem to have a good chance of being under surveillance. The host state (or a dominant foreign power) would then have the option of nationalizing or shutting down any project that showed signs of commencing takeoff. Fast takeoffs would happen so quickly that there would not be much time for word to get out or for anybody to mount a meaningful reaction if it did. But an outsider might intervene before the onset of the takeoff if they believed a particular project to be closing in on success.

Moderate takeoff scenarios could lead to geopolitical, social, and economic turbulence as individuals and groups jockey to position themselves to gain from the unfolding transformation. Such upheaval, should it occur, might impede efforts to orchestrate a well-composed response; alternatively, it might enable solutions more radical than calmer circumstances would permit. For instance, in a moderate takeoff scenario where cheap and capable emulations or other digital minds gradually flood labor markets over a period of years, one could imagine mass protests by laid-off workers pressuring governments to increase unemployment benefits or institute a living wage guarantee to all human citizens, or to levy special taxes or impose minimum wage requirements on employers who use emulation workers. In order for any relief derived from such policies to be more than fleeting, support for them would somehow have to be cemented into permanent power structures. Similar issues can arise if the takeoff is slow rather than moderate, but the disequilibrium and rapid change in moderate scenarios may present special opportunities for small groups to wield disproportionate influence.

It might appear to some readers that of these three types of scenario, the slow takeoff is the most probable, the moderate takeoff is less probable, and the fast takeoff is utterly implausible. It could seem fanciful to suppose that the world could be radically transformed and humanity deposed from its position as apex cogitator over the course of an hour or two. No change of such moment has ever occurred in human history, and its nearest parallels, the Agricultural and Industrial Revolutions, played out over much longer timescales (centuries to millennia in the former case, decades to centuries in the latter). So the base rate for the kind of transition entailed by a fast or moderate takeoff scenario, in terms of the speed and magnitude of the postulated change, is zero: it lacks precedent outside myth and religion.² Nevertheless, this chapter will present some reasons for thinking that the slow transition scenario is improbable. If and when a takeoff occurs, it will likely be explosive.

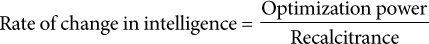

To begin to analyze the question of how fast the takeoff will be, we can conceive of the rate of increase in a system’s intelligence as a (monotonically increasing) function of two variables: the amount of “optimization power”, or quality-weighted design effort, that is being applied to increase the system’s intelligence, and the responsiveness of the system to the application of a given amount of such optimization power. We might term the inverse of responsiveness “recalcitrance”, and write:

$$\text{Rate of change in intelligence} = \frac{\text{Optimization power}}{\text{Recalcitrance}}$$

Pending some specification of how to quantify intelligence, design effort, and recalcitrance, this expression is merely qualitative. But we can at least observe that a system’s intelligence will increase rapidly if either a lot of skilled effort is applied to the task of increasing its intelligence and the system’s intelligence is not too hard to increase or there is a non-trivial design effort and the system’s recalcitrance is low (or both). If we know how much design effort is going into improving a particular system, and the rate of improvement this effort produces, we could calculate the system’s recalcitrance.
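The last point can be illustrated with a small Python sketch. It assumes the qualitative relation is read literally as rate of improvement = optimization power / recalcitrance, with all quantities in arbitrary, hypothetical units; the function names and the numbers are illustrative assumptions, not anything specified in the text.

```python
def improvement_rate(optimization_power: float, recalcitrance: float) -> float:
    """Rate of change in intelligence, read as optimization power / recalcitrance."""
    if recalcitrance <= 0:
        raise ValueError("recalcitrance must be positive")
    return optimization_power / recalcitrance


def inferred_recalcitrance(optimization_power: float, observed_rate: float) -> float:
    """Back out recalcitrance from a known design effort and the improvement it produced."""
    if observed_rate <= 0:
        raise ValueError("observed_rate must be positive")
    return optimization_power / observed_rate


# Hypothetical numbers in arbitrary units, for illustration only.
rate = improvement_rate(optimization_power=10.0, recalcitrance=4.0)
recovered = inferred_recalcitrance(optimization_power=10.0, observed_rate=rate)
print(rate, recovered)
```

The second function simply inverts the first, which is all the calculation amounts to under this reading. Any real application would first need the quantification of intelligence and design effort that the text notes is still pending.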

Further, we can observe that the amount of optimization power devoted to improving some system’s performance varies between systems and over time. A system’s recalcitrance might also vary depending on how much the system has already been optimized. Often, the easiest improvements are made first, leading to diminishing returns (increasing recalcitrance) as low-hanging fruits are depleted. However, there can also be improvements that make further improvements easier, leading to improvement cascades. The process of solving a jigsaw puzzle starts out simple—it is easy to find the corners and the edges. Then recalcitrance goes up as subsequent pieces are harder to fit. But as the puzzle nears completion, the search space collapses and the process gets easier again.
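To make the contrast between diminishing returns and improvement cascades concrete, the sketch below integrates the relation rate = optimization power / recalcitrance in small time steps under three hypothetical recalcitrance curves. The functional forms (recalcitrance proportional to the current level, constant, or inversely proportional to it), the step size, and the units are all assumptions chosen for illustration; the text does not commit to any of them.

```python
from typing import Callable, List


def simulate(
    recalcitrance: Callable[[float], float],
    optimization_power: float = 1.0,
    start_level: float = 1.0,
    steps: int = 100,
    dt: float = 0.1,
) -> List[float]:
    """Euler-integrate d(level)/dt = optimization_power / recalcitrance(level)."""
    level = start_level
    history = [level]
    for _ in range(steps):
        level += dt * optimization_power / recalcitrance(level)
        history.append(level)
    return history


# Hypothetical recalcitrance curves (assumptions, not taken from the text):
diminishing = simulate(lambda level: level)        # harder as the system improves
constant = simulate(lambda level: 1.0)             # equally hard throughout
cascading = simulate(lambda level: 1.0 / level)    # easier as the system improves

print(diminishing[-1], constant[-1], cascading[-1])
```

With identical optimization power, the run with rising recalcitrance ends lowest and the run with falling recalcitrance ends highest. A jigsaw-like case, where recalcitrance first rises and then falls, could be explored by passing a non-monotonic function instead.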

To proceed in our inquiry, we must therefore analyze how recalcitrance and optimization power might vary in the critical time periods during the takeoff. This will occupy us over the next few pages.

Let us begin with recalcitrance. The outlook here depends on the type of the system under consideration. For completeness, we first cast a brief glance at the recalcitrance that would be encountered along paths to superintelligence that do not involve advanced machine intelligence. We find that recalcitrance along those paths appears to be fairly high. That done, we will turn to the main case, which is that the takeoff involves machine intelligence; and there we find that recalcitrance at the critical juncture seems low.