Incognito (8 page)

Authors: David Eagleman

Eric reports that although he first perceived the tongue stimulation as unidentifiable edges and shapes, he quickly learned to recognize the stimulation at a deeper level. He can now pick up a cup of coffee or kick a soccer ball back and forth with his daughter.

33

If seeing with your tongue sounds strange, think of the experience of a blind person learning to read

Braille. At first it’s just bumps; eventually those bumps come to have meaning. And if you’re having a hard time imagining the transition from cognitive puzzle to direct perception, just consider the way you are reading

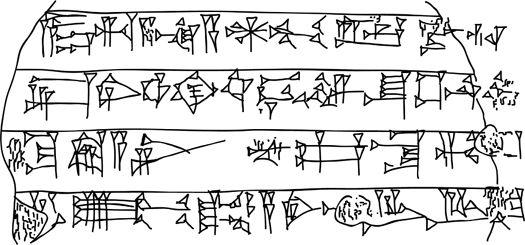

the letters on this page. Your eyes flick effortlessly over the ornate shapes without any awareness that you are translating them: the meaning of the words simply comes to you. You perceive the language, not the low-level details of the graphemes. To drive home the point, try reading this:

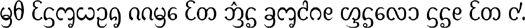

If you were an ancient Sumerian, the meaning would be readily apparent—it would flow off the tablet directly into meaning with no awareness of the mediating shapes. And the meaning of the next sentence is immediately apparent if you’re from Jinghong, China (but not from other Chinese regions):

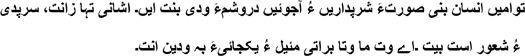

This next sentence is hilariously funny if you are a reader of the northwestern Iranian language of Baluchi:

To the reader of cuneiform, New Tai Lue, or Baluchi, the rest of the English script on this page looks as foreign and uninterpretable as their script looks to you. But these letters are effortless for you, because you’ve already turned the chore of cognitive translation into direct perception.

And so it goes with the electrical signals coming into the brain: at first they are meaningless; with time they accrue meaning. In the same way that you immediately “see” the meaning in these words, your brain “sees” a timed barrage of electrical and chemical signals as, say, a horse galloping between snow-blanketed pine trees. To Mike May’s brain, the neural letters coming in are still in need of translation. The visual signals generated by the horse are uninterpretable bursts of activity, giving little indication, if any, of what’s out there; the signals on his retina are like letters of Baluchi that struggle to be translated one by one. To Eric Weihenmayer’s brain, his tongue is sending messages in New Tai Lue—but with enough practice, his brain learns to understand the language. At that point, his understanding of the visual world is as directly apparent as the words of his native tongue.

Here’s an amazing consequence of the brain’s plasticity: in the future we may be able to plug new sorts of data streams directly into the brain, such as infrared or ultraviolet vision, or even weather data or stock market data.

34

The brain will struggle to absorb the data at first, but eventually it will learn to speak the language. We’ll be able to add new functionality and roll out Brain 2.0.

This idea is not science fiction; the work has already begun. Recently, researchers Gerald Jacobs and Jeremy Nathans took the gene for a human photopigment—a protein in the retina that absorbs light of a particular wavelength—and spliced it into color-blind mice.

35

What emerged? Color vision. These mice can now tell different colors apart. Imagine you give them a task in which they can gain a reward by hitting a blue button but they get no reward for hitting a red button. You randomize the positions of the buttons on each trial. The modified mice, it turns out, learn to choose the blue button, while to normal mice the buttons look indistinguishable—and hence they choose randomly. The brains of the new mice have figured out how to listen to the new dialect their eyes are speaking.

From the natural laboratory of evolution comes a related phenomenon in humans. At least 15 percent of human females possess a genetic mutation that gives them an extra (fourth) type of color photoreceptor—and this allows them to discriminate between colors that look identical to the majority of us with a mere three types of color photoreceptors.

36

Two color swatches that look identical to the majority of people would be clearly distinguishable to these ladies. (No one has yet determined what percentage of fashion arguments is caused by this mutation.)

So plugging new data streams into the brain is not a theoretical notion; it already exists in various guises. It may seem surprising how easily new inputs can become operable—but, as Paul Bach-y-Rita simply summarized his decades of research, “Just give the brain the information and it will figure it out.”

If any of this has changed your view of how you perceive reality, strap in, because it gets stranger. We’ll next discover why seeing has very little to do with your eyes.

In the traditionally taught view of perception, data from the sensorium pours into the brain, works its way up the sensory hierarchy, and makes itself seen, heard, smelled, tasted, felt—“perceived.” But a closer examination of the data suggests this is incorrect. The brain is properly thought of as a mostly closed system that runs on its own internally generated activity.

37

We already have many examples of this sort of activity: for example, breathing, digestion, and walking are controlled by autonomously running activity generators in your brain stem and spinal cord. During dream sleep the brain is isolated from its normal input, so internal activation is the only source of cortical stimulation. In the awake state, internal activity is the basis for imagination and hallucinations.

The more surprising aspect of this framework is that the internal data is not generated by external sensory data but merely modulated by it. In 1911, the Scottish mountaineer and neurophysiologist Thomas Graham Brown showed that the program for moving the muscles for walking is built into the machinery of the spinal cord.

38

He severed the sensory nerves from a cat’s legs and demonstrated that the cat could walk on a treadmill perfectly well. This indicated that the program for walking was internally generated in the spinal cord and that sensory feedback from the legs was used only to modulate the program—when, say, the cat stepped on a slippery surface and needed to stay upright.

The deep secret of the brain is that not only the spinal cord but the entire central nervous system works this way: internally generated activity is modulated by sensory input. In this view, the difference between being awake and being asleep is merely that the data coming in from the eyes anchors the perception. Asleep vision (dreaming) is perception that is not tied down to anything in the real world; waking perception is something like dreaming with a little more commitment to what’s in front of you. Other examples of unanchored perception are found in prisoners in pitch-dark solitary confinement, or in people in sensory deprivation chambers. Both of these situations quickly lead to hallucinations.

Ten percent of people with eye disease and visual loss will experience visual hallucinations. In the bizarre disorder known as Charles Bonnet syndrome, people losing their sight will begin to see things—such as flowers, birds, other people, buildings—that they know are not real. Bonnet, a Swiss philosopher who lived in the 1700s, first described this phenomenon when he noticed that his grandfather, who was losing his vision to cataracts, tried to interact with objects and animals that were not physically there.

Although the syndrome has been in the literature for centuries, it is underdiagnosed for two reasons. The first is that many physicians do not know about it and attribute its symptoms to dementia. The second is that the people experiencing the hallucinations are discomfited by the knowledge that their visual scene is at least partially the counterfeit coinage of their brains. According to several surveys, most of them will never mention their hallucinations to their doctor out of fear of being diagnosed with mental illness.

As far as the clinicians are concerned, what matters most is whether the patient can perform a reality check and know that he is hallucinating; if so, the vision is labeled a pseudohallucination. Of course, sometimes it’s quite difficult to know if you’re hallucinating. You might hallucinate a silver pen on your desk right now and never suspect it’s not real—because its presence is plausible. It’s easy to spot a hallucination only when it’s bizarre. For all we know, we hallucinate all the time.

As we’ve seen, what we call normal perception does not really differ from hallucinations, except that the latter are not anchored by external input. Hallucinations are simply unfastened vision.

Collectively, these strange facts give us a surprising way to look at the brain, as we are about to see.

Early ideas of brain function were squarely based on a computer analogy: the brain was an input–output device that moved sensory information through different processing stages until reaching an end point.

But this assembly line model began to draw suspicion when it was discovered that brain wiring does not simply run from A to B to C: there are feedback loops from C to B, C to A, and B to A. Throughout the brain there is as much feedback as feedforward—a feature of brain wiring that is technically called recurrence and colloquially called loopiness.

39

The whole system looks a lot more like a marketplace than an assembly line. To the careful observer, these features of the neurocircuitry immediately raise the possibility that visual perception is not a procession of data crunching that begins from the eyes and ends with some mysterious end point at the back of the brain.

In fact, nested feedback connections are so extensive that the system can even run backward. That is, in contrast to the idea that primary sensory areas merely process input into successively more complex interpretations for the next highest area of the brain, the higher areas are also talking directly back to the lower ones. For instance: shut your eyes and imagine an ant crawling on a red-and-white tablecloth toward a jar of purple jelly. The low-level parts of your visual system just lit up with activity. Even though you weren’t actually seeing the ant, you were seeing it in your mind’s eye. The higher-level areas were driving the lower ones. So although the eyes feed into these low-level brain areas, the interconnectedness of the system means these areas do just fine on their own in the dark.

It gets stranger. Because of these rich marketplace dynamics, the different senses influence one another, changing the story of what is thought to be out there. What comes in through the eyes is not just the business of the visual system—the rest of the brain is invested as well. In the ventriloquist illusion, sound comes from one location (the ventriloquist’s mouth), but your eyes see a moving mouth in a different location (that of the ventriloquist’s dummy). Your brain concludes that the sound comes directly from the dummy’s mouth. Ventriloquists don’t “throw” their voice. Your brain does all of the work for them.