Gödel, Escher, Bach: An Eternal Golden Braid (84 page)

Read Gödel, Escher, Bach: An Eternal Golden Braid Online

Authors: Douglas R. Hofstadter

Tags: #Computers, #Art, #Classical, #Symmetry, #Bach; Johann Sebastian, #Individual Artists, #Science, #Science & Technology, #Philosophy, #General, #Metamathematics, #Intelligence (AI) & Semantics, #G'odel; Kurt, #Music, #Logic, #Biography & Autobiography, #Mathematics, #Genres & Styles, #Artificial Intelligence, #Escher; M. C

So what is to be done? There is no end in sight. It appears that

TNT

, even when extended ad infinitum, cannot be made complete.

TNT

is therefore said to suffer from

essential incompleteness

because the income-pleteness here is part and parcel of

TNT

; it is an essential part of the nature of

TNT

and cannot be eradicated in any way, whether simpleminded or ingenious. What's more, this problem will haunt any formal version of number theory, whether it is an extension of

TNT

, a modification of

TNT

, or an alternative to

TNT

. The fact of the matter is this: the possibility of constructing, in a given system, an undecidable string via Gödel’s selfreference method, depends on three basic conditions:

(1) That the system should be rich enough so that all desired statements about numbers, whether true or false, can be expressed in it. (Failure on this count means that the system is from the very start too weak to be counted as a rival to

TNT

, because it can't even express number-theoretical notions that

TNT

can.

In the metaphor of the Contracrosttpunctus, it is as if one did not have a phonograph but a refrigerator or some other kind of object.)

(2) That all general recursive relations should be represented by formulas in the system. (Failure on this count means the system fails to capture in a theorem some general recursive truth, which can only be considered a pathetic bellyflop if it is attempting to produce all of number theory's truths. In the Contracrostipunctus metaphor, this is like having a record player, but one of low fidelity.)

(3) That the axioms and typographical patterns defined by its rules be recognizable by some terminating decision procedure. (Failure on this count means that there is no method to distinguish valid derivations in the system from invalid ones-thus that the "formal system" is not formal after all, and in fact is not even well-defined. In the Contracrostipunctus metaphor, it is a phonograph which is still on the drawing board, only partially designed.)

Satisfaction of these three conditions guarantees that any consistent system will be incomplete, because Gödel’s construction is applicable.

The fascinating thing is that any such system digs its own hole; the system's own richness brings about its own downfall. The downfall occurs essentially because the system is powerful enough to have self-referential sentences. In physics, the notion exists of a "critical mass" of a fissionable substance, such as uranium. A solid lump of the substance will just sit there, if its mass is less than critical. But beyond the critical mass, such a lump will undergo a chain reaction, and blow up. It seems that with formal systems there is an analogous critical point. Below that point, a system is "harmless" and does not even approach defining arithmetical truth formally; but beyond the critical point, the system suddenly attains the capacity for self-reference, and thereby dooms itself to incompleteness. The threshold seems to be roughly when a system attains the three properties listed above.

Once this ability for self-reference is attained, the system has a hole which is tailor-made for itself; the hole takes the features of the system into account and uses them against the system.

The Passion According to Lucas

The baffling repeatability of the Gödel argument has been used by various people-notably J. R. Lucas-as ammunition in the battle to show that there is some elusive and ineffable quality to human intelligence, which makes it unattainable by "mechanical automata"-that is, computers. Lucas begins his article "Minds, Machines, and Gödel" with these words: Gödel’s theorem seems to me to prove that Mechanism is false, that is, that minds cannot be explained as machines.'

Then he proceeds to give an argument which, paraphrased, runs like this. For a computer to be considered as intelligent as a person is, it must be able to do every intellectual task which a person can do. Now Lucas claims that no computer can do

"Gödelization" (one of his amusingly irreverent terms) in the manner that people can.

Why not? Well, think of any particular formal system, such as

TNT

, or

TNT+G

, or even

TNT+G

.. One can write a computer program rather easily which will systematically generate theorems of that system, and in such a manner that eventually, any preselected theorem will be printed out. That is, the theorem-generating program won't skip any portion of the "space" of all theorems. Such a program would be composed of two major parts: (1) a subroutine which stamps out axioms, given the "molds" of the axiom schemas (if there are any), and (2) a subroutine which takes known theorems (including axioms, of course) and applies rules of inference to produce new theorems. The program would alternate between running first one of these subroutines, and then the other.

We can anthropomorphically say that this program "knows" some facts of number theory-namely, it knows those facts which it prints out. If it fails to print out some true fact of number theory, then of course it doesn't "know" that fact. Therefore, a computer program will be inferior to human beings if it can be shown that humans know something which the program cannot know. Now here is where Lucas starts rolling. He says that we humans can always do the Gödel trick on any formal system as powerful as TNT-and hence no matter what the formal system, we know more than it does. Now this may only sound like an argument about formal systems, but it can also be slightly modified so that it becomes, seemingly, an invincible argument against the possibility of Artificial Intelligence ever reproducing the human level of intelligence. Here is the gist of it: Rigid internal codes entirely rule computers and robots; ergo ...

Computers are isomorphic to formal systems. Now .. .

Any computer which wants to be as smart as we are has got to be able to do number theory as well as we can, so….

Among other things, it has to be able to do primitive recursive arithmetic. But for this very reason .. .

It is vulnerable to the Gödelian "hook", which implies that ...

We, with our human intelligence, can concoct a certain statement of number theory which is true, but the computer is blind to that statement's truth (i.e., will never print it out), precisely because of Gödel’s boomeranging argument.

This implies that there is one thing which computers just cannot be programmed to do, but which we can do. So we are smarter.

Let us enjoy, with Lucas, a transient moment of anthropocentric glory: However complicated a machine we construct, it will, if it is a machine, correspond to a formal system, which in turn will be liable to the Gödel procedure for finding a formula unprovable-in-that-system. This formula the machine will be unable to produce as being true, although a mind can see it is true. And so the machine will still not be an adequate model of the mind. We are trying to produce a model of the mind which is mechanical-which is essentially "dead"-but the mind, being in fact

"alive," can always go one better than any formal, ossified, dead system can. Thanks to Gödel’s theorem. the mind always has the last word.2

On first sight, and perhaps even on careful analysis, Lucas' argument appears compelling. It usually evokes rather polarized reactions. Some ;eize onto it as a nearly religious proof of the existence of souls, while others laugh it off as being unworthy of comment. I feel it is wrong, but Fascinatingly so-and therefore quite worthwhile taking the time to rebut. In fact, it was one of the major early forces driving me to think over the matters in this book. I shall try to rebut it in one way in this Chapter, and in ether ways in Chapter XVII.

We must try to understand more deeply why Lucas says the computer cannot be programmed to "know" as much as we do. Basically the idea is :hat we are always outside the system, and from out there we can always perform the "Gödelizing"

operation, which yields something which the program, from within, can't see is true. But why can't the "Gödelizing operator", as Lucas calls it, be programmed and added to the program as a third major component, Lucas explains:

The procedure whereby the Gödelian formula is constructed is a standard procedure-only so could we be sure that a Gödelian formula can be constructed for every formal system. But if it is a standard procedure, then a machine should be able to be programmed to carry it out too.... This would correspond to having a system with an additional rule of inference which allowed one to add, as a theorem, the Gödelian formula of the rest of' the formal system, and then the Gödelian formula of this new, strengthened, formal system, and so on. It would be tantamount to adding to the original formal system an infinite sequence of axioms, each the Gödelian formula of the system hitherto obtained… We might expect a mind, faced with a machine that possessed a Gödelizing operator, to take this into account, and

out-Gödel the new machine, Gödelizing operator and all. This has, in fact, proved to be the case. Even if we adjoin to a formal system the infinite set of axioms consisting of the successive Gödelian formulae, the resulting system is still incomplete, and contains a formula which cannot be proved-in-the system, although a rational being can, standing outside the system, see that it is true. We had expected this, for even if an infinite set of axioms were added, they would have to be specified by some finite rule or specification, and this further rule or specification could then be taken into account by a mind considering the enlarged formal system. In a sense, just because the mind has the last word, it can always pick a hole in any formal system presented to it as a model of its own workings.

The mechanical model must be, in some sense, finite and definite: and then the mind can always go one better.'

Jumping Up a Dimension

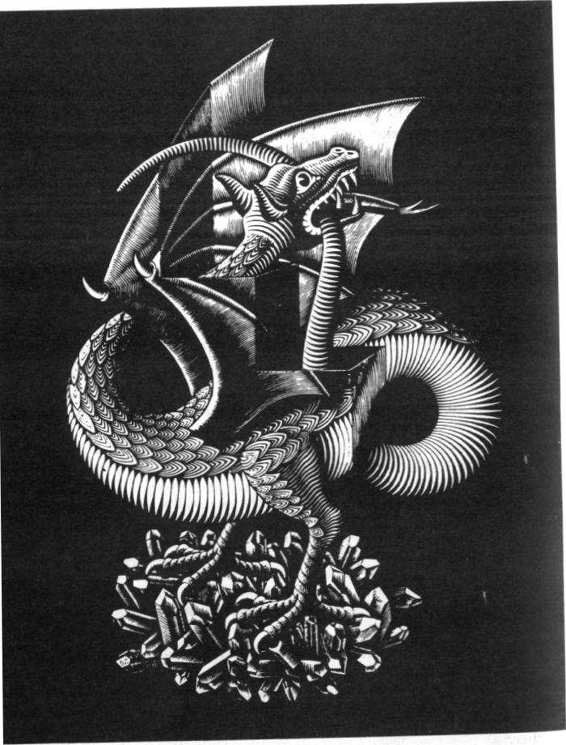

A visual image provided by M. C. Escher is extremely useful in aiding the intuition here: his drawing

Dragon

(Fig. 76). Its most salient feature is, of course, its subject matter-a dragon biting its tail, with all the Gödelian connotations which that carries. But there is a deeper theme to this picture. Escher himself wrote the following most interesting comments. The first comment is about a set of his drawings all of which are concerned with "the conflict between the flat and the spatial"; the second comment is about Dragon in particular.

I Our three-dimensional space is the only true reality we know. The two-dimensional is every bit as fictitious as the four-dimensional, for nothing is flat, not even the most finely polished mirror. And yet we stick to the convention that a wall or a piece of paper is flat, and curiously enough, we still go on, as we have done since time immemorial, producing illusions of space on just such plane surfaces as these. Surely it is a bit absurd to draw a few lines and then claim: "This is a house".

This odd situation is the theme of the next five pictures ( Including Dragon) II. However much this dragon tries to be spatial, he remains completely flat. Two incisions are made in the paper on which he is printed. Then it is folded in such a way as to leave two square openings. But this dragon is an obstinate beast, and in'

spite of his two dimensions he persists in assuming that he has three; so he sticks his head through one of the holes and his tail through the others 5

This second remark especially is a very telling remark. The message is that no matter how cleverly you try to simulate three dimensions in two, you are always missing some

"essence of three-dimensionality". The dragon tries very hard to fight his two-dimensionality. He defies the two-dimensionality of the paper on which he thinks he is drawn, by sticking his head through it; and yet all the while, we outside the drawing can see the pathetic futility of it all, for the dragon and the holes and the folds are all merely two-dimensional simulations of those concepts, and not a one of them is real. But the dragon cannot step out of his two-dimensional space, and cannot

FIGURE 76. Dragon,

by M. C. Escher (wood-engraving

, 1952).

know it as we do. We could, in fact, carry the Escher picture any number of steps further.

For instance, we could tear it out of the book, fold it, cut holes in it, pass it through itself, and photograph the whole mess, so that it again becomes two-dimensional. And to that photograph, we could once again do the same trick. Each time, at the instant that it becomes two- Matter how 'cleverly we seem to have simulated three dimensions inside two—it becomes vulnerable to being cut and folded again.