Emotional Design

Authors: Donald A. Norman

By continually being in communication with friends across a lifetime, across the world, we risk the paradox of enhancing shallow interactions at the expense of deep ones. Yes, we can hold continual, short interactions with numerous people, thus keeping friendships alive. But the more we hold short, brief, fleeting interactions and allow ourselves to interrupt ongoing conversations and interactions, the less we allow any depth of interaction, any depth to a relationship.

“Continuously divided attention” is the way Linda Stone has described this phenomenon, but no matter how we may deplore it, it has become a commonplace aspect of everyday life.

The Role of Design

Technology often forces us into situations where we can't live without the technology even though we may actively dislike its impact. Or we may love what the technology provides us while hating the frustrations encountered while trying to use it. Love and hate: two conflicting emotions, but commonly combined to form an enduring, if uncomfortable, relationship. These love-hate relationships can be amazingly stable.

Love-hate relationships offer promise, if only the hate can be dissipated, retaining the love. The designer has some power here, but only to a limited extent, for although some of the irritation and dislike is a result of inappropriate or impoverished design, much is a result of societal norms and standards, and these can only be changed by society itself.

Much of modern technology is really the technology of social interaction: it is the technology of trust and emotional bonds. But neither social interaction nor trust were designed into the technology or even thought through: they came about through happenstance, through the accidental byproducts of deployment. To the technologist, the technology provides a means of communication; for us, however, it provides a means for social interaction.

There is much that can be done to enhance these technologies. We have already seen that lack of trust comes about from lack of understanding, from situations where we feel out of control, unaware of what has happened, or why, or what we should do the next time. And we have seen how the unscrupulous, the thief and the terrorist can take advantage of the normal trust people have for one another, a trust that is essential if normal civilization is to exist.

In the case of the personal computer, the frustrations and irritations that lead to “computer rage” are indeed the domain of design. These are caused by design flaws that exacerbate the problems. Some have to do with the lack of reliability and bad programming, some with the lack of understanding of human needs, and some the lack of fit between the operation of the computer and the tasks that people wish to do. All these can be solved.

Today, communication seems always to be with us, whether we wish it to or not. Whether at work or play, school or home, we can make contact with others. Moreover, the distinctions among the various media are disappearing, as we send voice and text, words and images, music and video back and forth with increasing ease and frequency. When my friend in Japan uses his cell phone to take a photograph of his new grandchild and sends it to me in the United States, is this email, photography, or telephony?

The good news is that the new technologies enable us always to feel connected, to be able to share our thoughts and feelings no matter where we are, no matter what we are doing, independent of the time or time zone. The bad news is, of course, those very same things. If all my friends were always to keep in touch, there would be no time for anything else. Life would be filled with interruptions, twenty-four hours a day. Each interaction alone would be pleasant and rewarding, but the total impact would be overwhelming.

The problem, however, is that the ease of short, brief communication with friends around the world disrupts the normal, everyday social interaction. Here, the only hope is for a change in social acceptance. This can go in two directions. We could all come to accept the interruptions as a part of life, thinking nothing of it when the several members of a group continually enter their own private space to interact with others: friends, bosses, coworkers, family, or perhaps their video game, where their characters are in desperate need of help. The other direction is for people to learn to limit their interactions, to let the telephone take messages by text, video or voice, so that the calls can be returned at a convenient time. I can imagine solutions designed to help facilitate this, so that the technology within a telephone might negotiate with a caller, checking the calendars of each party and setting up a time to converse, all without bothering any of the individuals.

We need technologies that provide the rich power of interaction without the disruption: we need to regain control over our lives. Control, in fact, seems to be the common theme, whether it be to avoid the frustration, alienation, and anger we feel toward today's technologies, or to permit us to interact with others reliably, or to keep tight the bonds between us and our family, friends, and colleagues.

Not every interaction has to be done in real time, with participants interrupting one another, always available, always responding. The store-and-forward technologies (for example, email and voice mail) allow messages to be sent at the sender's convenience, but then listened to or viewed at the receiver's convenience. We need ways of intermixing the separate communication methods, so that we could choose mail, email, telephone, voice, or text as the occasion demands. People need also to set aside time when they can concentrate without interruption, so that they can stay focused.

Most of us already do this. We turn off our cell phones and deliberately do not carry them at times. We screen our telephone calls, not answering unless we see, or hear, that it is from someone we really wish to speak to. We go away to private locations, the better to write, think, or simply relax.

Today, the technologies are struggling to ensure their ubiquitous presence, so that no matter where we are, no matter what we are doing, they are available. That is fine, as long as the choice of whether to use them remains with the individual at the receiving end. I have great faith in society. I believe we will come to a sensible accommodation with these technologies. In the early years of any technology, the potential applications are matched by the all-too-apparent drawbacks, yielding the love-hate relationship so common with new technologies. Love for the potential, hate for the actuality. But with time, with improved design of both the technology and the manner in which it is used, it is possible to minimize the hate and transform the relationship to one of love.

CHAPTER SIX

Emotional Machines

Dave, stop . . . Stop, will you . . . Stop, Dave . . . Will you stop, Dave . . . Stop, Dave . . . I'm afraid. I'm afraid . . . I'm afraid, Dave . . . Dave . . . My mind is going . . . I can feel it . . . I can feel it . . . My mind is going . . . There is no question about it . . . I can feel it . . . I can feel it . . . I'm a . . . fraid.

HAL, the all-powerful computer, in the movie 2001

HAL IS CORRECT TO BE AFRAID: Dave is about to shut him down by dismantling his parts. Of course, Dave is afraid, too: HAL has killed all the other crew of the spacecraft and made an unsuccessful attempt on Dave's life.

But why and how does HAL have fear? Is it real fear? I suspect not. HAL correctly diagnoses Dave's intent: Dave wants to kill him. So fear, being afraid, is a logical response to the situation. But human emotions have more than a logical, rational component; they are tightly coupled to behavior and feelings. Were HAL a human, he would fight hard to prevent his death, slam some doors, do something to survive. He could threaten, “Kill me and you will die, too, as soon as the air in your backpack runs out.” But HAL doesn't do any of this; he simply states, as a fact, “I'm afraid.” HAL has an intellectual knowledge of what it means to be afraid, but it isn't coupled to feelings or to action: it isn't real emotion.

But why would HAL need real emotions to function? Our machines today don't need emotions. Yes, they have a reasonable amount of intelligence. But emotions? Nope. But future machines will need emotions for the same reasons people do: The human emotional system plays an essential role in survival, social interaction and cooperation, and learning. Machines will need a form of emotion (machine emotion) when they face the same conditions, when they must operate continuously without any assistance from people in the complex, ever-changing world where new situations continually arise. As machines become more and more capable, taking on many of our roles, designers face the complex task of deciding just how they shall be constructed, just how they will interact with one another and with people. Thus, for the same reason that animals and people have emotions, I believe that machines will also need them. They won't be human emotions, mind you, but rather emotions that fit the needs of the machines themselves.

Robots already exist. Most are fairly simple automated arms and tools in factories, but they are increasing in power and capabilities, branching out to a much wider array of activities and places. Some do useful jobs, as do the lawn-mowing and vacuum-cleaning robots that already exist. Some, such as the surrogate pets, are playful. Some simple robots are being used for dangerous jobs, such as fire fighting, search-and-rescue missions, or for military purposes. Some robots even deliver mail, dispense medicine, and take on other relatively simple tasks. As robots become more advanced, they will need only the simplest of emotions, starting with such practical ones as visceral-like fear of heights or concern about bumping into things. Robot pets will have playful, engaging personalities. With time, as these robots gain in capability, they will come to possess full-fledged emotions: fear and anxiety when in dangerous situations, pleasure when accomplishing a desired goal, pride in the quality of their work, and subservience and obedience to their owners. Because many of these robots will work in the home environment, interacting with people and other household robots, they will need to display their emotions, to have something analogous to facial expressions and body language.

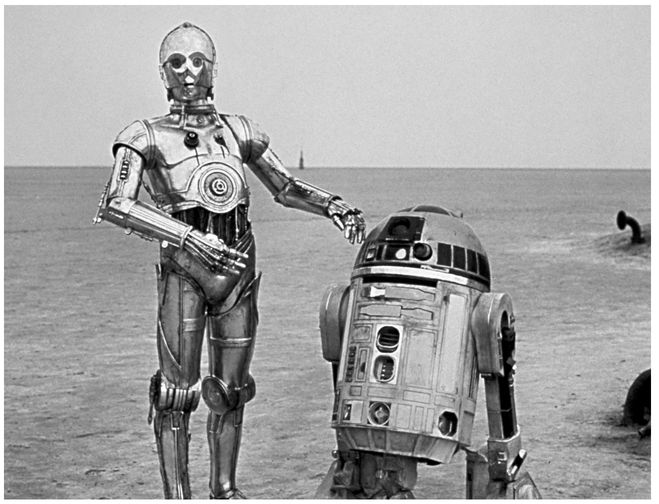

Both are remarkably expressive despite R2D2's lack of body and facial structure.

(Courtesy of Lucasfilm Ltd.)

Facial expressions and body language are part of the “system image” of a robot, allowing the people with whom it interacts to have a better conceptual model of its operation. When we interact with other people, their facial expressions and body language let us know if they understand us, if they are puzzled, and if they are in agreement. We can tell when people are having difficulty by their expressions. The same sort of nonverbal feedback will be invaluable when we interact with robots: Do the robots understand their instructions? When are they working hard at the task? When are they being successful? When are they having difficulties? Emotional expressions will let us know their motivations and desires, their accomplishments and frustrations, and thus will increase our satisfaction and understanding of the robots: we will be able to tell what they are capable of and what they aren't.

Finding the right mix of emotions and intelligence is not easy. The two robots from the Star Wars films, R2D2 and C3PO, act like machines we might enjoy having around the house. I suspect that part of their charm is in the way they display their limitations. C3PO is a clumsy, well-meaning oaf, pretty incompetent at all tasks except the one for which he is a specialist: translating languages and machine communication. R2D2 is designed for interacting with other machines and has limited physical capabilities. It has to rely upon C3PO to talk with people.

R2D2 and C3PO show their emotions well, letting the screen characters, and the movie audience, understand, empathize with, and, at times, get annoyed with them. C3PO has a humanlike form, so he can show facial expressions and body motions: he does a lot of hand wringing and body swaying. R2D2 is more limited, but nonetheless very expressive, showing how able we are to impute emotions when all we can see is a head shaking, the body moving back and forth, and some cute but unintelligible sounds. Through the skills of the moviemakers, the conceptual models underlying R2D2 and C3PO are quite visible. Thus, people always have a pretty accurate understanding of their strengths and weaknesses, which make them enjoyable and effective.

Movie robots haven't always fared well. Notice what happened to two movie robots: HAL, of the movie 2001, and David, of the movie AI (Artificial Intelligence). HAL is afraid, as the opening quotation of this chapter illustrates, and properly so. He is being taken apart: basically, being murdered.

David is a robot built to be a surrogate child, taking the place of a real child in a household. David is sophisticated, but a little too perfect. According to the story, David is the very first robot to have “unconditional love.” But this is not a true love. Perhaps because it is “unconditional,” it seems artificial, overly strong, and unaccompanied by the normal human array of emotional states. Normal children may love their parents, but they also go through stages of dislike, anger, envy, disgust, and just plain indifference toward them. David does not exhibit any of these feelings. David's pure love means a happy devoted child, following his mother's footsteps, quite literally, every second of the day. This behavior is so irritating that he is finally abandoned by his foster mother, left in the wilderness, and told not to come back.
