Bad Science (18 page)

Authors: Ben Goldacre

Tags: #General, #Life Sciences, #Health & Fitness, #Errors, #Health Care Issues, #Essays, #Scientific, #Science

Publication bias is common, and in some fields it is more rife than in others. In 1995, only 1 percent of all articles published in alternative medicine journals gave a negative result. The most recent figure is 5 percent negative. This is very, very low, although to be fair, it could be worse. A review in 1998 looked at the entire canon of Chinese medical research and found that not one single negative trial had ever been published. Not one. You can see why I use CAM as a simple teaching tool for evidence-based medicine.

Generally the influence of publication bias is more subtle, and you can get a hint that publication bias exists in a field by doing something very clever called a funnel plot. This requires, only briefly, that you pay attention.

If there are lots of trials on a subject, then quite by chance they all will give slightly different answers, but you would expect them all to cluster fairly equally around the true answer. You would also expect that the bigger studies, with more participants in them, and with better methods, would be more closely clustered around the correct answer than the smaller studies; the smaller studies, meanwhile, will be all over the shop, unusually positive and negative at random, because in a study with, say, twenty patients, you need only three freak results to send the overall conclusions right off.
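To put rough numbers on why small trials scatter so widely, here is a minimal sketch. It assumes a treatment with a true success rate of 50 percent (a figure chosen purely for illustration, not taken from any trial) and uses the textbook standard error of a proportion to show how far a trial's result typically lands from the truth at different sizes.

```python
import math

def standard_error(p, n):
    """Standard error of an observed proportion p in a trial of n patients."""
    return math.sqrt(p * (1 - p) / n)

TRUE_RATE = 0.5  # assumed true success rate, for illustration only

for n in (20, 200, 2000):
    se = standard_error(TRUE_RATE, n)
    print(f"n={n:5d}  typical error = +/-{se:.3f}")

# Three freak results move the observed rate by 3/n:
# a lot in a trial of twenty, almost nothing in a trial of two thousand.
shift_small = 3 / 20
shift_large = 3 / 2000
print(f"shift from 3 freak results: {shift_small:.4f} (n=20), {shift_large:.4f} (n=2000)")
```

The typical error shrinks with the square root of the trial size, which is why the big trials huddle together and the small ones wander.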

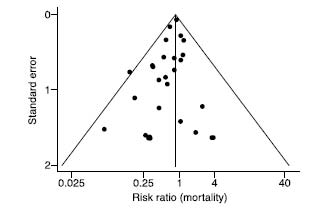

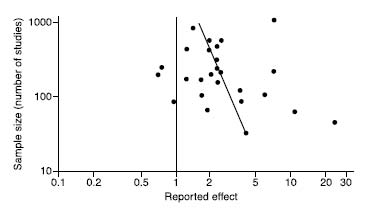

A funnel plot is a clever way of graphing this (as demonstrated by the graph shown here). You put the effect (i.e., how effective the treatment is) on the x-axis, from left to right. Then, on the y-axis (top to bottom, for those of you who cut math) you put how big the trial was, or some other measure of how accurate it was. If there is no publication bias, you should see a nice inverted funnel. The big, accurate trials all cluster around one another at the top of the funnel, and then, as you go down the funnel, the little, inaccurate trials gradually spread out to the left and right, as they become more and more wildly inaccurate—both positively and negatively.
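The funnel shape can be reproduced with a small simulation. What follows is a sketch under stated assumptions, not an analysis of real trials: the true effect (0.1), the four trial sizes, the noise model, and the `drop_small_negatives` filter are all invented for illustration. It measures how widely the results spread at each trial size, and then shows what happens to the small trials when the negative ones are left in a drawer.

```python
import random
import statistics

def simulate_trial(n, true_effect, rng):
    """Observed effect of one trial: the mean of n noisy patient outcomes."""
    outcomes = [rng.gauss(true_effect, 1.0) for _ in range(n)]
    return statistics.fmean(outcomes)

def simulate_field(num_trials=400, true_effect=0.1, seed=0):
    """Return (size, observed_effect) pairs for a whole field of trials."""
    rng = random.Random(seed)
    sizes = [20, 50, 200, 1000]  # assumed trial sizes
    trials = []
    for _ in range(num_trials):
        n = rng.choice(sizes)
        trials.append((n, simulate_trial(n, true_effect, rng)))
    return trials

def spread_by_size(trials):
    """Standard deviation of observed effects, grouped by trial size."""
    grouped = {}
    for n, effect in trials:
        grouped.setdefault(n, []).append(effect)
    return {n: statistics.pstdev(effects) for n, effects in sorted(grouped.items())}

def drop_small_negatives(trials, small=50):
    """Hypothetical bottom drawer: small trials with a negative result vanish."""
    return [(n, e) for n, e in trials if n > small or e > 0]

def mean_small_effect(trials, small=50):
    """Average observed effect among the small trials only."""
    return statistics.fmean([e for n, e in trials if n <= small])

trials = simulate_field()
published = drop_small_negatives(trials)

for n, sd in spread_by_size(trials).items():
    print(f"n={n:4d}  spread of results = {sd:.3f}")
print(f"small trials, all:       {mean_small_effect(trials):+.3f}")
print(f"small trials, published: {mean_small_effect(published):+.3f}")
```

Plotting each pair with the effect on the x-axis and the trial size on the y-axis gives the funnel for `trials`; doing the same for `published` gives a funnel with one bottom corner missing.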

If there is publication bias, however, the results will be skewed. The smaller, more rubbish negative trials seem to be missing, because they were ignored—nobody had anything to lose by letting these tiny, unimpressive trials sit in their bottom drawer, and so only the positive ones were published. Not only has publication bias been demonstrated in many fields of medicine, but a paper has even found evidence of publication bias in studies of publication bias. The graph below is the funnel plot for that paper. This is what passes for humor in the world of evidence-based medicine.

The most heinous recent case of publication bias has been in the area of SSRI antidepressant drugs, as has been shown in various papers. A group of academics published a paper in The New England Journal of Medicine at the beginning of 2008 that listed all the trials on SSRIs that had ever been formally registered with the FDA, and examined the same trials in the academic literature. Thirty-seven studies were assessed by the FDA as positive: with one exception, every single one of those positive trials was properly written up and published. Meanwhile, twenty-two studies that had negative or iffy results were simply not published at all, and eleven were written up and published in a way that described them as having a positive outcome.
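The arithmetic behind those figures is worth doing once. This sketch uses only the counts quoted in the passage above; it does not include any negative trials that were published as negative, because the passage gives no number for them, so the totals here cover the named categories only.

```python
# Counts quoted in the passage above.
fda_positive = 37                     # trials the FDA judged positive
positive_unpublished = 1              # the one exception
negative_unpublished = 22             # negative or iffy, never published
negative_published_as_positive = 11   # negative or iffy, written up as positive

# What a journal reader sees as positive.
looks_positive_in_print = (
    (fda_positive - positive_unpublished) + negative_published_as_positive
)

# What the FDA saw, restricted to the categories named in the passage.
trials_named_here = (
    fda_positive + negative_unpublished + negative_published_as_positive
)
fda_positive_share = fda_positive / trials_named_here

print(f"positive-looking papers in print: {looks_positive_in_print}")
print(f"trials in the named categories:   {trials_named_here}")
print(f"share the FDA judged positive:    {fda_positive_share:.0%}")
```

On these counts, forty-seven trials read as positive in the journals, while the FDA judged only about half of the seventy trials in these categories to be positive.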

This is more than cheeky. Doctors need reliable information if they are to make helpful and safe decisions about prescribing drugs to their patients. Depriving them of this information, and deceiving them, are a major moral crime. If I weren’t writing a light and humorous book about science right now, I would descend into gales of rage.

Duplicate Publication

Drug companies can go one better than neglecting negative studies. Sometimes, when they get positive results, instead of just publishing them once, they publish them several times, in different places, in different forms, so that it looks as if there were lots of different positive trials. This is particularly easy if you’ve performed a large “multicenter” trial, because you can publish overlapping bits and pieces from each center separately or in different permutations. It’s also a very clever way of kludging the evidence, because it’s almost impossible for the reader to spot.

A classic piece of detective work was performed in this area by a vigilant anesthetist from Oxford named Martin Tramer, who was looking at the efficacy of a nausea drug called ondansetron. He noticed that lots of the data in a meta-analysis he was doing seemed to be replicated; the results for many individual patients had been written up several times, in slightly different forms, in apparently different studies, in different journals. Crucially, data that showed the drug in a better light was more likely to be duplicated than the data that showed it to be less impressive, and overall, this led to a 23 percent overestimate of the drug’s efficacy.
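The mechanism is easy to see with made-up numbers. The sketch below is not the ondansetron data: the four trials, their benefit figures, and the trial identifiers are hypothetical, and the pooling is a plain unweighted average rather than a proper meta-analysis. It simply counts the two flattering trials twice and compares the pooled result with and without the duplicates.

```python
from statistics import fmean

# Hypothetical reports: (underlying_trial_id, observed_benefit). Not real data.
reports = [
    ("A", 0.30), ("A", 0.30),  # flattering trial, written up twice
    ("B", 0.25), ("B", 0.25),  # flattering trial, written up twice
    ("C", 0.10),
    ("D", 0.05),
]

def naive_pooled(reports):
    """Average every report as if each were a separate trial."""
    return fmean([benefit for _, benefit in reports])

def deduplicated_pooled(reports):
    """Average one result per underlying trial."""
    unique = {}
    for trial_id, benefit in reports:
        unique.setdefault(trial_id, benefit)
    return fmean(list(unique.values()))

naive = naive_pooled(reports)
honest = deduplicated_pooled(reports)
overestimate = naive / honest - 1

print(f"pooled with duplicates:    {naive:.3f}")
print(f"pooled without duplicates: {honest:.3f}")
print(f"overestimate:              {overestimate:.0%}")
```

With these invented figures the duplicates inflate the pooled benefit by about 19 percent. The hard part in real life is the step the code takes for granted: knowing which reports share an underlying trial.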

Hiding Harm

That’s how drug companies dress up the positive results. What about the darker, more headline-grabbing side, where they hide the serious harms?

Side effects are a fact of life: they need to be accepted, managed in the context of benefits, and carefully monitored, because the unintended consequences of interventions can be extremely serious. The stories that grab the headlines are ones in which there is foul play, or a cover-up, but in fact, important findings can also be missed for much more innocent reasons, like the normal human processes of accidental neglect in publication bias or because the worrying findings are buried from view in the noise of the data.

Antiarrhythmic drugs are an interesting example. People who have heart attacks get irregular heart rhythms fairly commonly (because bits of the timekeeping apparatus in the heart have been damaged), and they also commonly die from them. Antiarrhythmic drugs are used to treat and prevent irregular rhythms in people who have them. Why not, thought doctors, just give them to everyone who has had a heart attack? It made sense on paper, the drugs seemed safe, and nobody knew at the time that they would actually increase the risk of death in this group—because that didn’t make sense from the theory (like with antioxidants). But they do, and at the peak of their use in the 1980s, antiarrhythmic drugs were causing comparable numbers of deaths to the total number of Americans who died in the Vietnam War. Information that could have helped avert this disaster was sitting, tragically, in a bottom drawer, as a researcher later explained: “When we carried out our study in 1980 we thought that the increased death rate…was an effect of chance…The development of [the drug] was abandoned for commercial reasons, and so this study was therefore never published; it is now a good example of ‘publication bias.’ The results described here…might have provided an early warning of trouble ahead.”

That was neglect, and wishful thinking. But sometimes it seems that dangerous effects from drugs can be either deliberately downplayed or, worse than that, simply not published. There has been a string of major scandals from the pharmaceutical industry recently, in which it seems that evidence of harm for drugs including Vioxx and the SSRI antidepressants has gone missing in action. It didn’t take long for the truth to out, and anybody who claims that these issues have been brushed under the medical carpet is simply ignorant. They were dealt with, you’ll remember, in the three highest-ranking papers in the British Medical Journal’s archive. They are worth looking at again, in more detail.

Vioxx

Vioxx was a painkiller developed by the Merck company and approved by the American FDA in 1999. Many painkillers can cause gut problems—ulcers and more—and the hope was that this new drug might not have such side effects. This was examined in a trial called VIGOR, comparing Vioxx with an older drug, naproxen, and a lot of money was riding on the outcome. The trial had mixed results. Vioxx was no better at relieving the symptoms of rheumatoid arthritis, but it did halve the risk of gastrointestinal events, which was excellent news. But an increased risk of heart attacks was also found.

When the VIGOR trial was published, however, this cardiovascular risk was hard to see. There was an “interim analysis” for heart attacks and ulcers, in which ulcers were counted for longer than heart attacks. It wasn’t described in the publication, and it overstated the advantage of Vioxx regarding ulcers, while understating the increased risk of heart attacks. “This untenable feature of trial design,” said an unusually punishing editorial in The New England Journal of Medicine, “which inevitably skewed the results, was not disclosed to the editors or the academic authors of the study.” Was it a problem? Yes. For one thing, three additional myocardial infarctions occurred in the Vioxx group in the month after the researchers stopped counting, while none occurred in the naproxen control group.

An internal memo from Edward Scolnick, the company’s chief scientist, shows that Merck knew about this cardiovascular risk (“It is a shame but it is a low incidence and it is mechanism based as we worried it was”). The New England Journal of Medicine was not impressed, publishing a pair of spectacularly critical editorials.

The worrying excess of heart attacks was only really picked up by people examining the FDA data, something that doctors tend, of course, not to do, as they read academic journal articles at best. In an attempt to explain the moderate extra risk of heart attacks that could be seen in the final paper, the authors proposed something called the naproxen hypothesis: Vioxx wasn’t causing heart attacks, they suggested, but naproxen was preventing them. There is no accepted evidence that naproxen has a strong protective effect against heart attacks.

The internal memo, discussed at length in the coverage of the case, suggested that the company was concerned at the time. Eventually more evidence of harm emerged. Vioxx was taken off the market in 2004, but analysts from the FDA estimated that it had caused between 88,000 and 139,000 heart attacks, 30 to 40 percent of which were probably fatal, in its five years on the market. It’s hard to be sure if that figure is reliable, but when you look at the pattern of how the information came out, it’s certainly felt, very widely, that both Merck and the FDA could have done much more to mitigate the damage done over the many years of this drug’s life span, after the concerns were apparent to them. Data in medicine is important; it means lives. Merck has not admitted liability and has proposed a $4.85 billion settlement in the United States.

Authors Forbidden to Publish Data

This all seems pretty bad. Which researchers are doing it, and why can’t we stop them? Some, of course, are mendacious. But many have been bullied or pressured not to reveal information about the trials they have performed, funded by the pharmaceutical industry.

Here are two extreme examples of what is, tragically, a fairly common phenomenon. In 2000, a U.S. company filed a claim against both the lead investigators and their universities in an attempt to block publication of a study on an HIV vaccine that found the product was no better than placebo. The investigators felt they had to put patients before the product. The company felt otherwise. The results were published in JAMA that year.

In the second example, Nancy Olivieri, director of the Toronto Hemoglobinopathy Program, was conducting a clinical trial on deferiprone, a drug that removes excess iron from the bodies of patients who become iron overloaded after many blood transfusions. She was concerned when she saw that iron concentrations in the liver seemed to be poorly controlled in some of the patients, exceeding the safety threshold for increased risk of cardiac disease and early death. More extended studies suggested that deferiprone might accelerate the development of hepatic fibrosis.

The drug company, Apotex, threatened Olivieri, repeatedly and in writing, that if she published her findings and concerns, it would take legal action against her. With great courage—and, shamefully, without the support of her university—Olivieri presented her findings at several scientific meetings and in academic journals. She believed she had a duty to disclose her concerns, regardless of the personal consequences. It should never have been necessary for her to make that decision.