A Briefer History of Time (9 page)

The leading candidate for the next supernova explosion in our galaxy is a star called Rho Cassiopeiae. Fortunately, it is a safe and comfortable ten thousand light-years from us. It is in a class of stars known as yellow hypergiants, one of only seven known yellow hypergiants in the Milky Way. An international team of astronomers began to study this star in 1993. In the next few years they observed it undergoing periodic temperature fluctuations of a few hundred degrees. Then in the summer of 2000, its temperature suddenly plummeted from around 7,000 degrees to 4,000 degrees Celsius. During that time, they also detected titanium oxide in the star’s atmosphere, which they believe is part of an outer layer thrown off from the star by a massive shock wave.

In a supernova, some of the heavier elements produced near the end of the star’s life are flung back into the galaxy and provide some of the raw material for the next generation of stars. Our own sun contains about 2 percent of these heavier elements. It is a second- or third-generation star, formed some five billion years ago out of a cloud of rotating gas containing the debris of earlier supernovas. Most of the gas in that cloud went to form the sun or got blasted away, but small amounts of the heavier elements collected together to form the bodies that now orbit the sun as planets like the earth. The gold in our jewelry and the uranium in our nuclear reactors are both remnants of the supernovas that occurred before our solar system was born!

When the earth was newly condensed, it was very hot and without an atmosphere. In the course of time, it cooled and acquired an atmosphere from the emission of gases from the rocks. This early atmosphere was not one in which we could have survived. It contained no oxygen, but it did contain a lot of other gases that are poisonous to us, such as hydrogen sulfide (the gas that gives rotten eggs their smell). There are, however, other primitive forms of life that can flourish under such conditions. It is thought that they developed in the oceans, possibly as a result of chance combinations of atoms into large structures, called macromolecules, that were capable of assembling other atoms in the ocean into similar structures. They would thus have reproduced themselves and multiplied. In some cases there would be errors in the reproduction. Mostly these errors would have been such that the new macromolecule could not reproduce itself and eventually would have been destroyed. However, a few of the errors would have produced new macromolecules that were even better at reproducing themselves. They would have therefore had an advantage and would have tended to replace the original macromolecules. In this way a process of evolution was started that led to the development of more and more complicated, self-reproducing organisms. The first primitive forms of life consumed various materials, including hydrogen sulfide, and released oxygen. This gradually changed the atmosphere to the composition that it has today, and allowed the development of higher forms of life such as fish, reptiles, mammals, and ultimately the human race.

The twentieth century saw man’s view of the universe transformed: we realized the insignificance of our own planet in the vastness of the universe, and we discovered that time and space were curved and inseparable, that the universe was expanding, and that it had a beginning in time.

The picture of a universe that started off very hot and cooled as it expanded was based on Einstein’s theory of gravity, general relativity. That it is in agreement with all the observational evidence that we have today is a great triumph for that theory. Yet because mathematics cannot really handle infinite numbers, by predicting that the universe began with the big bang, a time when the density of the universe and the curvature of space-time would have been infinite, the theory of general relativity predicts that there is a point in the universe where the theory itself breaks down, or fails. Such a point is an example of what mathematicians call a singularity. When a theory predicts singularities such as infinite density and curvature, it is a sign that the theory must somehow be modified. General relativity is an incomplete theory because it cannot tell us how the universe started off.

In addition to general relativity, the twentieth century also spawned another great partial theory of nature, quantum mechanics. That theory deals with phenomena that occur on very small scales. Our picture of the big bang tells us that there must have been a time in the very early universe when the universe was so small that, even when studying its large-scale structure, it was no longer possible to ignore the small-scale effects of quantum mechanics. We will see in the next chapter that our greatest hope for obtaining a complete understanding of the universe from beginning to end arises from combining these two partial theories into a single quantum theory of gravity, a theory in which the ordinary laws of science hold everywhere, including at the beginning of time, without the need for there to be any singularities.

9

QUANTUM GRAVITY

THE SUCCESS OF SCIENTIFIC THEORIES, particularly Newton’s theory of gravity, led the Marquis de Laplace at the beginning of the nineteenth century to argue that the universe was completely deterministic. Laplace believed that there should be a set of scientific laws that would allow us—at least in principle—to predict everything that would happen in the universe. The only input these laws would need is the complete state of the universe at any one time. This is called an initial condition or a boundary condition. (A boundary can mean a boundary in space or time; a boundary condition in space is the state of the universe at its outer boundary—if it has one.) Based on a complete set of laws and the appropriate initial or boundary condition, Laplace believed, we should be able to calculate the complete state of the universe at any time.

The requirement of initial conditions is probably intuitively obvious: different states of being at present will obviously lead to different future states. The need for boundary conditions in space is a little more subtle, but the principle is the same. The equations on which physical theories are based can generally have very different solutions, and you must rely on the initial or boundary conditions to decide which solutions apply. It’s a little like saying that your bank account has large amounts going in and out of it. Whether you end up bankrupt or rich depends not only on the sums paid in and out but also on the boundary or initial condition of how much was in the account to start with.
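The bank account analogy can be sketched in a few lines of Python. The transaction amounts and starting balances below are invented purely for illustration; the only point is that the same rule, applied to the same transactions, gives different outcomes from different initial conditions.

```python
def final_balance(initial_balance, transactions):
    """Apply one sequence of deposits (+) and withdrawals (-) to a starting balance."""
    balance = initial_balance
    for amount in transactions:
        balance += amount
    return balance


# Hypothetical sums paid in and out: identical in both scenarios.
transactions = [+5_000, -12_000, +3_000, -4_000]

# Only the initial condition differs.
rich_start = final_balance(20_000, transactions)
poor_start = final_balance(1_000, transactions)

print(rich_start)  # 12000
print(poor_start)  # -7000
```

The "law" (add each transaction in turn) is the same in both runs; which solution you end up with is fixed entirely by the starting balance.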

If Laplace were right, then, given the state of the universe at the present, these laws would tell us the state of the universe in both the future and the past. For example, given the positions and speeds of the sun and the planets, we can use Newton’s laws to calculate the state of the solar system at any later or earlier time. Determinism seems fairly obvious in the case of the planets—after all, astronomers are very accurate in their predictions of events such as eclipses. But Laplace went further to assume that there were similar laws governing everything else, including human behavior.
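A rough sketch of what such a calculation looks like for a single planet is given below. It is an illustration under stated assumptions, not a method taken from the text: the sun is held fixed at the origin, the orbit is two-dimensional, the starting values are rounded figures for the earth, and the function names (`acceleration`, `step`, `evolve`) and the choice of a velocity Verlet integrator are hypothetical choices made for this example. The sketch steps the planet forward in time and then runs the same law backward to recover the starting state.

```python
GM_SUN = 1.32712440018e20  # gravitational parameter of the sun, m^3/s^2


def acceleration(x, y):
    """Newtonian gravitational acceleration toward a sun fixed at the origin."""
    r_cubed = (x * x + y * y) ** 1.5
    return -GM_SUN * x / r_cubed, -GM_SUN * y / r_cubed


def step(state, dt):
    """Advance (x, y, vx, vy) by dt using velocity Verlet (kick, drift, kick)."""
    x, y, vx, vy = state
    ax, ay = acceleration(x, y)
    vx += 0.5 * dt * ax
    vy += 0.5 * dt * ay
    x += dt * vx
    y += dt * vy
    ax, ay = acceleration(x, y)
    vx += 0.5 * dt * ax
    vy += 0.5 * dt * ay
    return (x, y, vx, vy)


def evolve(state, dt, n_steps):
    """Apply step() n_steps times; a negative dt runs the clock backward."""
    for _ in range(n_steps):
        state = step(state, dt)
    return state


# Assumed initial condition: roughly the earth's distance (m) and orbital speed (m/s).
start = (1.496e11, 0.0, 0.0, 2.978e4)

one_hour = 3600.0
n_steps = 24 * 100  # one hundred days in hourly steps

future = evolve(start, one_hour, n_steps)    # state 100 days later
past = evolve(future, -one_hour, n_steps)    # run the same law backward

print("100 days later:", future)
print("recovered start:", past)
```

Running the law with a negative time step from the later state returns the original position and velocity to within floating-point rounding, which is the sense in which the present state fixes both the future and the past.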

Is it really possible for scientists to calculate what all our actions will be in the future? A glass of water contains more than 10²⁴ molecules (a 1 followed by twenty-four zeros). In practice we can never hope to know the state of each of these molecules, much less the complete state of the universe or even of our bodies. Yet to say that the universe is deterministic means that even if we don’t have the brainpower to do the calculation, our futures are nevertheless predetermined.

This doctrine of scientific determinism was strongly resisted by many people, who felt that it infringed God’s freedom to make the world run as He saw fit. But it remained the standard assumption of science until the early years of the twentieth century. One of the first indications that this belief would have to be abandoned came when the British scientists Lord Rayleigh and Sir James Jeans calculated the amount of blackbody radiation that a hot object such as a star must radiate. (As noted in Chapter 7, any material body, when heated, will give off blackbody radiation.)

According to the laws we believed at the time, a hot body ought to give off electromagnetic waves equally at all frequencies. If this were true, then it would radiate an equal amount of energy in every color of the spectrum of visible light, and for all frequencies of microwaves, radio waves, X-rays, and so on. Recall that the frequency of a wave is the number of times per second that the wave oscillates up and down, that is, the number of waves per second. Mathematically, for a hot body to give off waves equally at all frequencies means that a hot body should radiate the same amount of energy in waves with frequencies between zero and one million waves per second as it does in waves with frequencies between one million and two million waves per second, two million and three million waves per second, and so forth, going on forever. Let’s say that one unit of energy is radiated in waves with frequencies between zero and one million waves per second, and in waves with frequencies between one million and two million waves per second, and so on. The total amount of energy radiated in all frequencies would then be the sum 1 plus 1 plus 1 plus ... going on forever. Since the number of waves per second in a wave is unlimited, the sum of energies is an unending sum. According to this reasoning, the total energy radiated should be infinite.

In order to avoid this obviously ridiculous result, the German scientist Max Planck suggested in 1900 that light, X-rays, and other electromagnetic waves could be given off only in certain discrete packets, which he called quanta. Today, as mentioned in Chapter 8, we call a quantum of light a photon. The higher the frequency of light, the greater its energy content. Therefore, though photons of any given color or frequency are all identical, Planck’s theory states that photons of different frequencies are different in that they carry different amounts of energy. This means that in quantum theory the faintest light of any given color—the light carried by a single photon—has an energy content that depends upon its color. For example, since violet light has twice the frequency of red light, one quantum of violet light has twice the energy content of one quantum of red light. Thus the smallest possible bit of violet light energy is twice as large as the smallest possible bit of red light energy.
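In symbols, the relation between a photon's energy and its frequency is usually written as below. The symbols $E$ (energy of one quantum), $h$ (Planck's constant), and $f$ (frequency) are standard notation rather than anything introduced in the text, and the factor of two is the text's own round figure for violet versus red light.

```latex
E = h f
\qquad\Longrightarrow\qquad
\frac{E_{\text{violet}}}{E_{\text{red}}}
  = \frac{h\, f_{\text{violet}}}{h\, f_{\text{red}}}
  = \frac{f_{\text{violet}}}{f_{\text{red}}}
  \approx 2
```

Because $h$ cancels in the ratio, the comparison between two colors depends only on their frequencies.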

How does this solve the blackbody problem? The smallest amount of electromagnetic energy a blackbody can emit in any given frequency is that carried by one photon of that frequency. The energy of a photon is greater at higher frequencies. Thus the smallest amount of energy a blackbody can emit is higher at higher frequencies. At high enough frequencies, the amount of energy in even a single quantum will be more than a body has available, in which case no light will be emitted, ending the previously unending sum. Thus in Planck’s theory, the radiation at high frequencies would be reduced, so the rate at which the body lost energy would be finite, solving the blackbody problem.
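The cutoff can be made concrete with a small numerical sketch. It relies on the standard textbook expression for the average energy a hot body puts into radiation at a single frequency, which does not appear in the text: the classical value is the same at every frequency, while the quantum value falls away once one photon costs more than the body's typical thermal energy. The temperature of 6,000 kelvin, the sample frequencies, and the function names are assumptions chosen for illustration.

```python
import math

H = 6.62607015e-34   # Planck's constant, joule-seconds
K_B = 1.380649e-23   # Boltzmann's constant, joules per kelvin


def classical_energy(temperature):
    """Classical average energy per mode: the same at every frequency."""
    return K_B * temperature


def planck_energy(frequency, temperature):
    """Planck's average energy per mode: h*f / (exp(h*f / (k*T)) - 1)."""
    x = H * frequency / (K_B * temperature)
    if x == 0.0:
        return K_B * temperature  # limiting value as the frequency goes to zero
    if x > 700.0:
        return 0.0                # exp(x) would overflow; the true value is negligible
    return H * frequency / math.expm1(x)


temperature = 6000.0  # assumed: roughly the surface temperature of a sunlike star

for frequency in (1e12, 1e14, 1e15, 1e16):
    ratio = planck_energy(frequency, temperature) / classical_energy(temperature)
    print(f"{frequency:.0e} waves per second: quantum/classical = {ratio:.3e}")
```

At low frequencies the two values nearly agree, so Planck's theory reproduces the classical picture there. At high frequencies the ratio collapses toward zero, which is what turns the unending sum into a finite one.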

The quantum hypothesis explained the observed rate of emission of radiation from hot bodies very well, but its implications for determinism were not realized until 1926, when another German scientist, Werner Heisenberg, formulated his famous uncertainty principle.

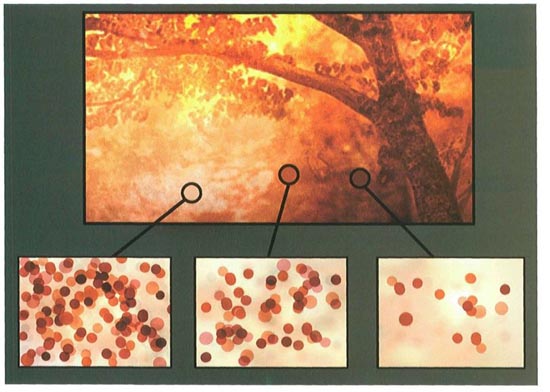

Faintest Possible Light

Faint light means fewer photons. The faintest possible light of any color is the light carried by a single photon.

The uncertainty principle tells us that, contrary to Laplace’s belief, nature does impose limits on our ability to predict the future using scientific law. This is because, in order to predict the future position and velocity of a particle, one has to be able to measure its initial state—that is, its present position and its velocity—accurately. The obvious way to do this is to shine light on the particle. Some of the waves of light will be scattered by the particle. These can be detected by the observer and will indicate the particle’s position. However, light of a given wavelength has only limited sensitivity: you will not be able to determine the position of the particle more accurately than the distance between the wave crests of the light. Thus, in order to measure the position of the particle precisely, it is necessary to use light of a short wavelength, that is, of a high frequency. By Planck’s quantum hypothesis, though, you cannot use an arbitrarily small amount of light: you have to use at least one quantum, whose energy is higher at higher frequencies. Thus, the more accurately you wish to measure the position of a particle, the more energetic the quantum of light you must shoot at it.

According to quantum theory, even one quantum of light will disturb the particle: it will change its velocity in a way that cannot be predicted. And the more energetic the quantum of light you use, the greater the likely disturbance. That means that for more precise measurements of position, when you will have to employ a more energetic quantum, the velocity of the particle will be disturbed by a larger amount. So the more accurately you try to measure the position of the particle, the less accurately you can measure its speed, and vice versa. Heisenberg showed that the uncertainty in the position of the particle times the uncertainty in its velocity times the mass of the particle can never be smaller than a certain fixed quantity. That means, for instance, if you halve the uncertainty in position, you must double the uncertainty in velocity, and vice versa. Nature forever constrains us to making this trade-off.

How bad is this trade-off? That depends on the numerical value of the "certain fixed quantity" we mentioned above. That quantity is known as Planck’s constant, and it is a very tiny number. Because Planck’s constant is so tiny, the effects of the trade-off, and of quantum theory in general, are, like the effects of relativity, not directly noticeable in our everyday lives. (Though quantum theory does affect our lives—as the basis of such fields as, say, modern electronics.) For example, if we pinpoint the position of a Ping-Pong ball with a mass of one gram to within one centimeter in any direction, then we can pinpoint its speed to an accuracy far greater than we would ever need to know. But if we measure the position of an electron to an accuracy of roughly the confines of an atom, then we cannot know its speed more precisely than about plus or minus one thousand kilometers per second, which is not very precise at all.
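The two cases can be checked with a short calculation. The sketch assumes the modern form of the bound, in which the uncertainty in position times the mass times the uncertainty in velocity is at least the reduced Planck constant divided by two; the text speaks only of a fixed quantity of the order of Planck's constant, so the exact prefactor here is an assumption. The function name and the value of 10⁻¹⁰ meters for "the confines of an atom" are likewise illustrative choices.

```python
HBAR = 1.054571817e-34            # reduced Planck constant, joule-seconds
ELECTRON_MASS = 9.1093837015e-31  # kilograms


def min_velocity_uncertainty(mass_kg, position_uncertainty_m):
    """Smallest velocity uncertainty allowed by dx * m * dv >= hbar / 2."""
    return HBAR / (2.0 * mass_kg * position_uncertainty_m)


# Ping-Pong ball: one gram, located to within one centimeter.
ball = min_velocity_uncertainty(1e-3, 1e-2)

# Electron located to within roughly the size of an atom (assumed 1e-10 m).
electron = min_velocity_uncertainty(ELECTRON_MASS, 1e-10)

print(f"Ping-Pong ball: {ball:.2e} meters per second")
print(f"Electron:       {electron / 1000:.0f} kilometers per second")
```

For the ball the limit is of order 10⁻³⁰ meters per second, far below anything measurable. For the electron it comes out at several hundred kilometers per second, the same order of magnitude as the figure quoted above; the exact number depends on the prefactor convention and on how large one takes an atom to be.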

The limit dictated by the uncertainty principle does not depend on the way in which you try to measure the position or velocity of the particle, or on the type of particle. Heisenberg’s uncertainty principle is a fundamental, inescapable property of the world, and it has had profound implications for the way in which we view the world. Even after more than seventy years, these implications have not been fully appreciated by many philosophers and are still the subject of much controversy. The uncertainty principle signaled an end to Laplace’s dream of a theory of science, a model of the universe that would be completely deterministic. We certainly cannot predict future events exactly if we cannot even measure the present state of the universe precisely!

We could still imagine that there is a set of laws that determine events completely for some supernatural being who, unlike us, could observe the present state of the universe without disturbing it. However, such models of the universe are not of much interest to us ordinary mortals. It seems better to employ the principle of economy known as Occam’s razor and cut out all the features of the theory that cannot be observed. This approach led Heisenberg, Erwin Schrödinger, and Paul Dirac in the 1920s to reformulate Newton’s mechanics into a new theory called quantum mechanics, based on the uncertainty principle. In this theory, particles no longer had separate, well-defined positions and velocities. Instead, they had a quantum state, which was a combination of position and velocity defined only within the limits of the uncertainty principle.

One of the revolutionary properties of quantum mechanics is that it does not predict a single definite result for an observation. Instead, it predicts a number of different possible outcomes and tells us how likely each of these is. That is to say, if you made the same measurement on a large number of similar systems, each of which started off in the same way, you would find that the result of the measurement would be A in a certain number of cases, B in a different number, and so on. You could predict the approximate number of times that the result would be A or B, but you could not predict the specific result of an individual measurement.

For instance, imagine you toss a dart toward a dartboard. According to classical theories—that is, the old, nonquantum theories—the dart will either hit the bull’s-eye or it will miss it. And if you know the velocity of the dart when you toss it, the pull of gravity, and other such factors, you’ll be able to calculate whether it will hit or miss. But quantum theory tells us this is wrong, that you cannot say it for certain. Instead, according to quantum theory there is a certain probability that the dart will hit the bull’s-eye, and also a nonzero probability that it will land in any other given area of the board. Given an object as large as a dart, if the classical theory—in this case Newton’s laws—says the dart will hit the bull’s-eye, then you can be safe in assuming it will. At least, the chances that it won’t (according to quantum theory) are so small that if you went on tossing the dart in exactly the same manner until the end of the universe, it is probable that you would still never observe the dart missing its target. But on the atomic scale, matters are different. A dart made of a single atom might have a 90 percent probability of hitting the bull’s-eye, with a 5 percent chance of hitting elsewhere on the board, and another 5 percent chance of missing it completely. You cannot say in advance which of these it will be. All you can say is that if you repeat the experiment many times, you can expect that, on average, ninety times out of each hundred times you repeat the experiment, the dart will hit the bull’s-eye.
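The single-atom dart can be imitated with a short simulation. This is only a sketch: it uses Python's ordinary pseudo-random number generator as a stand-in for quantum probabilities, takes the 90, 5, and 5 percent figures directly from the example above, and the function name, outcome labels, and seed are hypothetical choices.

```python
import random
from collections import Counter

OUTCOMES = ["bullseye", "elsewhere on board", "missed board"]
PROBABILITIES = [0.90, 0.05, 0.05]  # figures from the single-atom dart example


def throw_darts(n_throws, seed=0):
    """Draw n_throws independent outcomes and count how often each occurs."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    results = rng.choices(OUTCOMES, weights=PROBABILITIES, k=n_throws)
    return Counter(results)


n_throws = 10_000
counts = throw_darts(n_throws)

for outcome in OUTCOMES:
    print(f"{outcome}: {counts[outcome] / n_throws:.3f}")
```

No single throw can be predicted, yet over many throws the fraction of bull's-eyes settles close to 0.90. That is the kind of statement quantum mechanics makes: it fixes the frequencies, not the individual results.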

Quantum mechanics therefore introduces an unavoidable element of unpredictability or randomness into science. Einstein objected to this very strongly, despite the important role he had played in the development of these ideas. In fact, he was awarded the Nobel Prize for his contribution to quantum theory. Nevertheless, he never accepted that the universe was governed by chance; his feelings were summed up in his famous statement "God does not play dice."