Superintelligence: Paths, Dangers, Strategies

Authors: Nick Bostrom

This is not a prescription of fanaticism. The intelligence explosion might still be many decades off in the future. Moreover, the challenge we face is, in part, to hold on to our humanity: to maintain our groundedness, common sense, and good-humored decency even in the teeth of this most unnatural and inhuman problem. We need to bring all our human resourcefulness to bear on its solution.

Yet let us not lose track of what is globally significant. Through the fog of everyday trivialities, we can perceive—if but dimly—the essential task of our age. In this book, we have attempted to discern a little more feature in what is otherwise still a relatively amorphous and negatively defined vision—one that presents as our principal moral priority (at least from an impersonal and secular perspective) the reduction of existential risk and the attainment of a civilizational trajectory that leads to a compassionate and jubilant use of humanity’s cosmic endowment.

1. Not all endnotes contain useful information, however.

2. I don’t know which ones.

CHAPTER 1: PAST DEVELOPMENTS AND PRESENT CAPABILITIES

1. A subsistence-level income today is about $400 (Chen and Ravallion 2010). A million subsistence-level incomes is thus $400,000,000. The current world gross product is about $60,000,000,000,000 and in recent years has grown at an annual rate of about 4% (compound annual growth rate since 1950, based on Maddison [2010]). These figures yield the estimate mentioned in the text, which of course is only an order-of-magnitude approximation. If we look directly at population figures, we find that it currently takes the world population about one and a half weeks to grow by one million; but this underestimates the growth rate of the economy since per capita income is also increasing. By 5000 BC, following the Agricultural Revolution, the world population was growing at a rate of about 1 million per 200 years (a great acceleration since the rate of perhaps 1 million per million years in early humanoid prehistory), so a great deal of acceleration had already occurred by then. Still, it is impressive that an amount of economic growth that took 200 years seven thousand years ago takes just ninety minutes now, and that the world population growth that took two centuries then takes one and a half weeks now. See also Maddison (2005).
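The ninety-minute figure follows from the numbers above with one line of arithmetic. The sketch below assumes, as a simplification, that the 4% annual growth is spread evenly through the year, so world output rises by about 0.04 × 60 trillion = 2.4 trillion dollars per year; it then asks how many minutes that takes to add a million subsistence incomes.

```python
# Figures quoted in the note (order-of-magnitude inputs, not precise data).
SUBSISTENCE_INCOME_USD = 400      # annual subsistence-level income (Chen and Ravallion 2010)
WORLD_PRODUCT_USD = 60e12         # current world gross product
ANNUAL_GROWTH_RATE = 0.04         # approximate compound annual growth rate
MINUTES_PER_YEAR = 365.25 * 24 * 60


def minutes_to_add(amount_usd: float) -> float:
    """Minutes needed for world output to grow by amount_usd.

    Simplifying assumption: growth is spread evenly over the year, so output
    rises by WORLD_PRODUCT_USD * ANNUAL_GROWTH_RATE dollars per year.
    """
    growth_per_year = WORLD_PRODUCT_USD * ANNUAL_GROWTH_RATE  # about 2.4e12 USD per year
    return amount_usd / growth_per_year * MINUTES_PER_YEAR


# A million subsistence-level incomes: 1e6 * 400 = 4e8 USD.
target = 1_000_000 * SUBSISTENCE_INCOME_USD
print(f"{target:,.0f} USD of new output every {minutes_to_add(target):.0f} minutes")
```

The result, about 88 minutes, is what the text rounds to ninety. Because the calculation is linear, halving the assumed growth rate would double the time.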

2. Such dramatic growth and acceleration might suggest one notion of a possible coming “singularity,” as adumbrated by John von Neumann in a conversation with the mathematician Stanislaw Ulam:

Our conversation centred on the ever accelerating progress of technology and changes in the mode of human life, which gives the appearance of approaching some essential singularity in the history of the race beyond which human affairs, as we know them, could not continue. (Ulam 1958)

3. Hanson (2000).

4. Vinge (1993); Kurzweil (2005).

5. Sandberg (2010).

6. Van Zanden (2003); Maddison (1999, 2001); De Long (1998).

7. Two oft-repeated optimistic statements from the 1960s: “Machines will be capable, within twenty years, of doing any work a man can do” (Simon 1965, 96); “Within a generation … the problem of creating artificial intelligence will substantially be solved” (Minsky 1967, 2). For a systematic review of AI predictions, see Armstrong and Sotala (2012).

8. See, for example, Baum et al. (2011) and Armstrong and Sotala (2012).

9. It might suggest, however, that AI researchers know less about development timelines than they think they do; but this could cut both ways: they might overestimate as well as underestimate the time to AI.

10. Good (1965, 33).

11. One exception is Norbert Wiener, who did have some qualms about the possible consequences. He wrote, in 1960: “If we use, to achieve our purposes, a mechanical agency with whose operation we cannot efficiently interfere once we have started it, because the action is so fast and irrevocable that we have not the data to intervene before the action is complete, then we had better be quite sure that the purpose put into the machine is the purpose which we really desire and not merely a colourful imitation of it” (Wiener 1960). Ed Fredkin spoke about his worries about superintelligent AI in an interview described in McCorduck (1979). By 1970, Good himself was writing about the risks, and even called for the creation of an association to deal with the dangers (Good [1970]; see also his later article [Good 1982] where he foreshadows some of the ideas of “indirect normativity” that we discuss in Chapter 13). By 1984, Marvin Minsky was also writing about many of the key worries (Minsky 1984).

12. Cf. Yudkowsky (2008a). On the importance of assessing the ethical implications of potentially dangerous future technologies before they become feasible, see Roache (2008).

13. McCorduck (1979).

14. Newell et al. (1959).

15. The SAINT program, the ANALOGY program, and the STUDENT program, respectively. See Slagle (1963), Evans (1964, 1968), and Bobrow (1968).

16. Nilsson (1984).

17. Weizenbaum (1966).

18. Winograd (1972).

19. Cope (1996); Weizenbaum (1976); Moravec (1980); Thrun et al. (2006); Buehler et al. (2009); Koza et al. (2003). The Nevada Department of Motor Vehicles issued the first license for a driverless car in May 2012.

20. The STANDUP system (Ritchie et al. 2007).

21. Schwartz (1987). Schwartz is here characterizing a skeptical view that he thought was represented by the writings of Hubert Dreyfus.

22. One vocal critic during this period was Hubert Dreyfus. Other prominent skeptics from this era include John Lucas, Roger Penrose, and John Searle. However, among these only Dreyfus was mainly concerned with refuting claims about what practical accomplishments we should expect from existing paradigms in AI (though he seems to have been open to the possibility that new paradigms could go further). Searle’s target was functionalist theories in the philosophy of mind, not the instrumental powers of AI systems. Lucas and Penrose denied that a classical computer could ever be programmed to do everything that a human mathematician can do, but they did not deny that any particular function could in principle be automated or that AIs might eventually become very instrumentally powerful. Cicero remarked that “there is nothing so absurd but some philosopher has said it” (Cicero 1923, 119); yet it is surprisingly hard to think of any significant thinker who has denied the possibility of machine superintelligence in the sense used in this book.

23. For many applications, however, the learning that takes place in a neural network is little different from the learning that takes place in linear regression, a statistical technique developed by Adrien-Marie Legendre and Carl Friedrich Gauss in the early 1800s.
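A minimal illustration of the point: the sketch below fits the same toy data two ways, first with the closed-form least-squares formulas for a line, then by gradient descent on a single linear "neuron" (no hidden layer, no nonlinearity) trained on squared error. The data points, learning rate, and step count are arbitrary choices for the illustration. Both routes arrive at the same slope and intercept; networks with hidden nonlinear units are what go beyond this degenerate case.

```python
def least_squares(xs, ys):
    """Closed-form ordinary least squares for y ~ w*x + b."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    w = cov / var
    return w, mean_y - w * mean_x


def train_linear_neuron(xs, ys, lr=0.05, steps=5000):
    """Gradient descent on mean squared error for a single linear unit."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # Gradients of (1/n) * sum((w*x + b - y)^2) with respect to w and b.
        errors = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2 * sum(e * x for e, x in zip(errors, xs)) / n
        grad_b = 2 * sum(errors) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.1, 2.9, 5.2, 7.1, 8.8]  # hypothetical noisy samples of roughly y = 2x + 1
print(least_squares(xs, ys))        # slope 1.96, intercept 1.10 (up to float rounding)
print(train_linear_neuron(xs, ys))  # converges to the same values
```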

24. The basic algorithm was described by Arthur Bryson and Yu-Chi Ho as a multi-stage dynamic optimization method in 1969 (Bryson and Ho 1969). The application to neural networks was suggested by Paul Werbos in his 1974 doctoral thesis (reprinted in Werbos 1994), but it was only after the work by David Rumelhart, Geoffrey Hinton, and Ronald Williams in 1986 (Rumelhart et al. 1986) that the method gradually began to seep into the awareness of a wider community.
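Backpropagation is the chain rule applied layer by layer, from the output back toward the inputs. The sketch below computes the gradient of squared error for a tiny network with one tanh hidden layer and a linear output unit, then checks it against a central finite-difference estimate. The layer sizes, random initial weights, input, and target are arbitrary illustrative choices; this is a textbook formulation, not a reconstruction of any of the historical papers cited above.

```python
import math
import random


def forward(W1, b1, w2, b2, x):
    """Hidden: h_j = tanh(sum_i W1[j][i] * x[i] + b1[j]); output: y = w2 . h + b2."""
    h = [math.tanh(sum(wji * xi for wji, xi in zip(row, x)) + bj) for row, bj in zip(W1, b1)]
    y = sum(wj * hj for wj, hj in zip(w2, h)) + b2
    return h, y


def loss(params, x, target):
    _, y = forward(*params, x)
    return 0.5 * (y - target) ** 2


def backprop(W1, b1, w2, b2, x, target):
    """Gradients of 0.5 * (y - target)^2 with respect to every parameter."""
    h, y = forward(W1, b1, w2, b2, x)
    dy = y - target                                   # dL/dy
    grad_w2 = [dy * hj for hj in h]                   # dL/dw2_j = dy * h_j
    grad_b2 = dy
    # Back through w2 and tanh, using d tanh(z)/dz = 1 - tanh(z)^2.
    dz = [dy * wj * (1 - hj ** 2) for wj, hj in zip(w2, h)]
    grad_W1 = [[dzj * xi for xi in x] for dzj in dz]  # dL/dW1[j][i] = dz_j * x_i
    return grad_W1, dz, grad_w2, grad_b2


def unpack(theta, n_in, n_hidden):
    """Split a flat parameter list into (W1, b1, w2, b2)."""
    k = n_in * n_hidden
    W1 = [theta[j * n_in:(j + 1) * n_in] for j in range(n_hidden)]
    return W1, theta[k:k + n_hidden], theta[k + n_hidden:k + 2 * n_hidden], theta[k + 2 * n_hidden]


def flatten(gW1, gb1, gw2, gb2):
    """Flatten gradients in the same order that unpack() reads parameters."""
    return [g for row in gW1 for g in row] + list(gb1) + list(gw2) + [gb2]


def numerical_grad(theta, x, target, n_in, n_hidden, eps=1e-6):
    """Central finite differences, one parameter at a time."""
    grads = []
    for k in range(len(theta)):
        plus, minus = list(theta), list(theta)
        plus[k] += eps
        minus[k] -= eps
        diff = loss(unpack(plus, n_in, n_hidden), x, target) - loss(unpack(minus, n_in, n_hidden), x, target)
        grads.append(diff / (2 * eps))
    return grads


random.seed(0)
N_IN, N_HIDDEN = 2, 3
theta = [random.uniform(-1, 1) for _ in range(N_IN * N_HIDDEN + 2 * N_HIDDEN + 1)]
x, target = [0.5, -1.2], 0.3
analytic = flatten(*backprop(*unpack(theta, N_IN, N_HIDDEN), x, target))
numeric = numerical_grad(theta, x, target, N_IN, N_HIDDEN)
max_diff = max(abs(a - n) for a, n in zip(analytic, numeric))
print(f"max |analytic - numeric| = {max_diff:.2e}")
```

Training then consists of repeatedly nudging each parameter against its backpropagated gradient; the finite-difference comparison is only a check that the backward pass is right.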

25. Nets lacking hidden layers had previously been shown to have severely limited functionality (Minsky and Papert 1969).
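The standard example of this limitation is exclusive-or (XOR): a single threshold unit cannot compute it, because no straight line separates the inputs that should give 1 from those that should give 0. The sketch below runs the classic perceptron learning rule on AND, which is linearly separable, and on XOR; the epoch budget and learning rate are arbitrary. An error-free epoch means the weights classify every case correctly, so the rule can reach one for AND but never for XOR.

```python
def perceptron_train(samples, epochs=100, lr=1.0):
    """Classic perceptron rule for one threshold unit: output 1 if w.x + b > 0."""
    w = [0.0, 0.0]
    b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in samples:
            out = 1 if w[0] * x[0] + w[1] * x[1] + b > 0 else 0
            err = target - out
            if err != 0:
                mistakes += 1
                w[0] += lr * err * x[0]
                w[1] += lr * err * x[1]
                b += lr * err
        if mistakes == 0:
            return True, (w, b)   # every sample classified correctly
    return False, (w, b)          # no error-free epoch within the budget


INPUTS = [(0, 0), (0, 1), (1, 0), (1, 1)]
AND = [(x, x[0] & x[1]) for x in INPUTS]
XOR = [(x, x[0] ^ x[1]) for x in INPUTS]

print("AND learned:", perceptron_train(AND)[0])  # True
print("XOR learned:", perceptron_train(XOR)[0])  # False
```

Adding a hidden layer, trained for instance with backpropagation, removes this particular limitation.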

26. E.g., MacKay (2003).

27. Murphy (2012).

28. Pearl (2009).

29. We suppress various technical details here in order not to unduly burden the exposition. We will have occasion to revisit some of these ignored issues in Chapter 12.

30

. A program

p

is a description of string

x

if

p

, run on (some particular) universal Turing machine

U

, outputs

x

; we write this as

U(p) = x

. (The string

x

here represents a possible world.) The

Kolmogorov complexity of

x

is then

K

(

x

):=min

p

{

l

(

p

):

U

(

p

) =

x

}, where

l

(

p

) is the length of

p

in bits. The “Solomonoff” probability of

x

is then defined aswhere the sum is defined over all (“minimal,” i.e. not necessarily halting) programs

p

for which

U

outputs a string starting with

x

(Hutter 2005).

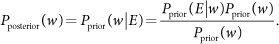
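Kolmogorov complexity is uncomputable for a universal machine, but the two definitions can be made concrete by swapping in a small, deliberately non-universal machine. In the sketch below, `toy_machine` is a hypothetical stand-in for $U$: a program starting with `0` prints its remaining bits literally, and a program `1` + bit + count prints that bit repeated count times (count written in binary). Brute-force search over all programs up to a length bound then gives toy analogues of $K(x)$ and of the weighted sum in $M(x)$. Since this program set is not prefix-free and the search is truncated, the toy weights are unnormalized; only their relative size means anything.

```python
from itertools import product


def toy_machine(p: str):
    """Hypothetical stand-in for U (NOT universal). Returns the output string, or None.

    '0' + s          -> prints s literally
    '1' + b + count  -> prints bit b repeated int(count, 2) times
    """
    if not p:
        return None
    if p[0] == "0":
        return p[1:]
    if len(p) < 3:  # needs a bit to repeat and at least one count bit
        return None
    return p[1] * int(p[2:], 2)


def programs(max_len):
    """All bitstrings of length 1..max_len, shortest first."""
    for n in range(1, max_len + 1):
        for bits in product("01", repeat=n):
            yield "".join(bits)


def toy_K(x, max_len=16):
    """Length of the shortest program whose output is exactly x (None if beyond max_len)."""
    for p in programs(max_len):
        if toy_machine(p) == x:
            return len(p)
    return None


def toy_M(x, max_len=12):
    """Truncated, unnormalized sum of 2^-l(p) over programs whose output starts with x."""
    total = 0.0
    for p in programs(max_len):
        out = toy_machine(p)
        if out is not None and out.startswith(x):
            total += 2.0 ** -len(p)
    return total


regular, irregular = "0" * 10, "0110100110"
print(toy_K(regular), toy_K(irregular))   # 6 11
print(toy_M(regular) > toy_M(irregular))  # True
```

The regular string has a short description (`101010`: repeat `0` ten times), so it gets low complexity and high weight; the irregular one can only be printed literally.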

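31. Bayesian conditioning on evidence $E$ gives

$$P(w \mid E) = \begin{cases} P(w)/P(E) & \text{if } E \text{ is true in } w, \\ 0 & \text{otherwise.} \end{cases}$$

(The probability of a proposition [like $E$] is the sum of the probability of the possible worlds in which it is true.)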
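For a finite set of possible worlds, conditioning takes only a few lines. In the sketch below, worlds are labels with prior probabilities and a proposition is a predicate on worlds; the two-coin example and its uniform prior are hypothetical illustrations. Exact fractions avoid rounding noise.

```python
from fractions import Fraction


def probability(prior, proposition):
    """P(E): sum of the probabilities of the worlds in which E is true."""
    return sum(p for w, p in prior.items() if proposition(w))


def condition(prior, evidence):
    """Posterior P(w | E): P(w)/P(E) if E is true in w, else 0."""
    p_e = probability(prior, evidence)
    if p_e == 0:
        raise ValueError("cannot condition on evidence with probability zero")
    return {w: (p / p_e if evidence(w) else Fraction(0)) for w, p in prior.items()}


def at_least_one_head(world):
    return "H" in world


# Hypothetical example: worlds are outcomes of two fair coin tosses.
prior = {w: Fraction(1, 4) for w in ("HH", "HT", "TH", "TT")}
posterior = condition(prior, at_least_one_head)
print(posterior["HH"])          # 1/3
print(sum(posterior.values()))  # 1
```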

32. Or randomly picks one of the possible actions with the highest expected utility, in case there is a tie.

33. More concisely, the expected utility of an action can be written as $EU(a) = \sum_{w \in W} U(w)\, P(w \mid a)$, where the sum is over all possible worlds.
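Notes 32 and 33 together describe a simple decision rule: compute $EU(a) = \sum_{w} U(w)\,P(w \mid a)$ for each action and pick a maximizer, breaking ties at random. The sketch below assumes small finite tables; the actions, worlds, probabilities, and utilities are hypothetical, chosen so that two actions tie.

```python
import random


def expected_utility(action, outcome_probs, utility):
    """EU(a) = sum over worlds w of U(w) * P(w | a)."""
    return sum(utility[w] * p for w, p in outcome_probs[action].items())


def choose_action(actions, outcome_probs, utility, rng=random):
    """Return an action with maximal expected utility, breaking ties uniformly at random.

    Uses exact equality to detect ties; with floating-point utilities a tolerance
    may be more appropriate.
    """
    scores = {a: expected_utility(a, outcome_probs, utility) for a in actions}
    best = max(scores.values())
    best_actions = [a for a, s in scores.items() if s == best]
    return rng.choice(best_actions)


# Hypothetical toy problem: three actions, two possible worlds.
utility = {"good": 10, "bad": 0}
outcome_probs = {
    "a": {"good": 0.5, "bad": 0.5},    # EU = 5.0
    "b": {"good": 0.5, "bad": 0.5},    # EU = 5.0 (ties with "a")
    "c": {"good": 0.25, "bad": 0.75},  # EU = 2.5
}
print(choose_action(list(outcome_probs), outcome_probs, utility, random.Random(1)))  # "a" or "b"
```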

34. See, e.g., Howson and Urbach (1993); Bernardo and Smith (1994); Russell and Norvig (2010).

35. Wainwright and Jordan (2008). The application areas of Bayes nets are myriad; see, e.g., Pourret et al. (2008).

36. One might wonder why so much detail is given to game AI here, which to some might seem like an unimportant application area. The answer is that game-playing offers some of the clearest measures of human vs. AI performance.

37. Samuel (1959); Schaeffer (1997, ch. 6).

38. Schaeffer et al. (2007).

39. Berliner (1980a, b).

40. Tesauro (1995).

41. Such programs include GNU Backgammon (see Silver [2006]) and Snowie (see Gammoned.net [2012]).

42. Lenat himself had a hand in guiding the fleet-design process. He wrote: “Thus the final crediting of the win should be about 60/40% Lenat/Eurisko, though the significant point here is that neither party could have won alone” (Lenat 1983, 80).

43. Lenat (1982, 1983).

44. Cirasella and Kopec (2006).

45. Kasparov (1996, 55).

46. Newborn (2011).

47. Keim et al. (1999).

48. See Armstrong (2012).

49. Sheppard (2002).

50. Wikipedia (2012a).

51. Markoff (2011).