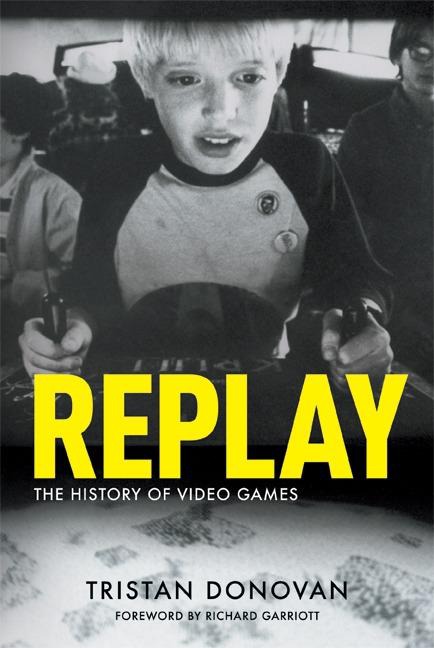

Replay: The History of Video Games

Authors: Tristan Donovan

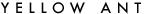

About The Author

Tristan Donovan was born in Shepherd’s Bush, London, in 1975. His first experience of video games was Space Invaders and he liked it, which was just as well because that was one of only three games he had on his TI-99/4a computer that saw him through the 1980s.

He disliked English at school and studied ecology at university, so naturally became a journalist after graduating in 1998. Since 2001 he has worked for Haymarket Media in a number of roles, the latest of which is as deputy editor of Third Sector. Tristan has also written for The Guardian, Edge, Stuff, The Big Issue, GamesTM, Game Developer, The Gadget Show and a whole bunch of trade magazines you probably haven’t heard of.

He lives in East Sussex, UK with his partner and two dachshunds.

REPLAY

The History of Video Games

TRISTAN DONOVAN

Published by Yellow Ant

Copyright © Tristan Donovan 2010

Tristan Donovan has asserted his rights under the Copyright, Designs and Patents Act 1988 to be identified as the author of this work.

All rights reserved.

Without limiting the rights under copyright reserved above, no part of this publication may be reproduced, stored in or introduced into a retrieval system, or transmitted, in any form or by any means (electronic, mechanical, photocopying, recording or otherwise) without the prior written permission of both the copyright owner and the above publisher of this book.

First published in Great Britain in 2010 by Yellow Ant. 65 Southover High Street, Lewes, East Sussex, BN7 1JA, United Kingdom

Cover by Jay Priest and Tom Homewood

Cover photo © Corbis

iBook design by Yellow Ant

ISBN 978-0-9565072-2-8

To Jay, Mum, Dad and Jade

Richard Garriott, creator of the Ultima series

Foreword by Richard Garriott

Many consider the video games industry a young one. And, indeed, compared to many industries it is. It has developed from being a home-based hobby of the odd computer nerd to a multi-billion dollar business in just 30 years or so. I am old enough, and consider myself lucky enough, to have worked in the industry for much of its history. Astounding achievements in technology and design have driven this business to the forefront of the entertainment industry, surpassing books and movies long ago as not only the preferred medium for entertainment, but the most lucrative as well. Yet, it still has not been recognized as the important cultural art form that it is.

It is important to look back and remember how quickly we got here. Many who consider video game history focus on certain parts, such as consoles and other hardware that helped propel this business into the artistic medium it is today. However, there are many more aspects that are equally important. I believe that Tristan Donovan’s account is the most comprehensive thus far. In this book you will see his account of the inception of the video game’s true foundations. He details with great insight the people and events that led to what is the most powerful creative field today, and he takes a holistic view of the genre. Tristan’s unique approach demonstrates the strength of this field – he focuses on how video games have become a medium for creativity unlike any other industry, and how those creators, artists, storytellers, and developers have impacted culture in not just the US, but worldwide. That is quite a powerful influence and warrants recognition.

This book credits the greatest artistic creators of our time but doesn’t limit what they’ve accomplished to a particular platform. The video game genre spans coin-operated machines, consoles, personal computers, and more recently, the impetus of mobile, web-based, and handheld markets. There are very few venues in life these days you will not see some sort of influence of a video game – from music to film, to education to the military, games have touched the lives of people all over the world. While some cultures prefer a particular game style over another, the common denominator is that the art of the video game is not simply synonymous with entertainment, but with life.

Introduction

“Why are you writing another book on the history of video games?” asked Michael Katz, the former head of Sega of America, when I interviewed him for this book.

There are many reasons why, but two stand out. The first is that the attempts at writing the history of video games to date have been US rather than global histories. In Replay: The History of Video Games, I hope to redress the balance, giving the US its due without neglecting the important influence of games developed in Japan, Europe and elsewhere. The second, and more important, reason is that video game history is usually told as a story of hardware not software: a tale of successive generations of game consoles and their manufacturers’ battle for market share. I wanted to write a history of video games as an art form rather than as a business product.

In addition, video games do not just exist on consoles. They appear on mobile phones, in arcades, within web browsers and, of course, on computers - formats that lack the distinct generational divides of consoles. Hardware is merely the vehicle for the creativity and vision of the video game developers who have spent the last 50 or so years moulding a new entertainment medium where, unlike almost all other rival media, the user is an active participant rather than a passive observer.

Hardware sets limits on what can be achieved, but it does not dictate what is created. The design of the ZX Spectrum home computer did not guarantee the creation of British surrealist games such as Jet Set Willy or Deus Ex Machina. The technology of the Nintendo 64 only made Super Mario 64 possible; it did not ensure that Shigeru Miyamoto would make it.

The real history of video games is a story of human creativity, aided by technological growth.

Replay sets out to celebrate the vitality and vision of video game creators, and to shed light on why video games have evolved in the way they have. For that reason not all of the games featured in this book will have been popular and conversely some very popular games are not mentioned. The focus is on the innovative, not the commercially successful.

Finally, a note on terminology. I’ve used the term ‘video game’ throughout this book with the occasional use of ‘game’ when there is no risk of confusion with other forms of game such as board games. I chose video game in preference to other terms for several reasons: it remains in everyday use, unlike TV game or electronic game; it is broad enough to encompass the entire medium, unlike ‘computer game’, which would exclude games, such as Atari’s Pong, that did not use microprocessors; and terms such as ‘interactive entertainment’, while more accurate, have failed to catch on despite repeated attempts over the years.

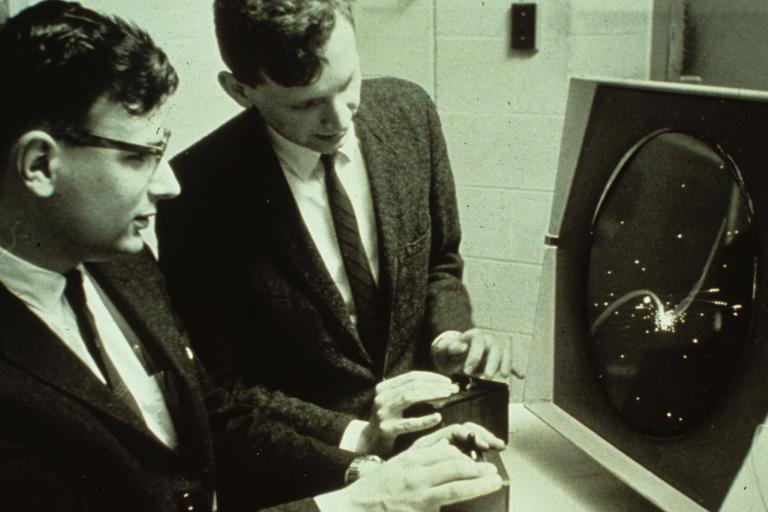

Space race: Spacewar! co-creators Dan Edwards (left) and Peter Samson engage in intergalactic warfare on a PDP-1 circa 1962. Courtesy of the Computer History Museum

1. Hey! Let’s Play Games!

The world changed forever on the morning of the 16th July 1945. At 5.29am the first atomic bomb exploded at the Alamogordo Bombing Range in the Jornada del Muerto desert, New Mexico. The blast swelled into an intimidating mushroom cloud that rose 7.5 kilometres into the sky and ripped out a 3 metre-deep crater lined with irradiated glass formed from melted sand. The explosion marked the consummation of the top-secret Manhattan Project that had tasked some of the Allies’ best scientists and engineers with building the ultimate weapon - a weapon that would end the Second World War.

Within weeks of the Alamogordo test, atomic bombs had levelled the Japanese cities of Hiroshima and Nagasaki. The bombs killed thousands instantly and left many more to die slowly from radiation poisoning. Five days after the destruction of Nagasaki on the 9th August 1945, the Japanese government surrendered. The Second World War was over. The world the war left behind was polarised between the communist east, led by the USSR, and the US-led free-market democracies of the west. The relationship between the wartime allies of the USA and USSR soon unravelled resulting in the Cold War, a 40-year standoff that would repeatedly take the world to the brink of nuclear war.

But the Cold War was more than just a military conflict. It was a struggle between two incompatible visions of the future and would be fought not just in diplomacy and warfare but also in economic development, propaganda, espionage and technological progress. And it was in the technological arms race of the Cold War that the video game would be conceived.

* * *

On the 14th February 1946, exactly six months after Japan’s surrender, the University of Pennsylvania switched on the first programmable computer: the Electronic Numerical Integrator and Computer, or ENIAC for short. The state-of-the-art computer took three years to build, cost $500,000 of US military funding and was created to calculate artillery-firing tables for the army. It was a colossus of a machine, weighing 30 tonnes and requiring 63 square metres of floor space. Its innards contained more than 1,500 mechanical relays and 17,000 vacuum tubes – the automated switches that allowed the ENIAC to carry out instructions and make calculations. Since it had no screen or keyboard, instructions were fed in using punch cards. The ENIAC would reply by printing punch cards of its own. These then had to be fed into an IBM accounting machine to be translated into anything of meaning. The press heralded the ENIAC as a “giant brain”.

It was an apt description given that many computer scientists dreamed of creating an artificial intelligence. Foremost among these computer scientists were the British mathematician Alan Turing and the American computing expert Claude Shannon. The pair had worked together during the war decrypting the secret codes used by German U-boats. The pair’s ideas and theories would form the foundations of modern computing. They saw artificial intelligence as the ultimate aim of computer research and both agreed that getting a computer to defeat a human at Chess would be an important step towards realising that dream.

The board game’s appeal as a tool for artificial intelligence research was simple. While the rules of Chess are straightforward, the variety of possible moves and situations meant that even if a computer could play a million games of Chess every second it would take 10^108 years for it to play every possible version of the game.[1] As a result any computer that could defeat an expert human player at Chess would need to be able to react to and anticipate the moves of that person in an intelligent way. As Shannon put it in his 1950 paper Programming a Computer for Playing Chess: “Although perhaps of no practical importance, the question [of computer Chess] is of theoretical interest, and it is hoped that a satisfactory solution of this problem will act as a wedge in attacking other problems of a similar nature and of greater significance.”
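To give a sense of the scale behind that estimate, the arithmetic can be sketched in a few lines of Python. The rate of a million games per second and the span of 10^108 years come from the passage above; the variable names and the use of a 365.25-day year are illustrative assumptions, and the result is only an order-of-magnitude figure.

```python
import math

# Figures quoted in the text
GAMES_PER_SECOND = 10**6   # "a million games of Chess every second"
YEARS = 10**108            # "10^108 years"

# Assumption for this sketch: an average year of 365.25 days
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60

# Total number of games implied by playing at that rate for that long
total_games = GAMES_PER_SECOND * SECONDS_PER_YEAR * YEARS

# Report the order of magnitude rather than the full number
print(f"Implied number of distinct games: about 10^{math.log10(total_games):.1f}")
```

Under these assumptions the implied number of distinct games has more than 120 digits, which is why brute-force enumeration was never an option and a Chess program had to evaluate positions selectively instead.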

In 1947, Turing became the first person to write a computer Chess program. However, Turing’s code was so advanced none of the primitive computers that existed at the time could run it. Eventually in 1952, Turing resorted to testing his Chess game by playing a match with a colleague where he pretended to be the computer. After hours of painstakingly mimicking his computer code, Turing lost to his colleague. He would never get the opportunity to implement his ideas for computer Chess on a computer. The same year that he tested his program with his colleague, he was arrested and convicted of homosexuality. Two years later, having been shunned by the scientific establishment because of his sexuality, he committed suicide by eating an apple laced with cyanide.

Despite Turing’s untimely exit, computer scientists such as Shannon and Alex Bernstein would spend much of the 1950s investigating artificial intelligence by making computers play games. While Chess remained the ultimate test, others brought simpler games to life on a computer.

In 1951 the UK’s Labour government launched the Festival of Britain, a sprawling year-long national event that it hoped would instil a sense of hope in a population reeling from the aftermath of the Second World War. With UK cities, particularly London, still marred by ruins and bomb craters, the government hoped its celebration of art, science and culture would persuade the population that a better future was on the horizon. Herbert Morrison, the deputy prime minister who oversaw the festival’s creation, said the celebrations would be “a tonic for the nation”. Keen to be involved in the celebrations, the British computer company Ferranti promised the government it would contribute to the festival’s Exhibition of Science in South Kensington, London. But by late 1950, with the festival just weeks away, Ferranti still lacked an exhibit. John Bennett, an Australian employee of the firm, came to the rescue.

Bennett proposed creating a computer that could play Nim. In this simple parlour game players are presented with several piles of matches. Each player then takes it in turns to remove one or more of the matches from any one of the piles. The player who removes the last match wins. Bennett got the idea of a Nim-playing computer from the Nimatron, an electro-mechanical machine exhibited at the 1940 World’s Fair in New York City. Despite suggesting Ferranti create a game-playing computer, Bennett’s aim was not to entertain but to show off the ability of computers to do maths. And since Nim is based on mathematical principles it seemed a good example. Indeed, the guide book produced to accompany the Nimrod, as the computer exhibit was named, was at pains to explain that it was maths, not fun, that was the machine’s purpose: “It may appear that, in trying to make machines play games, we are wasting our time. This is not true as the theory of games is extremely complex and a machine that can play a complex game can also be programmed to carry out very complex practical problems.”
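The mathematical principle behind Nim can be illustrated with a short Python sketch. It uses the standard "nim-sum" strategy for the version of the game described above, in which whoever removes the last match wins. This is an illustration of the mathematics only: the function names are hypothetical, and nothing here is a description of how the Nimrod itself was wired or programmed.

```python
from functools import reduce
from operator import xor


def nim_sum(piles):
    """Bitwise XOR of all pile sizes (the 'nim-sum')."""
    return reduce(xor, piles, 0)


def best_move(piles):
    """Return (pile_index, matches_to_remove) for a winning move.

    Returns None when the nim-sum is already zero, meaning every
    available move leaves the opponent in a winning position.
    """
    total = nim_sum(piles)
    if total == 0:
        return None
    for index, size in enumerate(piles):
        # Shrinking this pile to size ^ total makes the overall nim-sum zero
        target = size ^ total
        if target < size:
            return index, size - target
    return None


# Example: piles of 3, 4 and 5 matches
print(best_move([3, 4, 5]))
```

For piles of 3, 4 and 5 the sketch suggests taking two matches from the first pile, leaving 1, 4 and 5, whose nim-sum is zero. A player who always hands the opponent a zero nim-sum will take the last match.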

Work to create the Nimrod began on the 1st December 1950 with Ferranti engineer Raymond Stuart-Williams turning Bennett’s designs into reality. By the 12th April 1951 the Nimrod was ready. It was a huge machine – 12 feet wide, five feet tall and nine feet deep – but the actual computer running the game accounted for no more than two per cent of its size. Instead the bulk of the machine was due to the multitude of vacuum tubes used to display lights, the electronic equivalent of the matches used in Nim. The resulting exhibit, which made its public debut on the 5th May 1951, boasted that the Nimrod was “faster than thought” and challenged the public to pit their wits against Ferranti’s “electronic brain”. The public was won over, but few showed any interest in the maths and science behind it. They just wanted to play. “Most of the public were quite happy to gawk at the flashing lights and be impressed,” said Bennett.

BBC radio journalist Paul Jennings described the Nimrod as a daunting machine in his report on the exhibition: “Like everyone else I came to a standstill before the electric brain or, as they prefer to call it, the Nimrod Digital Computer. This looks like a tremendous grey refrigerator…it’s absolutely frightening…I suppose at the next exhibition they’ll even have real heaps of matches and awful steel arms will come out of the machine to pick them up.”

After the Festival of Britain wound down in October, the Nimrod went on display at the Berlin Industrial Show and generated a similar response. Even West Germany’s economics minister Ludwig Erhard tried unsuccessfully to beat the machine. But, having impressed the public, Ferranti dismantled the Nimrod and got back to work on more serious projects.

Another traditional game to make an early transition to computers was Noughts and Crosses, which was recreated on the Electronic Delay Storage Automatic Calculator (EDSAC) at the University of Cambridge in England. Built in 1949 by Professor Maurice Wilkes, the head of the university’s mathematical laboratory, the EDSAC was as much a landmark in computing as the ENIAC. It was the first computer with memory that users could read, add or remove information from; memory now known as random access memory or RAM. For this Wilkes, who incidentally also tutored Bennett, is rightly regarded as a major figure in the evolution of computers but it would be one of his students who would recreate Noughts and Crosses on the EDSAC. Alexander Douglas wrote his version of the game for his 1952 PhD thesis on the interaction between humans and computers. Once he finished his studies, however, his Noughts and Crosses game was quickly forgotten, cast aside as a simple programme designed to illustrate a more serious point.

Others tried their hand at Checkers, with IBM employee Arthur ‘Art’ Samuel leading the way. As with all the other games created on computers at this time, Samuel’s computer versions of Checkers were not about entertainment but research. Like the Chess programmers, Samuel wanted to create a Checkers game that could defeat a human player. He completed his first Checkers game in 1952 on an IBM 701, the first commercial computer created by the company, and would spend the next two decades refining it. By 1955 he had developed a version that could learn from its mistakes, and IBM’s share price leapt 15 points when it was shown off on US television. By 1961 Samuel’s programme was defeating US Checkers champions.

* * *

At the same time as the scientists of the 1940s and 1950s were teaching computers to play board games, television sets were rapidly making their way into people’s homes. Although the television existed before the Second World War, the conflict saw factories cease production of TV sets to support the war effort by producing radar displays and other equipment for the military. The end of the war, however, produced the perfect conditions for television to take the world by storm. The technological breakthroughs made during the Second World War had brought down the cost of manufacturing TV sets and US consumers now had money to burn after years of austerity. In 1946 just 0.5 per cent of households owned a television. By 1950 this proportion had soared to 9 per cent and by the end of the decade there was a television in almost 90 per cent of US homes. While the shows on offer from the TV networks springing up across the US seemed enough to get sets flying off the shelves, several people involved in the world of TV began to wonder if the sets could be used for anything else beyond receiving programmes.

In 1947, the pioneering TV network Dumont became the first to explore the idea of allowing people to play games on their TV sets. Two of the company’s employees – Thomas Goldsmith and Estle Mann – came up with the Cathode-Ray Tube Amusement Device. Based on a simple electronic circuit, the device would allow people to fire missiles at a target, such as an aeroplane, stuck onto the screen by the player. The device would use the cathode-ray tube within the TV set to draw lines representing the trajectory of the missile and to create a virtual explosion if the target was hit.[2] Goldsmith and Mann applied for a patent for the idea in January 1947, which was approved the following year, but Dumont never turned the device into a commercial product.

A few years later another TV engineer had a similar thought. Born in Germany in 1922, Ralph Baer had spent most of his teenage years watching the rise of the Nazi Party in his home country and the subsequent oppression of his fellow Jews. Eventually, in September 1938, his family fled to the US just weeks before Kristallnacht, the moment when the Nazis’ oppression turned violent and Germany’s Jews began to be rounded up and sent to die in concentration camps. “My father saw what was coming and got all the paperwork together for us to go to New York,” he said. “We went to the American consulate and sat in his office. I spoke pretty good English. I guess being able to have that conversation with the consulate might have made all the difference because the quota for being let into the US was very small. If we hadn’t got into the quota then it would have been…[motions slicing of the neck].”