Collected Essays

Authors: Rudy Rucker

What it comes down to is that dry nanotechnology is about machines that we can design but can’t yet build, and wet technology is about machines that we can build but can’t yet design.

The field of nanotechnology was more or less invented by one man: Eric Drexler, who designed his own Ph.D. curriculum in nanotechnology while at MIT. Drexler’s 1986 book *Engines of Creation* was something of a popular science best-seller. This year he published a second popular book, *Unbounding the Future*, and a highly technical work called *Nanosystems: Molecular Machinery, Manufacturing, and Computation*.

Drexler has the high forehead and the hunched shoulders of a Hollywood mad scientist, but his personality is quite mild and patient. A few years ago, many people were ready to write off nanotechnology as a playground for nuts and idle dreamers. It is thanks to Drexler’s calm, nearly Vulcan, logicalness that the field continues to grow and evolve.

Our interview was taped at The First General Conference on Nanotechnology, which was held at the Palo Alto Holiday Inn in November, 1991. Despite the name, this wasn’t really the *first* “First Nanotechnology Conference,” as *that* one took place in 1989. But this was the first First Nanotechnology Conference open to the public, for fifty to a hundred dollars per day, and the public packed the lecture rooms to the rafters.

Rudy Rucker: Eric, what would be in your mind a benchmark, like something specific happening, where it started to look like nanotechnology was really taking off?

Eric Drexler: Well, if you’d asked me that in 1986 when *Engines of Creation* came out, I would have said that a couple of important benchmarks are the first successful design of a protein molecule from scratch (that happened in 1988) and another one would be the precise placement of atoms by some mechanical means. We saw that coming out of Don Eigler’s group. At present I would say that the next major milestone that I would expect is the ability to position reactive, organic molecules so that they can be used as building blocks to make some stable three-dimensional structure at room temperature.

RR: When people like to think of the fun dreams of things that could happen with nanotechnology, what are a couple of your favorite ones?

ED: I’ve mostly been thinking lately about efficient ways of transforming molecules into other molecules and making high density energy storage systems. But if you imagine the range of things that can be done in an era where you have a billion times as much computer power available, which would presumably include Virtual Reality applications, that’s one large class of applications.

RR: I notice that you’re talking on nanotechnology and space tomorrow. Can you give me a brief preview of your ideas there?

ED: The central problem in opening the space frontier has been transportation. How do you get into space economically, safely, routinely? And that’s largely a question of what you can build. With high strength-to-weight ratio materials of the kind that can be made by molecular manufacturing, calculations indicate that you can make a four-passenger single-stage-to-orbit vehicle with a lift-off weight that’s about equivalent to a heavy station wagon, and where the dry weight of the vehicle is sixty kilograms.

RR: So you would be using nanotechnology to make the material of the thing so thin and strong?

ED: Diamond fiber composites. Also, much better solar electric propulsion systems.

RR: I’ve noticed people seem to approach nanotechnology with a lot of humor. It’s almost like people are nervous. They can’t decide if it’s fantasy or if it’s real. For you it’s real; you think it’s going to happen?

ED: It’s hard for me to imagine a future in which it doesn’t happen, because there are so many ways of doing the job and so many reasons to proceed, and so many countries and companies that have reason to try.

RR: Could you make some comments about the notorious gray goo question?

ED: In *Engines of Creation* I over-emphasized the problem of someone making a self-replicating machine that could run wild. That’s a technical possibility and something we very much need to avoid, but I think it’s one of the smaller problems overall, because there’s very little incentive for someone to do it; it’s difficult to do; and there are so many other ways in which the technology could be abused where there’s a more obvious motive. For example, the use of molecular manufacturing to produce high performance weapon systems which could be more directly used to help with goals that we’ve seen people pursuing.

RR: I’ve heard people talk about injecting nanomachines into their blood and having them clean out their arteries. That’s always struck me as the last thing I would do. Having worked in the computer business and seen the impossibility of ever completely debugging a program, I can’t imagine shooting myself up with machines that had been designed by hackers on a deadline.

ED: In terms of the sequence of developments that one would expect to see, I think it *is* one of the last things that you’d expect to see.

Note on “Mr. Nanotech: Eric Drexler”

Written 1992.

Appeared in *Mondo 2000*, Spring, 1992.

At this point, I was still resisting the idea of nanotech. But it’s never a good idea for an SF writer to balk at the latest new wrinkles in science. In the years to come, I let nanotech into my heart—but in the more plausible form of biotech. Tweaked organisms seem a lot likelier than tiny Victorian-style machines made of diamond rods and gears.

Part 3: WEIRD SCREENS

Cellular Automata

What Are Cellular Automata?

Cellular automata are self-generating computer graphics movies. The most important near-term application of cellular automata will be to commercial computer graphics; in coming years you won’t be able to watch television for an hour without seeing some kind of CA.

Three other key applications of cellular automata will be to simulation of biological systems (Artificial Life), to simulation of physical phenomena (such as heat-flow and turbulence), and to the design of massively parallel computers.

Most of the cellular automata I’ve investigated are two-dimensional cellular automata. In these programs the computer screen is divided up into “cells” which are colored pixels or dots. Each cell is repeatedly “updated” by changing its old color to a new color. The net effect of the individual updates is that you see an ever-evolving sequence of screens. A graphics program of this nature is specifically called a cellular automaton when it is (1) parallel, (2) local, and (3) homogeneous.

(1) *Parallelism* means that the individual cell updates are performed independently of each other. That is, we think of all of the updates being done at once. (Strictly speaking, your computer only updates one cell at a time, but we use a buffer to store the new cell values until a whole screen’s worth has been computed to refresh the display.)

(2) *Locality* means that when a cell is updated, its new color value is based solely on the old color values of the cell and of its nearest neighbors.

(3) *Homogeneity* means that each cell is updated according to the same rules. Typically the color values of the cell and of its nearest eight neighbors are combined according to some logico-algebraic formula, or are used to locate an entry in a preset lookup table.
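The three properties can be made concrete with a short Python sketch. This is only an illustration, not any particular program described here: the rule is Conway's Game of Life, chosen because it is familiar, the cells have two colors (0 and 1), and the screen is assumed to wrap around at its edges. The names `life_rule` and `step` are made up for the example.

```python
from typing import List

Grid = List[List[int]]


def life_rule(cell: int, live_neighbors: int) -> int:
    """Conway's Game of Life, used here only as a familiar example rule."""
    if cell == 1:
        return 1 if live_neighbors in (2, 3) else 0
    return 1 if live_neighbors == 3 else 0


def step(grid: Grid) -> Grid:
    """Compute one full-screen update of a two-color, two-dimensional CA."""
    rows, cols = len(grid), len(grid[0])
    # Parallelism: new values go into a separate buffer, so every cell
    # sees only the OLD screen, as if all updates happened at once.
    new_grid = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            # Locality: look only at the eight nearest neighbors
            # (edges wrap around, which is an assumption of this sketch).
            live = 0
            for dr in (-1, 0, 1):
                for dc in (-1, 0, 1):
                    if dr == 0 and dc == 0:
                        continue
                    live += grid[(r + dr) % rows][(c + dc) % cols]
            # Homogeneity: the same rule is applied at every cell.
            new_grid[r][c] = life_rule(grid[r][c], live)
    return new_grid


if __name__ == "__main__":
    # A three-cell bar on a 5 x 5 screen, updated twice.
    screen = [[0] * 5 for _ in range(5)]
    for c in (1, 2, 3):
        screen[2][c] = 1
    for _ in range(3):
        print("\n".join("".join("#" if x else "." for x in row) for row in screen))
        print()
        screen = step(screen)
```

Swapping `life_rule` for a different formula, or for a lookup into a preset table, changes the rule without touching the update loop.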

Cellular automata can act as good models for physical, biological and sociological phenomena. The reason for this is that each person, or cell, or small region of space “updates” itself independently (parallelism), basing its new state on the appearance of its immediate surroundings (locality) and on some generally shared laws of change (homogeneity).

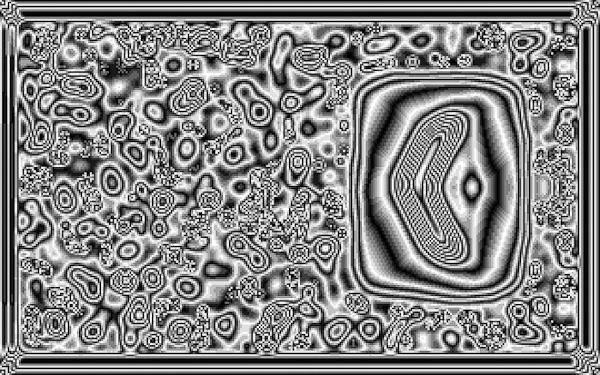

A cellular automaton rule that emulates boiling.

As a simple example of a physical CA, imagine sitting at the edge of a swimming pool, stirring the water with your feet. How quickly the pool’s surface is updated! The “computation” is so fast because it is parallel: all the water molecules are computing at once (parallelism). And how does a molecule compute? It reacts to forces from its neighbors (locality), in accordance with the laws of physics (homogeneity).

Why Cellular Automata?

The remarkable thing about CAs is their ability to produce interesting and logically deep patterns on the basis of very simply stated preconditions. Iterating the steps of a CA computation can produce fabulously rich output. A good CA is like an acorn which grows an oak tree, or more accurately, a good CA is like the DNA inside the acorn, busily orchestrating the protein nanotechnology that builds the tree.
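To see how little needs to be stated, here is a minimal Python sketch of a one-dimensional CA driven by an eight-entry lookup table. The choice of rule 30 (in Wolfram's standard numbering of the two-color, nearest-neighbor rules), the wrap-around edges, and the function names `make_table` and `run` are all assumptions made for illustration.

```python
from typing import Dict, List, Tuple


def make_table(rule_number: int) -> Dict[Tuple[int, int, int], int]:
    """Build the lookup table mapping (left, center, right) to the new value."""
    table = {}
    for index in range(8):
        left, center, right = (index >> 2) & 1, (index >> 1) & 1, index & 1
        # Bit number `index` of the rule number is the output for this triple.
        table[(left, center, right)] = (rule_number >> index) & 1
    return table


def run(rule_number: int, width: int, steps: int) -> List[List[int]]:
    """Start from a single live cell and return every row that is generated."""
    table = make_table(rule_number)
    row = [0] * width
    row[width // 2] = 1
    history = [row]
    for _ in range(steps):
        # Each new row is built entirely from the old row (edges wrap around).
        row = [
            table[(row[(i - 1) % width], row[i], row[(i + 1) % width])]
            for i in range(width)
        ]
        history.append(row)
    return history


if __name__ == "__main__":
    for line in run(30, 63, 30):
        print("".join("#" if x else "." for x in line))
```

The whole "precondition" is one number and one starting cell; printing successive rows one under another gives a triangular pattern that does not settle into any obvious repetition.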

One of computer science’s greatest tasks at the turn of the Millennium is to humanize our machines by making them “alive.” The dream is to construct intelligent Artificial Life (called “A-Life” for short). In Cambridge, Los Alamos, Silicon Valley and beyond, this is the programmer’s Great Work as surely as the search for the Philosopher’s Stone was the Great Work of the medieval alchemists.

There are two approaches to the problem of creating A-Life: the *top-down* approach, and the *bottom-up* approach.

The *top-down* approach is associated with AI (Artificial Intelligence), the *bottom-up* with CA (the study of cellular automata). Both approaches are needed for intelligent Artificial Life, and I predict that someday soon chaos theory, neural nets and fractal mathematics will provide a bridge between the two. What a day that will be when our machines begin to live and speak and breed, a day like May 10, 1869, when the final golden spike completed the U.S. transcontinental railroad! The study of CAs brings us ever closer to the forging of that last golden link in the great chain between bottom and beyond. If all goes well, many of us will see live robot boppers on the moon.

A heckler might say, “Sure that’s fine, but why are CAs needed? Why have a *bottom-up* approach at all? What do mindless colored dots have to do with intelligent Artificial Life?”

For all humanity’s spiritual qualities, we need matter to live on. And CAs can act as the “matter” on which intelligent life can evolve. CAs provide a lively, chaotic substrate capable of supporting the most diverse emergent behaviors. Indeed, it is at least possible that human life itself is quite literally based on CAs.

How so? View a person as wetware: as a protein factory. The proteins flip about, generating hormones, storing memories. Looking deeper, observe that the proteins’ nanotech churning is a pattern made up of flows and undulations in the potential surfaces of quantum chemistry. These surfaces “smell out” minimal energy configurations by using the fine fuzz of physical vacuum noise—far from being like smooth rubber sheets, they are like pocked ocean swells in a rainstorm. The quantum noise obeys local rules that are quite mathematical; and these rules can be well simulated by CAs.

Why is it that CAs are so good at simulating physics? Because, just like CAs, physics is (1) parallel, (2) local, and (3) homogeneous. Restated in terms of nature, these principles say that (1) the world is happening in many different places at once, (2) there is no action at a distance and (3) the laws of nature are the same everywhere.

Whether or not the physical world really is a cellular automaton, the point is that CAs are rich enough that a “biological” world could live on them. We human hackers live on language games on biology on chemistry on physics on mathematics on—something very like the iterated parallel computations of a CA.

Life needs something to live on, intelligence needs something to think on, and it is this seething information matrix which CAs can provide. If AI is the surfer, CA is the sea. That’s why I think cellular automata are interesting: A-Life! CAs will lead to intelligent Artificial Life!

Another interesting thing about CAs is that they are a universal form of computation. That is, any computation can be modeled (usually inefficiently) as a CA process. The question then becomes whether computations can be done *better* as CAs.

It’s clear that certain kinds of parallel computations can be done more rapidly and efficiently by a succession of parallel CA steps. And one does best to use the CA intrinsically, rather than simply using it as a simulation of the old serial mode; emulating the gates of an Intel chip is not the way to go. No, when we use CAs best, we do not use them as simulations of circuit diagrams. While behaviors can be found in *top-down* expert-system style by harnessing particular patterns to particular purposes, I think by far the more fruitful course is to use the *bottom-up* freestyle surfing CA style summed up in the slogan: