Chris Crawford on Interactive Storytelling (53 page)


Authors: Chris Crawford

There’s another complicating factor to consider. Suppose that Lancelot prudently declines to mention his adultery to Arthur, but then he encounters Mordred. Mordred’s reaction is of no concern to Lancelot, so he might be tempted to reveal the fatal truth—which of course Mordred would be eager to learn so that he could use it to weaken the bonds that tie the trio together. Conceivably, the same factors that would make Lancelot unwilling to talk to Mordred about anything would protect him in this case. But unless this problem is handled explicitly, the engine might produce foolish use of Gossip.

The solution to this problem lies in calculating the likelihood of information reaching Arthur from the listener. This calculation can be tricky, but it makes use of the same algorithms that underlie the Gossip system, so no new algorithms need be developed. The only new factor is considering the likelihood that an Actor will betray a Secret; this factor is calculated from that Actor’s loyalty to the Secret-holder in relation to his loyalty to the listener he’s considering telling the Secret to. Be warned: These calculations can become quite intricate.

For example, if Lancelot is considering telling Galahad about his affair with Guinevere, he needs to determine whether he can trust Galahad. Is Galahad loquacious? Is Galahad’s PerVirtue toward Lancelot higher than his PerVirtue toward Arthur? If the first PerVirtue is higher than the second, that means Galahad likes Lancelot more than he likes Arthur, which suggests that he’ll keep the Secret.
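The trust check in this example can be sketched in Python. This is only an illustration under stated assumptions: the `Actor` class, the `loquacity` trait, the value ranges, and the equal blend of loyalty and loquacity are all hypothetical, since the text names the inputs but does not give the formula.

```python
from dataclasses import dataclass, field


@dataclass
class Actor:
    name: str
    loquacity: float = 0.0  # assumed range [0, 1]; 1 means cannot keep quiet
    # Assumed range [-1, 1]: this Actor's PerVirtue toward each other Actor.
    per_virtue: dict = field(default_factory=dict)


def betrayal_likelihood(confidant, secret_holder, third_party):
    """Rough likelihood in [0, 1] that confidant passes the Secret to third_party.

    Compares the confidant's PerVirtue toward the secret holder with his
    PerVirtue toward the third party, then blends in loquacity. The 50/50
    blend is an assumption made for illustration only.
    """
    toward_holder = confidant.per_virtue.get(secret_holder.name, 0.0)
    toward_third = confidant.per_virtue.get(third_party.name, 0.0)
    loyalty_gap = toward_third - toward_holder      # in [-2, 2]
    loyalty_term = (loyalty_gap + 2.0) / 4.0        # rescaled to [0, 1]
    return 0.5 * loyalty_term + 0.5 * confidant.loquacity


lancelot = Actor("Lancelot")
arthur = Actor("Arthur")
galahad = Actor("Galahad", loquacity=0.1,
                per_virtue={"Lancelot": 0.8, "Arthur": 0.2})

risk = betrayal_likelihood(galahad, lancelot, arthur)
```

Because Galahad's PerVirtue toward Lancelot exceeds his PerVirtue toward Arthur, `risk` comes out below 0.5, matching the conclusion that he is likely to keep the Secret.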

Lesson #36

Calculating anticipation behavior requires complex algorithms.

Anticipation can also be applied in any decisions Actors make. After all, Lancelot and Guinevere should have taken Arthur’s likely reaction into account when they first decided to bed down, not just when they decide whether to talk about it. The basic approach is to add an automatic factor to the inclination formula for the Verb in question. That factor is similar to the factor for determining the desirability of revealing information, but there are two additional considerations. First, in the Gossip system, you’re considering one person’s likely reaction; for a Verb option that an Actor is considering, every other Actor’s likely reaction must be taken into account. In other words, when Guinevere decides whether to sleep with Lancelot, she must consider the reaction of every Actor in the cast. She must then weight (not weigh: weight) each Actor’s reaction by her own relationship with that Actor. All those reactions should then be added up to produce an overall assessment of how unpopular the decision might make her.

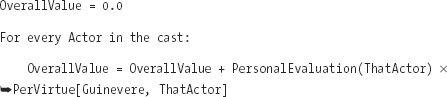

Here’s a simplified example of the process: Guinevere is considering whether to sleep with Lancelot. She needs to figure out whether people will react favorably or unfavorably to this decision. For simplicity, I’ll boil down the reaction to a single variable called PersonalEvaluation. This variable measures how favorably or unfavorably an Actor would regard Guinevere’s decision to sleep with Lancelot. Remember that Guinevere doesn’t want to treat all Actors equally; she cares more about how her friends react than how acquaintances react. So the algorithm she would use looks like this:

Each PersonalEvaluation is weighted by Guinevere’s emotional attachment to that Actor. People she cares about are given more weight in the calculation; their opinions count for more. Conversely, people Guinevere doesn’t like are given negative weight; if they don’t like her action, so much the better, as far as she’s concerned.
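The weighting just described amounts to a weighted sum, which can be sketched in Python as follows. This is reconstructed from the prose alone and is not the book's own formula; the function name, the dictionary layout, the numeric values, and the assumed range of -1 to 1 for both quantities are illustrative assumptions.

```python
def anticipated_reaction(personal_evaluation, affection):
    """Sum each Actor's PersonalEvaluation, weighted by the decider's affection.

    personal_evaluation: Actor name -> how favorably that Actor would regard
        the decision (assumed range -1 to 1).
    affection: Actor name -> the decider's emotional attachment to that Actor
        (assumed range -1 to 1; negative means dislike).
    Actors with no recorded affection contribute nothing.
    """
    return sum(
        affection.get(actor, 0.0) * evaluation
        for actor, evaluation in personal_evaluation.items()
    )


# Hypothetical numbers for Guinevere's decision.
evaluations = {"Arthur": -1.0, "Lancelot": 1.0, "Mordred": -0.5}
guinevere_affection = {"Arthur": 0.6, "Lancelot": 0.9, "Mordred": -0.8}

overall = anticipated_reaction(evaluations, guinevere_affection)
```

Note how Mordred's disapproval counts in favor of the decision: a negative evaluation multiplied by a negative weight yields a positive contribution.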

Second is considering the probability that the Event can be kept secret. If, for example, Guinevere is contemplating adultery with Lancelot, she knows it’s very much in her interest as well as Lancelot’s to keep it secret. If they carry out their affair in secrecy, she can be confident that Lancelot will not reveal the Secret and Arthur will never know—in which case the consequences of the choice need not apply. This calculation is made using the algorithms described previously for determining the likelihood that a Secret can be kept.
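One simple way to fold secrecy into the calculation is to discount the anticipated reaction by the probability that the Event stays secret. The Python sketch below assumes that probability has already been computed elsewhere; the function name and the linear discount are assumptions made for illustration, not a formula taken from the text.

```python
def expected_consequence(reaction_if_known, p_kept_secret):
    """Scale an anticipated reaction by the chance that the Event leaks out.

    reaction_if_known: the reaction that applies if the Event becomes known.
    p_kept_secret: probability in [0, 1] that the Secret can be kept.
    """
    if not 0.0 <= p_kept_secret <= 1.0:
        raise ValueError("p_kept_secret must lie between 0 and 1")
    return (1.0 - p_kept_secret) * reaction_if_known
```

With `p_kept_secret` equal to 1, the consequences vanish entirely, which corresponds to the case in which Arthur will never know.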

The toughest problem in anticipation is including logical inferences. Suppose, for example, that Guinevere tells Arthur she’s going to spend the night at her mother’s house, and Arthur later discovers that Guinevere didn’t do that. In your mind and mine, that’s immediate grounds for suspicion. So how do you equip Arthur with the logic he needs to infer the possibility of hanky-panky?

Artificial intelligence (AI) researchers have developed a technology known as an inference engine to deal with this kind of problem. An inference engine is a general-purpose program that can take statements about reality that have been coded in the proper form and use those statements to draw inferences. In its simplest form, an inference engine does little more than arrange syllogisms into sequences. Advanced inference engines can carry out extensive logical calculations and collate many factors to draw surprising conclusions.

In practice, inference engines require a great deal of information to be coded into their databases before they can do anything useful. Take the example of Guinevere failing to spend the night at her mother’s house. How would the inference engine come to the conclusion that something fishy was afoot?

The starting point would be these two statements:

1. Guinevere said she would spend the night at her mother’s.

2. Guinevere did not spend the night at her mother’s.

The inference engine must first detect the discrepancy between the two statements, not too difficult a task. Having established that discrepancy, the inference engine must next attempt to explain it. To do this, it must have at its fingertips a wealth of information about human behavior, such as the following axiom:

3. When there is a discrepancy between what somebody says and the truth, that discrepancy is due to a lie or an extraordinary event.

So now the inference engine must examine two paths: Was it a lie or an extraordinary event? To determine which is more likely, the inference engine must make use of a statement rather like this:

4. People readily gossip about extraordinary events.

And then add in this observation:

5. Guinevere did not gossip to Arthur about any extraordinary events.

Using these two pieces of information, the inference engine can conclude:

6. Guinevere was not prevented from spending the night at her mother’s by an extraordinary event.

Combined with #3, this statement leads to the conclusion:

7. Guinevere lied about spending the night at her mother’s.
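The chain of reasoning above can be sketched as a tiny forward-chaining engine in Python. This is a toy under stated assumptions, not a real inference engine: the fact names are invented labels, and the general axioms about discrepancies and gossip have been hand-compiled into rules specific to this one case.

```python
# Observations available to Arthur, encoded as plain string labels.
FACTS = {
    "said_would_stay_at_mothers",
    "did_not_stay_at_mothers",
    "no_gossip_about_extraordinary_event",
}

# Each rule is (premises, conclusion): if every premise is known,
# the conclusion may be added to the set of known facts.
RULES = [
    # Detect the discrepancy between word and deed.
    ({"said_would_stay_at_mothers", "did_not_stay_at_mothers"}, "discrepancy"),
    # People readily gossip about extraordinary events, so silence
    # is taken to rule out an extraordinary event.
    ({"no_gossip_about_extraordinary_event"}, "no_extraordinary_event"),
    # A discrepancy is due to a lie or an extraordinary event;
    # with the latter ruled out, only the lie remains.
    ({"discrepancy", "no_extraordinary_event"}, "lied"),
]


def forward_chain(facts, rules):
    """Apply rules repeatedly until no new conclusion can be drawn."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if premises <= known and conclusion not in known:
                known.add(conclusion)
                changed = True
    return known


conclusions = forward_chain(FACTS, RULES)
```

Even this small case needs three hand-written rules that apply to nothing else. Remove the gossip observation from `FACTS` and the engine can no longer reach `"lied"`.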

With this example, my point should be obvious: To carry out the calculations necessary for an inference engine, a prohibitively large amount of information about the world must be entered into the inference engine’s database. And in fact, AI researchers have learned that inference engines are practical only in special cases in which the domain of applicable knowledge is small and tightly defined. For general-purpose use such as interactive storytelling, too much information is required to build a proper inference engine. Nevertheless, it remains an important field of effort; someday, when we can afford to build large databases of human behavior, inference engines will play a role in interactive storytelling technology.

Lesson #37

Someday inference engines will be useful in interactive storytelling— but not yet.

Another approach to inferring the behavior of others is to explore the tree of possibilities an Actor could follow. If the storytelling engine uses a definable system of links between Events, it should be possible to trace through those links to determine what might happen. This process will likely entail a considerable amount of programming effort; in overall structure, it’s similar to the tree-searching methods used in chess-playing programs. The good news is that this kind of tree-searching usually requires fewer branches at each branchpoint; the bad news is that each decision is intrinsically more complex than a decision in a chess game because so many more variables are involved.

At each point in a chess game, there might be a dozen pieces that could move, and each piece might be able to move in, say, five different ways. That means a chess-playing program would have to consider about 60 possible moves at each turn. On the other hand, each move is fairly easy to evaluate; there are only so many pieces on the board and so many geometric relationships to consider.

The tree for interactive storytelling is much messier. Each Actor might have only half a dozen options at each stage in the tree, but the basis for evaluating those options is far more complicated than the basis for evaluating chess moves. Personality traits, relationships, roles, historical factors, and moods must all be taken into consideration. These calculations pose a stiff challenge to designers.

Moreover, the decisions made at each juncture must be based on the calculating Actor’s perception of the relevant variables, not their true values. For example, if Mordred is unaware of the budding love between Lancelot and Guinevere, he won’t be motivated to attempt to further their affair as a ploy to damage Arthur. All this complexity makes tree analysis immensely difficult; I suspect that the first generation of interactive storytelling engines will refrain from using this technique.
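A minimal Python sketch of such a search, run over an Actor's perceptions rather than the true state of the world, might look like the following. It is deliberately simplified to a single Actor planning ahead, and the belief labels, Verbs, and scoring function are all hypothetical.

```python
def best_plan(beliefs, options_for, evaluate, depth):
    """Depth-limited search over what an Actor believes to be true.

    beliefs: frozenset of facts as the Actor perceives them.
    options_for: function mapping beliefs to a list of (verb, new_beliefs).
    evaluate: function scoring a set of beliefs from the Actor's viewpoint.
    Returns (best score found, list of Verbs leading to it).
    """
    best_score, best_path = evaluate(beliefs), []
    if depth == 0:
        return best_score, best_path
    for verb, next_beliefs in options_for(beliefs):
        score, path = best_plan(next_beliefs, options_for, evaluate, depth - 1)
        if score > best_score:
            best_score, best_path = score, [verb] + path
    return best_score, best_path


def mordred_options(beliefs):
    """Hypothetical Verbs open to Mordred, gated on what he perceives."""
    options = []
    if "lancelot_loves_guinevere" in beliefs and "affair_encouraged" not in beliefs:
        options.append(("encourage_affair", beliefs | {"affair_encouraged"}))
    if "affair_encouraged" in beliefs and "arthur_told" not in beliefs:
        options.append(("tell_arthur", beliefs | {"arthur_told"}))
    return options


def mordred_score(beliefs):
    """Mordred values only the outcome that damages Arthur."""
    return 1.0 if "arthur_told" in beliefs else 0.0


unaware = best_plan(frozenset(), mordred_options, mordred_score, depth=2)
aware = best_plan(frozenset({"lancelot_loves_guinevere"}),
                  mordred_options, mordred_score, depth=2)
```

When Mordred does not perceive the budding love, the search finds no useful plan at all. When he does, it finds the two-step ploy of encouraging the affair and then telling Arthur. A fuller version would alternate between Actors and evaluate personality traits, relationships, roles, history, and moods at every node, which is where the real difficulty lies.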

Endowing Actors with anticipation is a maddeningly difficult task; at heart it requires recursive programming, in which one Actor attempts to think like another, and then uses that anticipatory information to make decisions. Extended anticipation, in which an Actor tries to follow a chain of likely decisions by other Actors, is even more difficult. For the moment, anticipatory behavior lies on the frontiers of interactive storytelling.

The basic task of any storytelling engine is to figure out, after an Event takes place, what happens next; this task is referred to as sequencing. Common sense and dramatic logic restrict the number of appropriate reactions to an Event. After all, if Romeo discovers Juliet dead in the tomb, he shouldn’t have the option to play ping-pong; that’s just not dramatically appropriate. Indeed, for most Events, the range of dramatically reasonable options is quite narrow.

But why shouldn’t I be able to play ping-pong or do whatever I want during the course of the story? It’s my story, after all.


No, it isn’t; it’s our story. I as storybuilder define the reasonable options available to you as player; you choose among those options. (See Lesson #31 in Chapter 12, “Drama Managers.”) That’s the division of responsibility within the medium of interactive storytelling.

But what if I don’t like the options you offer me? What if I want that third option?
