Brilliant Blunders: From Darwin to Einstein - Colossal Mistakes by Great Scientists That Changed Our Understanding of Life and the Universe

Authors: Mario Livio

I should note that Einstein himself made one interesting attempt to connect the cosmological constant to elementary particles. In what could be regarded as his first foray into the arena of trying to unify gravity and electromagnetism, Einstein proposed in 1919 that perhaps electrically charged particles are being held together by gravitational forces. This led him to an electromagnetic constraint on the value of the cosmological constant. Apart, however, from one additional short note on the subject in 1927, Einstein never returned to this topic.

The idea that the vacuum is not empty but, rather, could contain a vast amount of energy is not really new. It was first proposed by the German physical chemist Walther Nernst in 1916, but since he was interested primarily in chemistry, Nernst did not consider the implications of his idea for cosmology.

The practitioners of quantum mechanics in the 1920s, Wolfgang Pauli in particular, did discuss the fact that in the quantum domain the lowest possible energy of any field is not zero. This so-called zero-point energy is a consequence of the wavelike nature of quantum mechanical systems, which causes them to undergo jittery fluctuations even in their ground state. However, even Pauli’s conclusions did not propagate into cosmological considerations. The first person to specifically connect the cosmological constant to the energy of empty space was Lemaître. In a paper published in 1934, not long after he had met with Einstein, Lemaître wrote, “Everything happens as though the energy in vacuo would be different from zero.” He then went on to say that the energy density of the vacuum must be associated with a negative pressure, and that “this is essentially the meaning of the cosmological constant Λ.”

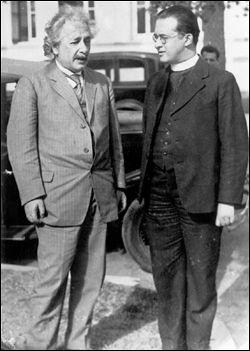

Figure 37

shows Einstein and Lemaître meeting in Pasadena in January 1933.

Figure 37

As perceptive as Lemaître’s comments were, the subject lay dormant for more than three decades until a brief revival of interest in the cosmological constant attracted the attention of the versatile Jewish Belarusian physicist Yakov Zeldovich. In 1967 Zeldovich made the first genuine attempt to calculate the contribution of vacuum jitters to the value of the cosmological constant. Unfortunately, along the way, he made some ad hoc assumptions without articulating his reasoning. In particular, Zeldovich assumed that most of the zero-point energies somehow cancel out, leaving only the gravitational interaction between the virtual particles in the vacuum. Even with this unjustified omission, the value that he obtained was totally unacceptable; it was about a billion times larger than the energy density of all the matter and radiation in the observable universe.

More recent attempts to estimate the energy of empty space have only exacerbated the problem, producing values that are much, much higher—so high, in fact, that they cannot be considered anything but absurd. For instance, physicists first assumed naïvely that they could sum up the zero-point energies up to the scale where our theory of gravity breaks down, that is, to the point at which the universe was so small that one needs a quantum theory of gravity (a theory that does not exist currently). In other words, the hypothesis was that the cosmological constant should correspond to the cosmic density when the universe was only a tiny fraction of a second old, even before the masses of the subatomic particles were imprinted. However, when particle physicists carried out that estimate, it resulted in a value that was about 123 orders of magnitude (1 followed by 123 zeros) greater than the combined cosmic energy density in matter and radiation. The ludicrous discrepancy prompted physics Nobel laureate Steven Weinberg to dub it “the worst failure of an order-of-magnitude estimate in the history of science.” Obviously, if the energy density of empty space were truly that high, not only would galaxies and stars not have existed but also the enormous repulsion would have instantly torn apart even atoms and nuclei. In a desperate attempt to correct the guesstimate, physicists used symmetry principles to conjecture that adding up the zero-point energies should be cut off at some lower energy. Dismally, even though the revised estimate resulted in a considerably lower value, the energy was still some 53 orders of magnitude too high.
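To get a feel for where a figure like 123 orders of magnitude can come from, here is a rough back-of-the-envelope sketch in Python. It rests on assumptions that are not in the text: the cutoff is taken to be the Planck density c^5/(ħG^2), the Hubble constant is set to a round 70 km/s/Mpc, and matter is assumed to make up about 27 percent of the critical density. The function names are illustrative only, and other reasonable conventions shift the answer by a few orders of magnitude.

```python
import math

# Physical constants in SI units (rounded values).
C = 2.998e8            # speed of light, m/s
HBAR = 1.0546e-34      # reduced Planck constant, J s
G = 6.674e-11          # Newton's gravitational constant, m^3 kg^-1 s^-2
MPC_IN_M = 3.0857e22   # metres in one megaparsec


def planck_density():
    """Mass density at the Planck scale, c^5 / (hbar * G^2), in kg/m^3.

    Assumption: this stands in for the naive vacuum-energy estimate.
    """
    return C**5 / (HBAR * G**2)


def critical_density(h0_km_s_mpc=70.0):
    """Critical density 3 H0^2 / (8 pi G) in kg/m^3.

    Assumption: H0 = 70 km/s/Mpc is a round illustrative value.
    """
    h0 = h0_km_s_mpc * 1e3 / MPC_IN_M  # convert to 1/s
    return 3 * h0**2 / (8 * math.pi * G)


def orders_of_magnitude_gap(matter_fraction=0.27):
    """log10 of (naive vacuum density) / (present-day matter density)."""
    matter_density = matter_fraction * critical_density()
    return math.log10(planck_density() / matter_density)


if __name__ == "__main__":
    print(f"gap: about {orders_of_magnitude_gap():.1f} orders of magnitude")
```

Under these assumptions the gap comes out at roughly 123 orders of magnitude, in line with the figure quoted above, although the agreement should be read as illustrative rather than as a reconstruction of the physicists' actual calculation.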

Faced with this crisis, some physicists resorted to believing that a yet-undiscovered mechanism somehow completely cancels out all the different contributions to the energy of the vacuum, to produce a value of exactly zero for the cosmological constant. You’ll recognize that mathematically speaking, this is precisely equivalent to Einstein’s simple removal of the cosmological constant from his equations. Assuming that the cosmological constant vanishes means that the repulsive term need not be included in the equation. The reasoning, however, was completely different. Hubble’s discovery of the cosmic expansion quickly subverted Einstein’s original motivation for introducing the cosmological constant. Even so, many physicists regarded as unjustified the assignment of the specific value of zero to lambda for the mere sake of brevity or as remedy to a “bad conscience.” In its modern guise as the energy of empty space, on the other hand, the cosmological constant appears to be obligatory from the perspective of quantum mechanics, unless all the different quantum fluctuations somehow conspire to add up to zero. This inconclusive, frustrating situation lasted until 1998, when new astronomical observations turned the entire subject into what is arguably the most challenging problem facing physics today.

The Accelerating Universe

Since Hubble’s observations in the late 1920s, we have known that we live in an expanding universe. Einstein’s theory of general relativity provided the natural interpretation of Hubble’s findings: The expansion is a stretching of the fabric of space-time itself. The distance between any two galaxies increases just as the distance between any two paper chads glued to the surface of a spherical balloon would increase if the balloon were inflated. However, in the same way that the Earth’s gravity slows down the motion of any object thrown upward, one would anticipate that the cosmic expansion should be slowing, due to the mutual gravitational attraction of all the matter and energy within the universe. But in 1998 two teams of astronomers, working independently, discovered that the cosmic expansion is not slowing down; in fact, over the past six billion years, it has been speeding up! One team, the Supernova Cosmology Project, was led by Saul Perlmutter of the Lawrence Berkeley National Laboratory, and the other, the High-Z Supernova Search Team, was led by Brian Schmidt of Mount Stromlo and Siding Spring Observatory and Adam Riess of the Space Telescope Science Institute and the Johns Hopkins University.

The discovery of accelerating expansion came as a shock initially, since it implied that some form of repulsive force—of the type expected from the cosmological constant—propels the universe’s expansion to speed up. To reach their surprising conclusion, the astronomers relied on observations of very bright stellar explosions known as Type Ia supernovae. These exploding stars are so luminous (at maximum light, they may outshine their entire host galaxies) that they can be detected (and the evolution of their brightness followed) more than halfway across the observable universe. In addition, what makes Type Ia supernovae particularly suitable for this type of study is the fact that they are excellent standard candles: Their intrinsic luminosities at peak light are nearly the same, and the small deviations from uniformity that exist can be calibrated empirically. Since the observed brightness of a light source is inversely proportional to the square of its distance—an object that is three times farther than another is nine times dimmer—knowledge of the intrinsic luminosity combined with measurement of the apparent one allows for a reliable determination of the source’s distance.
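The inverse-square reasoning can be sketched in a few lines of Python. This is a minimal illustration, assuming an idealized source that radiates equally in all directions through static, transparent space; the function names are hypothetical and the cosmological corrections that real supernova analyses apply are left out.

```python
import math


def dimming_factor(distance_ratio):
    """How many times dimmer a source looks when moved distance_ratio times farther."""
    return distance_ratio ** 2


def observed_flux(luminosity_watts, distance_m):
    """Flux (W/m^2) received from an isotropic source at the given distance."""
    return luminosity_watts / (4 * math.pi * distance_m ** 2)


def distance_from_brightness(luminosity_watts, flux_watts_per_m2):
    """Invert the inverse-square law: distance (m) from known luminosity and measured flux."""
    return math.sqrt(luminosity_watts / (4 * math.pi * flux_watts_per_m2))


if __name__ == "__main__":
    # Three times farther means nine times dimmer.
    print(dimming_factor(3))
```

Knowing the luminosity (the "standard candle" part) and measuring the flux is what lets `distance_from_brightness` return a distance; without a trusted luminosity the same flux would be compatible with any distance.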

Type Ia supernovae are very rare, occurring roughly only once per century in a given galaxy. Consequently, each team had to examine thousands of galaxies to collect a sample of a few dozen supernovae. The astronomers determined the distances to these supernovae and their host galaxies, and the recession velocities of the latter. With these data at hand, they compared their results with the predictions of a linear Hubble’s law. If the expansion of the universe were indeed slowing, as everyone expected, they should have found that galaxies that are, say, two billion light-years away, appear brighter than anticipated, since they would be somewhat closer than where uniform expansion would predict. Instead, Riess, Schmidt, Perlmutter, and their colleagues found that the distant galaxies appeared dimmer than expected, indicating that they had reached a larger distance. A precise analysis showed that the results imply a cosmic acceleration for the past six billion years or so. Perlmutter, Schmidt, and Riess shared the 2011 Nobel Prize in physics for their dramatic discovery.
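The "dimmer than expected" comparison can be illustrated with a small numerical sketch. It is not the teams' analysis: it assumes a spatially flat universe, uses a matter-only model as a stand-in for the decelerating case, and uses a 27 percent matter, 73 percent vacuum split for the accelerating case. Distances are expressed in units of c/H0 so that no value of the Hubble constant needs to be assumed, and all names are illustrative.

```python
import math


def expansion_rate(z, omega_matter, omega_vacuum):
    """Dimensionless expansion rate H(z)/H0 for a flat universe (assumed)."""
    return math.sqrt(omega_matter * (1 + z) ** 3 + omega_vacuum)


def luminosity_distance(z, omega_matter, omega_vacuum, steps=10000):
    """Luminosity distance in units of c/H0, using midpoint-rule integration."""
    dz = z / steps
    comoving = sum(
        dz / expansion_rate((i + 0.5) * dz, omega_matter, omega_vacuum)
        for i in range(steps)
    )
    return (1 + z) * comoving


def extra_dimming_magnitudes(z):
    """Magnitude difference between the accelerating and decelerating models.

    Positive values mean the supernova looks fainter in the accelerating model.
    """
    d_decel = luminosity_distance(z, 1.0, 0.0)     # matter only (assumed comparison case)
    d_accel = luminosity_distance(z, 0.27, 0.73)   # matter plus vacuum energy
    return 5 * math.log10(d_accel / d_decel)


if __name__ == "__main__":
    for z in (0.2, 0.5, 1.0):
        print(f"z = {z}: fainter by {extra_dimming_magnitudes(z):.2f} mag")
```

At any given redshift the accelerating model places the supernova farther away, so it appears fainter, which is the qualitative signature described above.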

Since the initial discovery in 1998, more pieces of this puzzle have emerged, all corroborating the fact that some new form of a smoothly distributed energy is producing a repulsive gravity that is pushing the universe to accelerate. First, the sample of supernovae has increased significantly and now covers a wide range of distances, putting the findings on a much firmer basis. Second, Riess and his collaborators have shown by subsequent observations that an earlier epoch of deceleration preceded the current six-billion-year-long accelerating phase in the cosmic evolution. A beautifully compelling picture emerges: When the universe was smaller and much denser, gravity had the upper hand and was slowing the expansion. Recall, however, that the cosmological constant, as its name implies, does not dilute; the energy density of the vacuum is constant. The densities of matter and radiation, on the other hand, were enormously high in the very early universe, but they have decreased continuously as the universe expanded. Once the energy density of matter dropped below that of the vacuum (about six billion years ago), acceleration ensued.

The most convincing evidence for the accelerating universe came from combining detailed observations of the fluctuations in the cosmic microwave background by the Wilkinson Microwave Anisotropy Probe (WMAP) with those of supernovae, and supplementing those observations with separate measurements of the current expansion rate (the Hubble constant). Putting all of the observational constraints together, astronomers were able to determine precisely the current contribution of the putative vacuum energy to the total cosmic energy budget. The observations revealed that matter (ordinary and dark together) contributes only about 27 percent of the universe’s energy density, while “dark energy”—the name given to the smooth component that is consistent with being the vacuum energy—contributes about 73 percent. In other words, Einstein’s diehard cosmological constant, or something very much like its contemporary “flavor”—the energy of empty space—is currently the dominant energy form in the universe!
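The 27/73 budget, combined with the earlier point that matter dilutes as space expands while the vacuum energy density does not, is enough to estimate how much smaller the universe was when the two densities were equal. The sketch below assumes that matter density scales as the inverse cube of the cosmic scale factor (with the scale factor set to 1 today) and ignores radiation. The optional `vacuum_weight` parameter is an addition from the textbook Friedmann acceleration equation rather than from this chapter: because the vacuum's negative pressure also gravitates, that equation puts the onset of acceleration where matter density falls below twice the vacuum density.

```python
def crossover_scale_factor(omega_matter=0.27, omega_vacuum=0.73, vacuum_weight=1.0):
    """Scale factor a (a = 1 today) at which matter density equals
    vacuum_weight times the vacuum density.

    Matter density today is omega_matter and grows as a**-3 going back in time;
    the vacuum density stays fixed at omega_vacuum.
    """
    return (omega_matter / (vacuum_weight * omega_vacuum)) ** (1.0 / 3.0)


def redshift(scale_factor):
    """Redshift corresponding to a given scale factor."""
    return 1.0 / scale_factor - 1.0


if __name__ == "__main__":
    a_equal = crossover_scale_factor()
    a_accel = crossover_scale_factor(vacuum_weight=2.0)
    print(f"densities equal at a = {a_equal:.2f} (z = {redshift(a_equal):.2f})")
    print(f"acceleration onset at a = {a_accel:.2f} (z = {redshift(a_accel):.2f})")
```

With these inputs the densities match when the universe was a bit more than 70 percent of its present linear size, and the textbook acceleration criterion is met somewhat earlier, at a bit under 60 percent. Turning either scale factor into a number of years requires a full expansion history and is not attempted here.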

To be clear, the measured value of the energy density associated with the cosmological constant is still some 53 to 123 orders of magnitude smaller than what naïve calculations of the energy of the vacuum produce, but the fact that it is definitely not zero has frustrated much wishful thinking on the part of many theoretical physicists. Recall that given the incredible discordance between any reasonable value for the cosmological constant—one that the universe could accommodate without bursting at the seams—and the theoretical expectations, physicists were anticipating that some yet-undiscovered symmetry would lead to the complete cancellation of the cosmological constant. That is, they hoped that the different contributions of the various zero-point energies, as large as they might be individually, would come in pairs of opposite signs so that the net result would be zero.

Some of these expectations were hung on concepts such as supersymmetry: particle physicists predict that every particle we know and love, such as electrons and quarks (the constituents of protons and neutrons), should have yet-to-be-found supersymmetric partners that have the same charges (for example, electrical and nuclear), but spins removed by a half quantum mechanical unit. For instance, the electron has a spin of 1/2, and its “shadow” supersymmetric partner is supposed to have spin of 0. If all superpartners were also to have the same mass as their known partners, then the theory predicts that the contribution of each such pair would indeed cancel out. Unfortunately, we know that the superpartners of the electron, the quark, and the elusive neutrino cannot have the same mass, respectively, as the electron, quark, and neutrino, or they would have been discovered already. When this fact is taken into account, the total contribution to the vacuum energy is larger than the observed one by some 53 orders of magnitude. One might still have hoped that another, yet-unthought-of symmetry would produce the desired cancellation. However, the breakthrough measurement of the cosmic acceleration has shown that this is not very likely. The exceedingly small but nonzero value of the cosmological constant has convinced many theorists that it is hopeless to seek an explanation relying on symmetry arguments. After all, how can you reduce a number to 0.00000000000000000000000000000000000000000000000000001 of its original value without canceling it out altogether? This remedy seems to require a level of fine-tuning that most physicists are unwilling to accept. It would have been much easier, in principle, to imagine a hypothetical scenario that would make the vacuum energy precisely zero than one that would set it to the observed minuscule value. So, is there a way out? In desperation, some physicists have taken to relying on one of the most controversial concepts in the history of science—anthropic reasoning—a line of thought in which the mere existence of human observers is assumed to be part of the explanation. Einstein himself had nothing to do with this development, but it was the cosmological constant—Einstein’s brainchild or “blunder”—that has convinced quite a few of today’s leading theorists to consider this condition seriously. Here is a concise explanation of what the fuss is all about.