1989 - Seeing Voices (16 page)


Authors: Oliver Sacks

94. Supalla and Newport, 1978.

The most systematic research on the use of time in Sign has been done by Scott Liddell and Robert Johnson and their colleagues at Gallaudet. Liddell and Johnson see signing not as a succession of instantaneous ‘frozen’ configurations in space, but as continually and richly modulated in time, with a dynamism of ‘movements’ and ‘holds’ analogous to that of music or speech. They have demonstrated many types of sequentiality in ASL signing—sequences of handshapes, locations, non-manual signs, local movements, movements-and-holds—as well as internal (phonological) segmentation within signs. The simultaneous model of structure is not able to represent such sequences, and may indeed prevent their being seen. Thus it has been necessary to replace the older static notions and descriptions with new, and often very elaborate, dynamic notations, which have some resemblances to the notations for dance and music.

95

95. See Liddell and Johnson, 1989, and Liddell and Johnson, 1986.

No one has watched these new developments with more interest than Stokoe himself, and he has focused specifically on the powers of ‘language in four dimensions’:

96

96. Stokoe, 1979.

Speech has only one dimension—its extension in time; writing has two dimensions; models have three; but only signed languages have at their disposal four dimensions—the three spatial dimensions accessible to a signer’s body, as well as the dimension of time. And Sign fully exploits the syntactic possibilities in its four-dimensional channel of expression.

The effect of this, Stokoe feels (and here he is supported by the intuitions of Sign artists, playwrights, and actors), is that signed language is not merely prose-like and narrative in structure, but essentially ‘cinematic’ too:

In a signed language…narrative is no longer linear and prosaic. Instead, the essence of sign language is to cut from a normal view to a close-up to a distant shot to a close-up again, and so on, even including flashback and flash-forward scenes, exactly as a movie editor works…Not only is signing itself arranged more like edited film than like written narration, but also each signer is placed very much as a camera: the field of vision and angle of view are directed but variable. Not only the signer signing but also the signer watching is aware at all times of the signer’s visual orientation to what is being signed about.

Thus, in this third decade of research, Sign is seen as fully comparable to speech (in terms of its phonology, its temporal aspects, its streams and sequences), but with unique, additional powers of a spatial and cinematic sort—at once a most complex and yet transparent expression and transformation of thought.

97

97. Again, Stokoe describes some of this complexity:

When three or four signers are standing in a natural arrangement for sign conversation…the space transforms are by no means 180-degree rotations of the three-dimensional visual world but involve orientations that non-signers seldom if ever understand. When all the transforms of this and other kinds are made between the signer’s visual three-dimensional field and that of each watcher, the signer has transmitted the content of his or her world of thought to the watcher. If all the trajectories of all the sign actions—direction and direction-change of all upper arms, forearm, wrist, hand and finger movement, all the nuances of all the eye and face and head action—could be described, we would have a description of the phenomena into which thought is transformed by a sign language…These superimpositions of semantics onto the space-time manifold need to be separated out if we are to understand how language and thought and the body interact.

The cracking of this enormously complex, four-dimensional structure may need the most formidable hardware, as well as an insight approaching genius.

98

98. ‘We currently analyze three dimensional movement using a modified OpEye system, a monitoring apparatus permitting rapid high-resolution digitalization of hand and arm movements…Opto-electronic cameras track the positions of light-emitting diodes attached to the hands and arms and provide a digital output directly to a computer, which calculates three-dimensional trajectories’ (Poizner, Klima, and Bellugi, 1987, p. 27). See fig. 2.

And yet it can also be cracked, effortlessly, unconsciously, by a three-year-old signer.

99

99. Though unconscious, learning language is a prodigious task—but despite the differences in modality, the acquisition of ASL by deaf children bears remarkable similarities to the acquisition of spoken language by a hearing child. Specifically, the acquisition of grammar seems identical, and this occurs relatively suddenly, as a reorganization, a discontinuity in thought and development, as the child moves from gesture to language, from prelinguistic pointing or gesture to a fully grammaticized linguistic system: this occurs at the same age (roughly twenty-one to twenty-four months) and in the same way, whether the child is speaking or signing.

What goes on in the mind and brain of a three-year-old signer, or any signer, that makes him such a genius at Sign, makes him able to use space, to ‘linguisticize’ space, in this astonishing way? What sort of hardware does he have in his head? One would not think, from the ‘normal’ experience of speech and speaking, or from the neurologist’s understanding of speech and speaking, that such spatial virtuosity could occur. It may indeed not be possible for the ‘normal’ brain (i.e., the brain of someone who has not been exposed early to Sign).

100

What then is the neurological basis of Sign?

100. It has been shown by Elissa Newport and Ted Supalla (see Rymer, 1988) that late learners of Sign—which means anyone who learns Sign after the age of five—though competent enough, never master its full subtleties and intricacies, are not able to ‘see’ some of its grammatical complexities. It is as if the development of special linguistic-spatial ability, of a special left hemisphere function, is only fully possible in the first years of life. This is also true for speech. It is true for language in general. If Sign is not acquired in the first five years of life, but is acquired later, it never has the fluency and grammatical correctness of native Sign: some essential grammatical aptitude has been lost. Conversely, if a young child is exposed to less-than-perfect Sign (because the parents, for example, only learned Sign late), the child will nonetheless develop grammatically correct Sign—another piece of evidence for an innate grammatical aptitude in childhood.

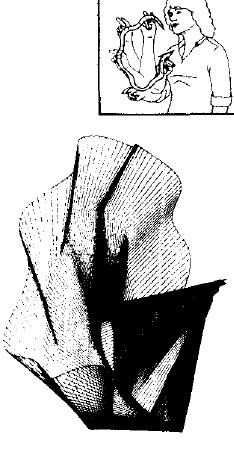

LOOK ALL OVER


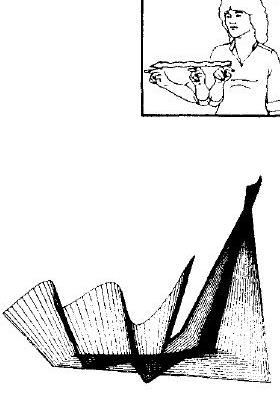

LOOK ACROSS A SERIES


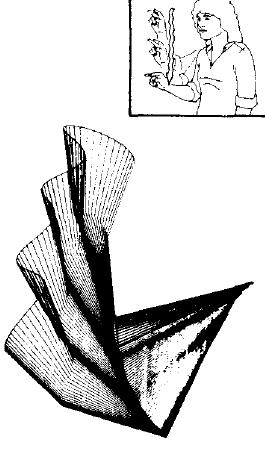

LOOK AT INTERNAL FEATURES


Figure 2. Computer-generated images showing three different grammatical inflections of the sign LOOK. The beauty of a spatial grammar, with its complex three-dimensional trajectories, is well brought out by this technique (see footnote 98, p. 91). (Reprinted by permission from Ursula Bellugi, The Salk Institute for Biological Studies, La Jolla, California.)

Having spent the 1970’s exploring the structure of sign languages, Ursula Bellugi and her colleagues are now examining its neural substrates. This involves, among other methods, the classical method of neurology, which is to analyze the effects produced by various lesions of the brain—the effect, here, on sign language and on spatial processing generally, as these may be observed in deaf signers with strokes or other lesions.

It has been thought for a century or more (since Hughlings-Jackson’s formulations in the 1870’s) that the left hemisphere of the brain is specialized for analytic tasks, above all for the lexical and grammatical analysis that makes the understanding of spoken language possible. The right hemisphere has been seen as complementary in function, dealing in wholes rather than parts, with synchronous perceptions rather than sequential analyses, and, above all, with the visual and spatial world. Sign languages clearly cut across these neat boundaries—for on the one hand, they have lexical and grammatical structure, but on the other, this structure is synchronous and spatial. Thus it was quite uncertain even a decade ago, given these peculiarities, whether sign language would be represented in the brain unilaterally (like speech) or bilaterally; which side, if unilateral, it would be represented on; whether, in the event of a sign aphasia, syntax might be disturbed independently of lexicon; and, most intriguingly, given the interweaving of grammatical and spatial relations in Sign, whether spatial processing, overall spatial sense, might have a different (and conceivably stronger) neural basis in deaf signers.

These were some of the questions faced by Bellugi and her colleagues when they launched their research.

101

101. The prescient Hughlings-Jackson wrote a century ago: ‘No doubt, by disease of some part of the brain the deaf-mute might lose his natural system of signs which are of some speech-value to him,’ and thought this would have to affect the left hemisphere.

At the time, actual reports on the effects of strokes and other brain lesions on signing were rare, unclear, and often inadequately studied, in part because there was little differentiation between finger spelling and Sign. Indeed, Bellugi’s first and central finding was that the left hemisphere of the brain is essential for Sign, as it is for speech, and that Sign uses some of the same neural pathways as are needed for the processing of grammatical speech but, in addition, some pathways normally associated with visual processing.

That signing uses the left hemisphere predominantly has also been shown by Helen Neville, who has demonstrated that Sign is ‘read’ more rapidly and accurately by signers when it is presented in the right visual field (information from each side of the visual field is always processed in the opposite hemisphere). This may also be shown, in the most dramatic way, by observing the effects of lesions (from strokes, etc.) in certain areas of the left hemisphere. Such lesions may cause an aphasia for Sign—a breakdown in the understanding or use of Sign analogous to the aphasias of speech. Such sign aphasias can affect either the lexicon or the grammar (including the spatially organized syntax) of Sign differentially, as well as impairing the general power to ‘propositionize’ which Hughlings-Jackson saw as central to language.

102

102. The kinship of speech aphasia and sign aphasia is illustrated in a recent case reported by Damasio et al. in which a Wada test (an injection of sodium amytal into the left carotid artery—to determine whether or not the left hemisphere was dominant) given to a young, hearing Sign interpreter with epilepsy brought about a temporary aphasia of both speech and Sign. Her ability to speak English started to recover after four minutes; the sign aphasia lasted a minute or so longer. Serial PET scans were done throughout the procedure and showed that roughly similar portions of the left hemisphere were involved in speech and signing, although the latter seemed to require larger brain areas, in particular the left parietal lobe, as well (Damasio et al., 1986).