A Field Guide to Lies: Critical Thinking in the Information Age

Authors: Daniel J. Levitin

This is the core reason why rumors, counterknowledge, and pseudo-facts can be so easily propagated by the media, as when Geraldo Rivera contributed to a national panic about Satanists taking over America in 1987. There have been similar media scares about alien abduction and repressed memories.

As Damian Thompson notes, “For a hard-pressed news editor, anguished testimony trumps dry and possibly inconclusive statistics every time.”

Perception of Risk

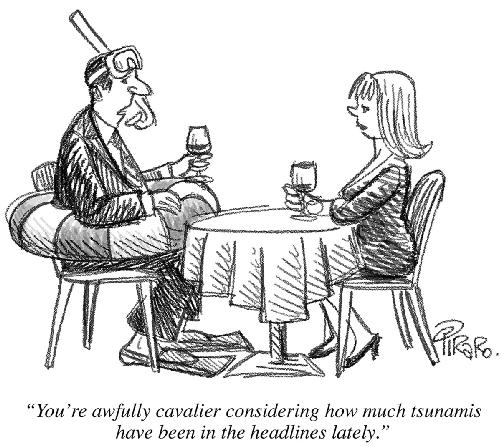

We assume that newspaper space given to crime reporting is a measure of crime rate. Or that the amount of newspaper coverage given over to different causes of death correlates to risk. But assumptions like this are unwise. About five times more people die each year of stomach cancer than of unintentional drowning. But to take just one newspaper, the Sacramento Bee reported no stories about stomach cancer in 2014, but three on unintentional drownings. Based on news coverage, you’d think that drowning deaths were far more common than stomach-cancer deaths. Cognitive psychologist Paul Slovic showed that people dramatically overweight the relative risks of things that receive media attention. And part of the calculus for whether something receives media attention is whether or not it makes a good story. A death by drowning is more dramatic, more sudden, and perhaps more preventable than death by stomach cancer, all elements that make for a good, though tragic, tale. So drowning deaths are reported more, leading us to believe, erroneously, that they’re more common. Misunderstandings of risk can lead us to ignore or discount evidence we could use to protect ourselves.

Using this principle of misunderstood risk, unscrupulous or simply uninformed amateur statisticians with a media platform can easily bamboozle us into believing many things that are not so.

A front-page headline in the Times (U.K.) in 2015 announced that 50 percent of Britons would contract cancer in their lifetimes, up from 33 percent. This could rise to two-thirds of today’s children, posing a risk that the National Health Service will be overwhelmed by the number of cancer patients. What does that make you think? That there is a cancer epidemic on the rise? Perhaps something about our modern lifestyle with unhealthy junk food, radiation-emitting cell phones, carcinogenic cleaning products, and radiation coming through a hole in the ozone layer is suspect. Indeed, this headline could be used to promote an agenda by any number of profit-seeking stakeholders: health food companies, sunblock manufacturers, holistic medicine practitioners, and yoga instructors.

Before you panic, recognize that this figure represents all kinds of cancer, including slow-moving ones like prostate cancer, melanomas that are easily removed, etc. It doesn’t mean that everyone who contracts cancer will die.

Cancer Research UK (CRUK) reports that the percentage of people beating cancer has doubled since the 1970s, thanks to early detection and improved treatment.

What the headline ignores is that, thanks to advances in medicine, people are living longer.

Heart disease is better controlled than ever and deaths from respiratory diseases have decreased dramatically in the last twenty-five years. The main reason why so many people are dying of cancer is that they’re not dying of other things first. You have to die of something. This idea was contained in the same story in the Times, if you read that far (which many of us don’t; we just stop at the headline and then fret and worry). Part of what the headline statistic reflects is that cancer is an old-person’s disease, and many of us now will live long enough to get it. It is not necessarily a cause for panic. This would be analogous to saying, “Half of all cars in Argentina will suffer complete engine failure during the life of the car.” Yes, of course: the car has to be put out of service for some reason. It could be a broken axle, a bad collision, a faulty transmission, or an engine failure, but it has to be something.
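The logic of "you have to die of something" can be sketched numerically. What follows is a minimal illustration, not a model of real mortality: it assumes constant yearly probabilities of dying from cancer and from all other causes, and the function name and every number in it are hypothetical, chosen only to show the direction of the effect.

```python
def share_dying_of_cause(p_cause: float, p_other: float, max_years: int = 500) -> float:
    """Chance that death is eventually due to the cause of interest.

    Assumes (hypothetically) that each year a living person dies of the cause
    with probability p_cause, of anything else with probability p_other,
    and otherwise survives to the next year.
    """
    alive = 1.0   # probability of still being alive at the start of the year
    share = 0.0   # accumulated probability of having died of the cause
    for _ in range(max_years):
        share += alive * p_cause
        alive *= 1.0 - p_cause - p_other
    return share


# Hypothetical yearly risks: the cancer risk is identical in both scenarios.
before = share_dying_of_cause(p_cause=0.01, p_other=0.02)
after = share_dying_of_cause(p_cause=0.01, p_other=0.01)  # other causes better controlled
print(f"share dying of cancer: {before:.2f} before, {after:.2f} after")
```

With these made-up inputs the share of deaths due to cancer moves from 0.33 to 0.50 even though the yearly cancer risk never changes. Only the competing risks fell.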

Persuasion by Association

If you want to snow people with counterknowledge, one effective technique is to get a whole bunch of verifiable facts right and then add only one or two that are untrue. The ones you get right will have the ring of truth to them, and those intrepid Web explorers who seek to verify them will be successful. So you just add one or two untruths to make your point and many people will haplessly go along with you. You persuade by associating bogus facts or counterknowledge with actual facts and actual knowledge.

Consider the following argument:

- Water is made up of hydrogen and oxygen.

- The molecular symbol for water is H₂O.

- Our bodies are made up of more than 60 percent water.

- Human blood is 92 percent water.

- The brain is 75 percent water.

- Many locations in the world have contaminated water.

- Less than 1 percent of the world’s accessible water is drinkable.

- You can only be sure that the quality of your drinking water is high if you buy bottled water.

- Leading health researchers recommend drinking bottled water, and the majority drink bottled water themselves.

Assertions one through seven are all true. Assertion eight doesn’t follow logically, and assertion nine, well . . . who are the leading health researchers? And what does it mean that they drink bottled water themselves? It could be that at a party, restaurant, or on an airplane, when it is served and there are no alternatives, they’ll drink it. Or does it mean that they scrupulously avoid all other forms of water? There is a wide chasm between these two possibilities.

The fact is that bottled water is at best no safer or healthier than most tap water in developed countries, and in some cases less safe because of laxer regulations.

This is based on reports by a variety of reputable sources, including the Natural Resources Defense Council, the Mayo Clinic, Consumer Reports, and a number of reports in peer-reviewed journals.

Of course, there are exceptions.

In New York City; Montreal; Flint, Michigan; and many other older cities, the municipal water supply is carried by lead pipes and the lead can leach into the tap water and cause lead poisoning. Periodic treatment-plant problems lead city governments to impose a temporary advisory on tap water. And when traveling in Third World countries, where regulation and sanitation standards are lower, bottled water may be the best bet. But tap-water standards in industrialized nations are among the most stringent standards in any industry; save your money and skip the plastic bottle. The argument of pseudo-scientific health advocates as typified by the above does not, er, hold water.

PART THREE

EVALUATING THE WORLD

Nature permits us to calculate only probabilities. Yet science has not collapsed.

—

RICHARD P. FEYNMAN

HOW SCIENCE WORKS

The development of critical thinking over many centuries led to a paradigm shift in human thought and history: the scientific revolution. Without its development and practice in cities like Florence, Bologna, Göttingen, Paris, London, and Edinburgh, to name just a handful of great centers of learning, science may not have come to shape our culture, industry, and greatest ambitions as it has. Science is not infallible, of course, but scientific thinking underlies a great deal of what we do and of how we try to decide what is and isn’t so. This makes it worth taking a close look behind the curtain to better see how it does what it does. That includes seeing how our imperfect human brains, those of even the most rigorous thinkers, can fool themselves.

Unfortunately, we must also recognize that some researchers make up data. In the most extreme cases, they report data that were never collected from experiments that were never conducted. They get away with it because fraud is relatively rare among researchers and so peer reviewers are not on their guard. In other cases, an investigator changes a few data points to make the data more closely reflect his or her pet hypotheses. In less extreme cases, the investigator omits certain data points because they don’t conform to the hypothesis, or selects only cases that he or she knows will contribute favorably to the hypothesis.

A case of fraud occurred in 2015 when Dong-Pyou Han, a former biomedical scientist at Iowa State University in Ames, was found to have fabricated and falsified data about a potential HIV vaccine. In an unusual outcome, he didn’t just lose his job at the university but was sentenced to almost five years in prison.

The entire controversy about whether the measles, mumps, and rubella (MMR) vaccine causes autism was propagated by Andrew Wakefield in an article with falsified data that has now been retracted, and yet millions of people continue to believe in the connection. In some cases, a researcher will manipulate the data or delete data according to established principles, but fail to report these moves, which makes interpretation and replication more difficult (and which borders on scientific misconduct).

The search for proof, for certainty, drives science, but it also drives our sense of justice and all our judicial systems. Scientific practice has shown us the right way to proceed with this search.

There are two pervasive myths about how science is done. The first is that science is neat and tidy, that scientists never disagree about anything. The second is that a single experiment tells us all we need to know about a phenomenon, that science moves forward in leaps and bounds after every experiment is published. Real science is replete with controversy, doubts, and debates about what we really know. Real scientific knowledge is gradually established through many replications and converging findings. Scientific knowledge comes from amassing large amounts of data from a large number of experiments, performed by multiple laboratories. Any one experiment is just a brick in a large wall. Only when a critical mass of experiments has been completed are we in a position to regard the entire wall of data and draw any firm conclusions.

The unit of currency is not the single experiment, but the meta-analysis. Before scientists reach a consensus about something, there has usually been a meta-analysis, tying together the different pieces of evidence for or against a hypothesis.

If the idea of a meta-analysis versus a single experiment reminds you of the selective windowing and small sample problems mentioned in Part Two, it should. A single experiment, even with a lot of participants or observations, could still just be an anomaly—that eighty miles per gallon you were lucky to get the one time you tested your car. A dozen experiments, conducted at different times and places, give you a better idea of how robust the phenomenon is. The next time you read that a new face cream will make you look twenty years younger, or about a new herbal remedy for the common cold, among the other questions you should ask is whether a meta-analysis supports the claim or whether it’s a single study.
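To make the contrast concrete, here is a minimal sketch of one common way to combine studies, inverse-variance (fixed-effect) pooling. The text does not name a particular method, so this choice is an assumption, as are the function name and the fuel-economy numbers, which are invented for illustration. Real meta-analyses also examine heterogeneity between studies and publication bias, which this sketch ignores.

```python
import math


def pool_fixed_effect(estimates, std_errors):
    """Inverse-variance (fixed-effect) pooled estimate and its standard error."""
    weights = [1.0 / se ** 2 for se in std_errors]  # precise studies count more
    total_weight = sum(weights)
    pooled = sum(w * e for w, e in zip(weights, estimates)) / total_weight
    pooled_se = math.sqrt(1.0 / total_weight)
    return pooled, pooled_se


# Hypothetical fuel-economy tests in miles per gallon: one lucky, noisy run
# of 80 mpg followed by three more careful tests.
mpg = [80.0, 31.0, 29.0, 30.0]
se = [20.0, 2.0, 2.0, 2.0]
pooled, pooled_se = pool_fixed_effect(mpg, se)
print(f"pooled estimate: {pooled:.1f} mpg (standard error {pooled_se:.1f})")
```

With these invented inputs the pooled estimate is about 30.2 mpg. The anomalous 80 mpg run barely moves the result because its large uncertainty gives it little weight.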

Deduction and Induction

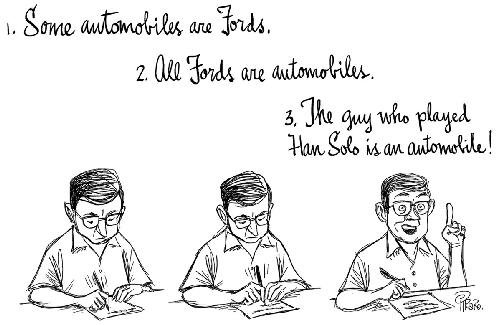

Scientific progress depends on two kinds of reasoning. In deduction, we reason from the general to the specific, and if we follow the rules of logic, we can be certain of our conclusion. In induction, we take a set of observations or facts, and try to come up with a general principle that can account for them. This is reasoning from the specific to the general. The conclusion of inductive reasoning is not certain—it is based on our observations and our understanding of the world, and it involves a leap beyond what the data actually tell us.

Probability, as introduced in Part One, is deductive. We work from general information (such as “this is a fair coin”) to a specific prediction (the probability of getting three heads in a row). Statistics is inductive. We work from a particular set of observations (such as flipping three heads in a row) to a general statement (about whether the coin is fair or not). Or as another example, we would use probability (deduction) to indicate the likelihood that a particular headache medicine will help you. If your headache didn’t go away, we could use statistics (induction) to estimate the likelihood that your pill came from a bad batch.
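The two directions of reasoning in the coin example can be sketched in a few lines. The deductive half follows directly from the text. The inductive half uses Bayes' rule, which is only one of several statistical approaches, and it needs assumptions the text does not supply: a prior belief that the coin is fair and a single hypothetical alternative coin (here, a two-headed one). The function names are illustrative.

```python
from fractions import Fraction


def prob_all_heads(p_heads: Fraction, n_flips: int) -> Fraction:
    """Deduction: from a known coin to the chance of n heads in a row."""
    return p_heads ** n_flips


def posterior_fair(prior_fair: Fraction, p_heads_alt: Fraction, n_heads: int) -> Fraction:
    """Induction via Bayes' rule: from n observed heads to belief that the coin is fair.

    Compares a fair coin with one hypothetical alternative coin whose chance
    of heads is p_heads_alt.
    """
    fair = prior_fair * prob_all_heads(Fraction(1, 2), n_heads)
    alt = (1 - prior_fair) * prob_all_heads(p_heads_alt, n_heads)
    return fair / (fair + alt)


# Deduction: a fair coin gives three heads in a row with probability 1/8.
print(prob_all_heads(Fraction(1, 2), 3))               # 1/8

# Induction: assumed 50/50 prior, alternative is a two-headed coin.
print(posterior_fair(Fraction(1, 2), Fraction(1), 3))  # 1/9
```

The deductive answer is fixed once the premise is granted. The inductive answer changes with the assumed prior and alternative, which is the sense in which induction leaps beyond the data.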

Induction and deduction don’t just apply to numerical things like probability and statistics. Here is an example of deductive logic in words. If the premises (the first two statements) are true, the conclusion must be true as well:

Gabriel García Márquez is a human.

All humans are mortal.

Therefore (this is the deductive conclusion) Gabriel García Márquez is mortal.